the Creative Commons Attribution 4.0 License.

Assessing boundary condition and parametric uncertainty in numerical-weather-prediction-modeled, long-term offshore wind speed through machine learning and analog ensemble

Weiming Hu

Mike Optis

Guido Cervone

Stefano Alessandrini

To accurately plan and manage wind power plants, not only does the time-varying wind resource at the site of interest need to be assessed but also the uncertainty connected to this estimate. Numerical weather prediction (NWP) models at the mesoscale represent a valuable way to characterize the wind resource offshore, given the challenges connected with measuring hub-height wind speed. The boundary condition and parametric uncertainty associated with modeled wind speed is often estimated by running a model ensemble. However, creating an NWP ensemble of long-term wind resource data over a large region represents a computational challenge. Here, we propose two approaches to temporally extrapolate wind speed boundary condition and parametric uncertainty using a more convenient setup in which a mesoscale ensemble is run over a short-term period (1 year), and only a single model covers the desired long-term period (20 years). We quantify hub-height wind speed boundary condition and parametric uncertainty from the short-term model ensemble as its normalized across-ensemble standard deviation. Then, we develop and apply a gradient-boosting model and an analog ensemble approach to temporally extrapolate such uncertainty to the full 20-year period, for which only a single model run is available. As a test case, we consider offshore wind resource characterization in the California Outer Continental Shelf. Both of the proposed approaches provide accurate estimates of the long-term wind speed boundary condition and parametric uncertainty across the region (R2>0.75), with the gradient-boosting model slightly outperforming the analog ensemble in terms of bias and centered root-mean-square error. At the three offshore wind energy lease areas in the region, we find a long-term median hourly uncertainty between 10 % and 14 % of the mean hub-height wind speed values. Finally, we assess the physical variability in the uncertainty estimates. In general, we find that the wind speed uncertainty increases closer to land. Also, neutral conditions have smaller uncertainty than the stable and unstable cases, and the modeled wind speed in winter has less boundary condition and parametric sensitivity than summer.

This work was authored in part by the National Renewable Energy Laboratory, operated by Alliance for Sustainable Energy, LLC, for the U.S. Department of Energy (DOE) under Contract No. DE-AC36-08GO28308. Funding provided by the U.S. Department of Energy Office of Energy Efficiency and Renewable Energy Wind Energy Technologies Office, by the Bureau of Ocean Energy Management (BOEM) under agreement no. IAG-19-2123 and by the National Offshore Wind Research and Development Consortium under agreement no. CRD-19-16351. The views expressed in the article do not necessarily represent the views of the DOE or the U.S. Government. The U.S. Government retains and the publisher, by accepting the article for publication, acknowledges that the U.S. Government retains a nonexclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this work, or allow others to do so, for U.S. Government purposes.

Offshore wind energy keeps increasing its market penetration as an inexpensive and clean source of energy. In some areas of the world, such as the North Sea in Europe, offshore wind represents a well-established source of electricity, with a total installed capacity of about 15 GW and a planned increase of up to 74 GW by 2030 (van Hoof, 2017). As the cost of offshore wind energy has been decreasing faster than expected (Stiesdal, 2016; Brandily, 2020), many other regions are currently planning to adopt offshore wind energy solutions to meet their energy needs. The United States falls within this group, with its offshore technical resource potential being estimated to be about twice the present national energy demand (Musial et al., 2016). While one single 30 MW offshore wind power plant has been operating since 2016 (Deepwater Wind, 2016), many other offshore wind plants are being planned, mostly concentrated along the eastern seaboard and the Outer Continental Shelf (OCS) off the coast of California, for a total of about 86 GW installed capacity expected by 2050 (Bureau of Ocean Energy Management, 2018).

Such extensive growth requires an accurate long-term characterization of the offshore wind resource (Brower, 2012). Direct observations of the wind resource offshore are oftentimes limited to buoys, which offer measurements at very limited heights. Hub-height measurement of the wind resource offshore can be achieved with either offshore meteorological towers (e.g., Neumann et al., 2004; Fabre et al., 2014; Peña et al., 2014; Kirincich, 2020) or floating lidars (Carbon Trust Offshore Wind Accelerator, 2018; OceanTech Services/DNV GL, 2020). However, the often prohibitive costs connected to both these measurement solutions limit their availability to a handful of locations, despite recent efforts in leveraging their single-point hub-height measurements for wind speed vertical extrapolation over a larger region (Bodini and Optis, 2020; Optis et al., 2021a). Given these constraints, numerical weather prediction (NWP) models at the mesoscale are often used to obtain a mapping, continuous in space and time, of the available offshore wind resource at the heights relevant for commercial wind power plant deployment (e.g., Mattar and Borvarán, 2016; Salvação and Soares, 2018), with some studies (Papanastasiou et al., 2010; Steele et al., 2013; Arrillaga et al., 2016) also focusing on the validation of modeled coastal wind effects, such as sea breezes, which have a significant impact on offshore wind energy production (Archer et al., 2014).

Tens of billions of dollars will be invested in the US offshore wind energy industry in the coming years. In order to minimize the financial risk associated with such major investments, not only is a characterization of the time-varying offshore wind resource needed, but an assessment of the uncertainty connected to this numerical prediction is of primary importance. A 1 % uncertainty change in the mean wind resource translates to a 1.6 %–1.8 % uncertainty for the long-term wind plant annual energy production (Johnson et al., 2008; White, 2008; Holstag, 2013; Truepower, 2014) with a significant increase in the interest rates for new project financing. However, assessing the uncertainty in modeled wind speed is a problematic task. NWP model ensembles tend to lead to an underdispersive behavior (Buizza et al., 2008; Alessandrini et al., 2013) so that only a limited component of the actual wind speed error with respect to observations can be quantified. The full uncertainty in NWP-model-predicted wind speed can be quantified only when direct observations of the wind resource are available. In this scenario, the residuals between modeled and observed wind speed can be calculated, and the model error is quantified in terms of its bias (i.e., the mean of the residuals) and uncertainty (i.e., the standard deviation of the residuals). The obtained model uncertainty would then be added to the inherent uncertainty of the wind speed measurements by using a sum of squares approach (JCGM 100:2008, 2008). However, as we have already mentioned, direct observations of the wind resource are not always readily available, especially offshore, so that other ways to quantify at least specific components of the full wind speed uncertainty are needed.

When considering NWP models, the choices of the model setup and inputs have a direct impact on the model wind speed prediction and therefore on its uncertainty. Hahmann et al. (2020) recently provided a detailed analysis of the sensitivity in wind speed predicted by NWP models as part of the development of the New European Wind Atlas. Among the various sources of uncertainty, the choices of the planetary boundary layer (PBL) scheme (Ruiz et al., 2010; Carvalho et al., 2014a; Hahmann et al., 2015; Olsen et al., 2017) and of the large-scale atmospheric forcing (Carvalho et al., 2014b; Siuta et al., 2017) have been shown to have a major impact. Model resolution (Hahmann et al., 2015; Olsen et al., 2017), spin-up time (Hahmann et al., 2015), and data assimilation techniques (Ulazia et al., 2016) have also been shown to contribute to the wind speed sensitivity. The variability in modeled wind speed that derives from all the different model choices leads to what we will call boundary condition and parametric uncertainty of the modeled wind speed. Optis et al. (2021b) recently explored best practices for quantifying and communicating NWP-modeled wind speed boundary condition and parametric uncertainty offshore. In their approach, an ensemble of Weather Research and Forecasting (WRF) model (Skamarock et al., 2008) simulations is created by considering different WRF versions, namelists, and external forcings, and the wind speed boundary condition and parametric uncertainty is then quantified in terms of its across-ensemble variability. The use of numerical ensembles for uncertainty quantification is not exclusive to the wind energy community, as it has been extensively applied across a wide spectrum of fields (e.g., Zhu, 2005; Parker, 2013; Murphy et al., 2004). However, running an NWP ensemble across a large region and for the long-term period needed for an accurate characterization of the naturally varying wind resource is computationally prohibitive, so that innovative and more computationally efficient ways are needed to quantify some components of the long-term wind speed uncertainty.

Here, we consider wind speed characterization in the California OCS, and we propose and compare two innovative techniques for modeled wind speed long-term boundary condition and parametric uncertainty quantification. To do so, we consider a setup that is computationally more affordable, wherein WRF ensembles are only run over a short period (1 year) and are accompanied by a single, long-term (20 years) WRF simulation. First, we use a machine-learning algorithm to temporally extrapolate the WRF-based boundary condition and parametric uncertainty from the ensemble year to the full 20-year period. While machine learning has been successfully applied to various atmospheric (e.g., Xingjian et al., 2015; Gentine et al., 2018; Bodini et al., 2020) and wind-energy-related (e.g., Clifton et al., 2013; Arcos Jiménez et al., 2018; Optis and Perr-Sauer, 2019) problems, this represents, to the authors' knowledge, its first application for NWP uncertainty extrapolation. We compare the machine-learning-based approach with the predictions from the analog ensemble (AnEn) technique (Delle Monache et al., 2013) to quantify uncertainty in the wind resource from the variability in modeled cases with similar atmospheric conditions. Typical applications of AnEn include renewable energy probabilistic forecast for both solar (Alessandrini et al., 2015a, b; Cervone et al., 2017) and wind (Junk et al., 2015; Vanvyve et al., 2015) energy. The use of AnEn for long-term offshore wind speed uncertainty quantification represents a novel application of the technique.

In the remainder of this paper, we describe the experimental setup and our proposed methods to quantify and temporally extrapolate modeled wind speed boundary condition and parametric uncertainty in Sect. 2. Section 3 validates the techniques used and compares the mean long-term predictions from the two approaches. Also, we discuss physical insights into the main drivers for offshore wind speed boundary condition and parametric uncertainty. Finally, we conclude and suggest future work in Sect. 4.

2.1 Numerical simulation setup

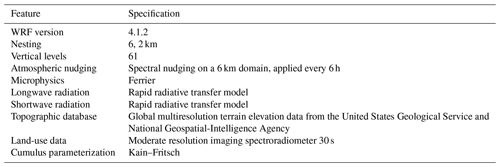

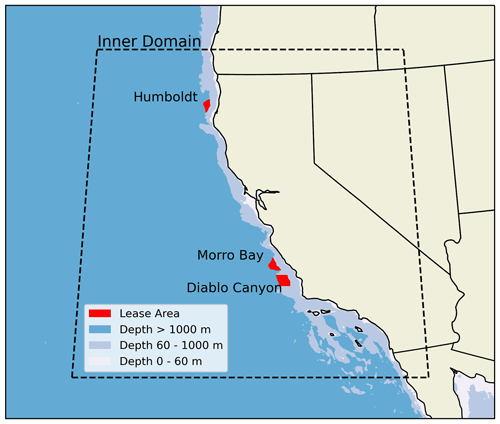

We consider a 20-year numerical data set recently developed by the National Renewable Energy Laboratory to provide accurate cost estimates for floating wind in the California OCS (Fig. 1).

Figure 1. Map of the inner domain of the WRF numerical simulations for the California OCS. The current three wind energy lease areas are shown in red.

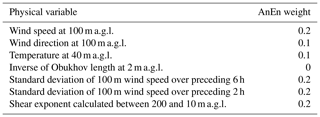

As described in detail in Optis et al. (2020), this product includes a single WRF setup that is run for a 20-year period (2000–2019) and an additional 15 WRF ensemble members run over a single year (2017), which was selected because of strong data coverage from the network of buoy and coastal radar observations used for model validation. All of the simulations are run with the common attributes in Table 1. A total of over 200 000 grid cells are included in the WRF inner domain, which we consider in our uncertainty analysis.

The 16 WRF ensemble members are constructed based on variations in boundary conditions and key WRF model parameters that previous research determined to have a primary impact on modeled wind speed:

- Reanalysis forcing product is selected between ERA5, developed by the European Centre for Medium-Range Weather Forecasts (Hersbach et al., 2020), and the Modern-Era Retrospective analysis for Research and Applications, Version 2 (Gelaro et al., 2017), developed by the National Aeronautics and Space Administration.

- PBL parameterization is chosen between the Mellor–Yamada–Nakanishi–Niino (MYNN; Nakanishi and Niino, 2004) and the Yonsei University (Hong et al., 2006) schemes.

- Sea surface temperature product is selected between the Operational Sea Surface Temperature and Sea Ice Analysis (OSTIA) data set produced by the UK Met Office (Donlon et al., 2012) and the National Center for Environmental Prediction Real-Time Global product.

- Land surface model is chosen between the Noah model and the updated Noah multiparameterization model (Niu et al., 2011).

The setup we chose to use for the single long-term WRF run is the result of a validation process. We compared and validated the 16 model setups with observations from buoys from the National Data Buoy Center and coastal radar measurements from the National Oceanic and Atmospheric Administration profiler network. While these are the only observations in the area available for model validation, these data sets cannot be used for quantifying the model uncertainty in wind speed. In fact, both data sources have significant limitations that do not allow for a direct comparison with offshore WRF data at heights relevant for wind energy development. On one hand, buoys only measure wind speed close to the water level, which can have a very different regime than the hub-height winds. On the other hand, the coastal radars measure at more relevant heights for wind energy but only at the interface between the ocean and land. Results from the validation (whose details can be found in Optis et al., 2020) revealed that the WRF setup providing the most accurate results is the one using ERA5 as reanalysis product, MYNN as a PBL scheme, OSTIA as a sea surface temperature product, and Noah as a land surface model. Therefore, we selected and adopted this WRF setup for the single 20-year WRF run.

In our analysis, we use hourly average data (calculated from 5 min WRF raw output), and we quantify the WRF wind speed boundary condition and parametric sensitivity in terms of the across-ensemble standard deviation of the WRF-predicted 100 m wind speed at any hour, t, normalized by the hourly average 100 m wind speed itself:

$$\sigma_{\mathrm{WS}}(t) = \frac{\sqrt{\dfrac{1}{N}\sum_{i=1}^{N}\left(\mathrm{WS}_i(t) - \overline{\mathrm{WS}}(t)\right)^2}}{\mathrm{WS}_1(t)} \tag{1}$$

where WSi is the mean hourly 100 m wind speed from each ensemble member, $\overline{\mathrm{WS}}$ is the mean hourly wind speed averaged across the 16 ensemble members, WS1 is the mean hourly 100 m wind speed from the WRF control run (i.e., the one used for the long-term period), and N=16 is the total number of WRF ensemble members. Within a numerical ensemble framework, the use of (normalized) standard deviation as a primary uncertainty metric has been recommended by Optis et al. (2021b) as it provides more consistent estimates than the ensemble interquartile range. While we acknowledge that our quantification of the WRF wind speed boundary condition and parametric sensitivity is limited by the finite number of choices made to construct the ensemble members, we note that the considered set of settings represents either state-of-the-art products or the most popular and widely accepted choices for WRF applied to wind resource characterization. In the next sections, we present the two approaches we propose to temporally extrapolate this boundary condition and parametric uncertainty from 2017 (i.e., the only year when the uncertainty can be directly calculated from the WRF ensemble members) to the remaining 19 years.
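As an illustration of the uncertainty metric described above, the following minimal sketch computes the across-ensemble standard deviation of hourly wind speed and normalizes it by the control-run wind speed. The function name, the array layout, and the toy numbers are assumptions made for this example, and the `ddof` argument is exposed because the choice between the population and sample estimator is also an assumption here.

```python
import numpy as np

def normalized_ensemble_std(ws_members, ws_control, ddof=0):
    """Across-ensemble std of wind speed, normalized by the control run.

    ws_members: array of shape (n_members, n_hours), hourly 100 m wind speed
    ws_control: array of shape (n_hours,), wind speed from the control run
    ddof: 0 for the population estimator, 1 for the sample estimator
          (which one applies is an assumption of this sketch)
    """
    ws_members = np.asarray(ws_members, dtype=float)
    ws_control = np.asarray(ws_control, dtype=float)
    spread = ws_members.std(axis=0, ddof=ddof)  # std across members, per hour
    return spread / ws_control

# Toy data (hypothetical): 4 members, 3 hours; member 0 plays the control run.
ws = np.array([[8.0, 10.0, 6.0],
               [9.0, 10.0, 6.5],
               [7.0, 10.0, 5.5],
               [8.0, 10.0, 6.0]])
sigma = normalized_ensemble_std(ws, ws[0])
```

In the toy data the second hour has identical wind speed in every member, so its normalized uncertainty is zero, while the first hour has the largest relative spread.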

2.2 Machine-learning approach

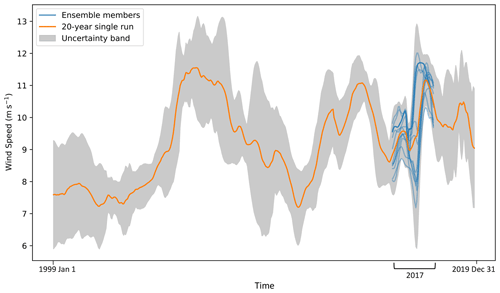

The first approach we use to temporally extrapolate the boundary condition and parametric uncertainty in 100 m modeled wind speed is a machine-learning gradient-boosting model (GBM) (Friedman, 2002). We select a GBM because ensemble-based algorithms are known to provide robust and accurate predictions in nonlinear problems. Moreover, we have tested a set of other machine-learning algorithms (random forest, generalized additive model), and the GBM provided the lowest prediction error. With this approach, at each of the more than 200 000 grid cells, we train the model on calendar year 2017 to predict the WRF across-ensemble standard deviation of hourly average 100 m wind speed, normalized by the hourly average 100 m wind speed itself (Eq. 1). We then apply the trained model to quantify the modeled wind speed boundary condition and parametric uncertainty in the remaining 19 years for which only the single WRF run is available (Fig. 2).

Figure 2. Qualitative illustration of the concept used to temporally extrapolate the 100 m modeled wind speed boundary condition and parametric uncertainty through the proposed machine-learning and analog ensemble approaches. Wind speed uncertainty is directly quantified as its WRF across-ensemble normalized standard deviation (Eq. 1) for the single year in which ensembles were run and then extrapolated to the remaining 19 years, which were run with only a single WRF setup using the proposed GBM and AnEn approaches.

The input features we use to feed the GBM are all taken (as hourly averages) from the single WRF setup that is run for the full 20-year period and are

- wind speed at 100 m above ground level (a.g.l.)

- sine and cosine of wind direction at 100 m a.g.l.

- air temperature at 40 m a.g.l.

- wind shear coefficient calculated between 10 and 200 m a.g.l.

- inverse of Obukhov length at 2 m a.g.l.

- 100 m wind speed standard deviation calculated from the preceding 2 h

- 100 m wind speed standard deviation calculated from the preceding 6 h

- sine and cosine of the hour of the day

- sine and cosine of the month.

The distribution of these variables is presented and discussed in Sect. 3.1. We acknowledge that a correlation exists between some of the input features used. However, we found that including all the features produced the best model accuracy. Principal component analysis could be applied to reduce the number of features used, but it is beyond the scope of our analysis. We also acknowledge that different choices for the atmospheric stability parameter could be explored, potentially leading to a more accurate representation of stability at the heights of interest for wind energy development compared to the near-surface Obukhov length.
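Several of the input features listed above are derived quantities. The sketch below shows one plausible way to build them: a sine and cosine encoding that keeps cyclic variables continuous across their wrap-around point, a trailing standard deviation, and a shear coefficient. All function names are hypothetical; the power-law form of the shear coefficient and the choice to compute the trailing standard deviation over a fixed number of preceding samples are assumptions, since the text does not spell them out.

```python
import numpy as np

def cyclic_encode(values, period):
    """Map a cyclic variable (direction, hour, month) to (sin, cos)."""
    angle = 2.0 * np.pi * np.asarray(values, dtype=float) / period
    return np.sin(angle), np.cos(angle)

def trailing_std(x, window):
    """Std of the `window` samples preceding each time step.

    The current sample is excluded; entries without enough history are NaN.
    """
    x = np.asarray(x, dtype=float)
    out = np.full(x.shape, np.nan)
    for t in range(window, len(x)):
        out[t] = x[t - window:t].std()
    return out

def shear_exponent(ws_low, ws_high, z_low=10.0, z_high=200.0):
    """Power-law shear exponent between two heights (assumed definition)."""
    return np.log(np.asarray(ws_high) / np.asarray(ws_low)) / np.log(z_high / z_low)

# Hour 0 and hour 24 map to the same point on the unit circle.
s0, c0 = cyclic_encode(0, 24)
s24, c24 = cyclic_encode(24, 24)
east_sin, east_cos = cyclic_encode(90.0, 360.0)
rolling = trailing_std([1.0, 2.0, 3.0, 4.0], window=2)
alpha = shear_exponent(5.0, 5.0 * 20.0 ** 0.14)
```

Encoding each cyclic variable as a pair avoids the artificial jump between, for example, 359° and 0° that a raw angle would present to a tree-based model.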

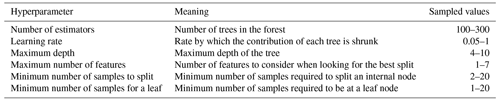

The learning algorithm is trained using the root-mean-square error (RMSE) as a performance metric to tune the algorithm weights. To avoid overfitting, we implement regularization during the training of the learning algorithm using the hyperparameters and value ranges listed in Table 2. At each site, we sampled 20 combinations of hyperparameters using a randomized cross validation. More details about the validation of the results from the proposed approach are given in Sect. 3.1.

2.3 Analog ensemble approach

The second approach we use to quantify and extrapolate modeled wind speed boundary condition and parametric uncertainty is based on the AnEn approach. At each site and for each hour (hereafter referred to as the “target hour”), the AnEn considers a set of atmospheric variables, which are consistent between the AnEn and the machine-learning approach, in a 3 h window centered on the considered time stamp. Then, the AnEn looks for analog atmospheric conditions at the considered site using data from the single long-term WRF setup for the year 2017. In more detail, the multivariate atmospheric state within the considered time window is compared with the atmospheric conditions modeled by the long-term WRF setup in all of the 3 h time windows in 2017. For each hour in 2017, the AnEn calculates a similarity metric, formally defined as a multivariate Euclidean distance measure (Delle Monache et al., 2013):

$$\lVert F_t, A_{t'} \rVert = \sum_{i=1}^{N} \frac{\omega_i}{\sigma_i} \sqrt{\sum_{j=-1}^{1} \left(F_{i,t+j} - A_{i,t'+j}\right)^2} \tag{2}$$

where F is the WRF-modeled atmospheric state at the search window centered at time, t (where t varies over the full 20-year period); A is the WRF-modeled atmospheric state over a window centered at time, t′ (where t′ varies in 2017); N is the number of atmospheric variables being considered to identify the analogs; ωi is the predictor weight associated with the atmospheric variable, i; and σi is the standard deviation of the atmospheric variable, i, calculated over the search period.

Once the similarity metric is calculated for all of the hours (t′) in 2017, the m analog hours with the highest similarity are selected to form the analog ensemble. Finally, the WRF-modeled across-ensemble 100 m wind speed standard deviation for each analog hour is considered. The average of these m values, normalized by the 100 m wind speed at that target hour from the single long-term WRF run, is then used as the AnEn extrapolated uncertainty to associate with the initial target hour. As previously mentioned, the AnEn approach is then repeated at each grid cell in the domain and target hour to generate an m-member ensemble forecast for the full long-term period.
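The analog search described in this section can be sketched as follows, assuming the weighted, variance-scaled Euclidean distance of Delle Monache et al. (2013) evaluated over a window of ±k hours. The function name, argument names, array layout, and toy data are all hypothetical, and the sketch ignores edge cases such as target hours too close to the ends of the record.

```python
import numpy as np

def anen_estimate(pred_long, pred_search, spread_search, ws_long, t, weights, m, k=1):
    """Analog-ensemble estimate of normalized uncertainty at target hour t.

    pred_long:     (n_vars, n_long) predictors from the single long-term run
    pred_search:   (n_vars, n_search) same predictors over the search year
    spread_search: (n_search,) across-ensemble wind speed std in the search year
    ws_long:       (n_long,) 100 m wind speed from the long-term run
    weights:       (n_vars,) predictor weights
    m:             number of analogs; k: half-width of the window in hours
    """
    pred_long = np.asarray(pred_long, dtype=float)
    pred_search = np.asarray(pred_search, dtype=float)
    spread_search = np.asarray(spread_search, dtype=float)
    weights = np.asarray(weights, dtype=float)

    sigmas = pred_search.std(axis=1)               # per-variable std over search period
    centers = np.arange(k, pred_search.shape[1] - k)
    offsets = np.arange(-k, k + 1)
    # Candidate windows, shape (n_vars, n_centers, 2k+1)
    A = pred_search[:, centers[:, None] + offsets[None, :]]
    F = pred_long[:, t - k:t + k + 1]              # target window, (n_vars, 2k+1)

    per_var = np.sqrt(((A - F[:, None, :]) ** 2).sum(axis=2))
    dist = ((weights / sigmas)[:, None] * per_var).sum(axis=0)

    # Smallest distance means highest similarity.
    best = centers[np.argsort(dist, kind="stable")[:m]]
    return spread_search[best].mean() / ws_long[t], best

# Toy data (hypothetical): one predictor, search series 0..9.
pred_search = np.arange(10.0)[None, :]
spread_search = 0.1 * np.arange(10.0)
pred_long = np.array([[3.0, 4.0, 5.0, 20.0, 21.0]])
ws_long = pred_long[0]
estimate, analogs = anen_estimate(pred_long, pred_search, spread_search,
                                  ws_long, t=1, weights=[1.0], m=3)
```

In the toy case the target window (3, 4, 5) matches the search window centered on index 4 exactly, so that hour is the closest analog and its two neighbors complete the three-member set.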

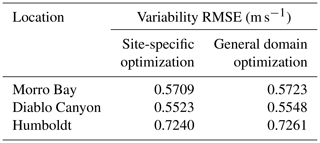

Table 3. Variability in the RMSE of two weight optimization schemes: site-specific optimization and general domain optimization.

The results from the AnEn approach are sensitive to the predictor weights, ωi, and the number of analog members, m (Junk et al., 2015; Alessandrini et al., 2019). Therefore, the AnEn approach first needs to be trained to determine the optimal values of these parameters which maximize the accuracy of the AnEn predictions. In doing so, we use RMSE between 2017 AnEn-predicted and WRF-predicted wind speed uncertainty as the score metric for the optimization process. Training AnEn at each grid cell over our large domain is a computationally challenging task (Hu et al., 2021a). Therefore, we explore whether the same number of analogs and a single combination of optimized weights can be used over the whole domain. First, we perform a site-specific weight optimization at three sites, one for each wind energy lease area in the California OCS (Fig. 1). Then, we alternatively tune a single combination of weights for all three sites, and we refer to this second approach as the general domain optimization. We compare the RMSE values from the two approaches in Table 3. At each site, the RMSE from the general domain optimization is only slightly higher (<0.5 % increase) than that from the much more expensive site-specific optimization. Therefore, we select the optimal number of analogs (m=16, notably the same as the number of WRF ensemble members) and weights resulting from the AnEn training at the three sites all together. The optimal weights are listed in Table 4.

3.1 Validation of the proposed approaches

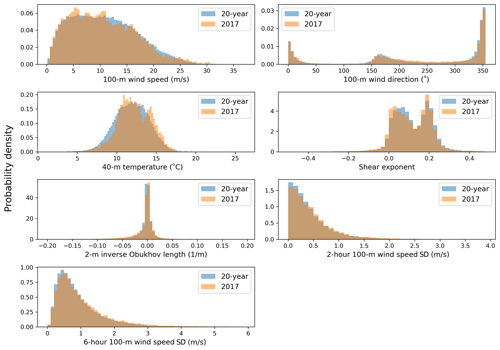

We first need to assess the accuracy and validity of our proposed approaches for the wind speed boundary condition and parametric uncertainty extrapolation. As an initial validation step, we compare the distributions of the atmospheric variables used as inputs to the machine-learning and AnEn algorithms for 2017 with those found in the full 20-year period. For both approaches to be accurate, it is essential that the considered atmospheric variables in 2017 (i.e., with which the models are trained) experience a range of variability representative of the full 20-year period (i.e., to which the models are applied).

Figure 3. Distributions of the atmospheric variables considered as inputs to the machine learning and AnEn algorithms from 2017 only and from the full 20-year period for a single site within the Humboldt wind energy lease area. Data are expressed in terms of their probability densities.

A qualitative comparison of the distributions of the seven atmospheric variables at one of the three wind energy lease areas (Fig. 3) shows that the variability found in 2017 is similar to that found in the long-term 20-year period. To confirm this quantitatively, we apply Levene's test (Levene, 1961) to assess the equality of the variances of the two samples (2017 vs. 20-year period) for each atmospheric variable. For all seven variables, the null hypothesis of homogeneity of variance cannot be rejected (p-values > 0.05), indicating that the variability found in 2017 is not significantly different from the variability in the long term. Similar results are found at the two other wind energy lease areas (figures not shown).

Having verified that the basic assumptions of the proposed approaches are supported by the data, we need to test the accuracy of their predictions.

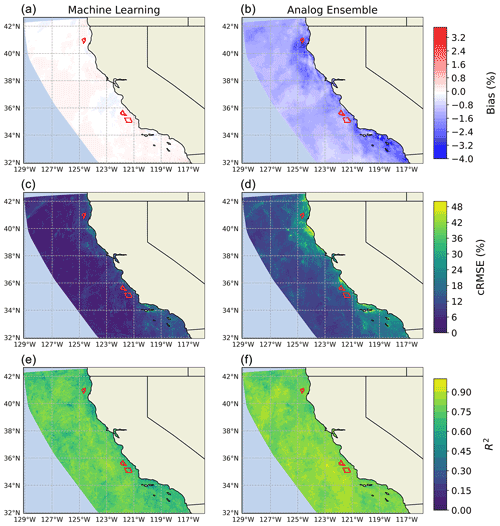

Figure 4. Maps of testing bias, cRMSE, and coefficient of determination, R2, from the GBM (a, c, e) and the AnEn approach (b, d, f). The wind energy lease areas are highlighted in red.

To do so, at each grid cell we quantify the mean bias, centered or unbiased root-mean-square error (cRMSE), and coefficient of determination, R2, between the machine-learning or AnEn predictions and the actual WRF ensemble variability. For the machine-learning approach, we calculate these error metrics over a testing set, obtained by training the learning algorithm on 80 % of the 2017 data and then testing it on the remaining 20 %. To minimize the effects of the autocorrelation in the data, we select the testing set without shuffling the data. Also, to test the algorithm on the full seasonal variability in the atmospheric variables, we create the test set by selecting a contiguous 20 % of data for each month in 2017. We compare these results with the three error metrics calculated when applying the AnEn approach at each grid cell for 2017. The maps of the various error metrics for the two approaches are shown in Fig. 4. In general, the maps show how both approaches are capable of providing accurate predictions of the WRF boundary condition and parametric uncertainty across the whole offshore domain. We find that the uncertainty predicted by the machine-learning model has a negligible bias (which is expressed as a percentage of the mean wind resource, the same as our normalized uncertainty metric) throughout the domain, whereas the uncertainty predictions from the AnEn approach are, on average, slightly lower than the WRF ensemble variability with differences of less than 3 % at the three wind energy lease areas. The machine-learning approach also provides lower error after the bias is removed, especially closer to the coast where the AnEn approach has local cRMSE values as high as 40 %. On the other hand, we see that the AnEn approach provides a slightly stronger correspondence with the WRF data, with R2>0.80 at the vast majority of the sites, whereas for the machine-learning model, R2>0.75 with slightly lower values near the coast. 
The bias from the AnEn approach is likely due to the reduced length of the search period (1 year), which might be too limited for identifying a significant number (16) of analogs. This setup constrains the ability of the AnEn to account for rare events (e.g., particularly high-wind-speed cases) when looking for similar atmospheric conditions in such a short repository. Also, when searching for the optimal number of analogs to use, there is always a trade-off between the prediction accuracy (e.g., the RMSE) and the prediction bias. For our analysis, the main goal was to maximize the prediction accuracy in alignment with the machine-learning approach, and therefore we set the RMSE as the optimization metric. During our grid search analysis to determine the optimal number of analog members, we observed that the AnEn achieves a lower bias with fewer members, which would however worsen the prediction accuracy (RMSE). Applying the bias correction proposed in Alessandrini et al. (2019), using a machine-learning similarity for analog definition (Hu et al., 2021b), or adopting a quantile mapping that uses quantiles of the analog ensemble instead of its mean (Sidel et al., 2020) would help reduce the AnEn bias at the potential expense of computational costs.
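The validation procedure above can be illustrated with a minimal sketch of the three error metrics and of a test split that takes a contiguous, unshuffled block from each month. The function names are hypothetical. Taking the last 20 % of each month is an assumption, as the text does not say which part of the month is held out, and R2 is computed here as one minus the ratio of residual to total sum of squares, which is also an assumption about the definition used.

```python
import numpy as np

def bias(pred, ref):
    """Mean of the residuals (prediction minus reference)."""
    return np.mean(np.asarray(pred, dtype=float) - np.asarray(ref, dtype=float))

def crmse(pred, ref):
    """Centered RMSE: RMSE of the residuals after removing their mean."""
    d = np.asarray(pred, dtype=float) - np.asarray(ref, dtype=float)
    return np.sqrt(np.mean((d - d.mean()) ** 2))

def r2(pred, ref):
    """Coefficient of determination, 1 - SS_res / SS_tot (assumed definition)."""
    pred = np.asarray(pred, dtype=float)
    ref = np.asarray(ref, dtype=float)
    ss_res = np.sum((ref - pred) ** 2)
    ss_tot = np.sum((ref - ref.mean()) ** 2)
    return 1.0 - ss_res / ss_tot

def monthly_contiguous_test_mask(months, test_fraction=0.2):
    """Flag the last `test_fraction` of the samples of each month as test data."""
    months = np.asarray(months)
    mask = np.zeros(months.shape, dtype=bool)
    for mth in np.unique(months):
        idx = np.flatnonzero(months == mth)
        n_test = int(round(test_fraction * idx.size))
        mask[idx[idx.size - n_test:]] = True
    return mask

# Toy check (hypothetical numbers): a prediction offset by a constant 0.5.
ref = np.array([1.0, 2.0, 3.0, 4.0])
pred = ref + 0.5
rmse = np.sqrt(np.mean((pred - ref) ** 2))
mask = monthly_contiguous_test_mask(np.repeat([1, 2], 10))
```

A constant offset shows up entirely in the bias and leaves the cRMSE at zero, which reflects the decomposition RMSE² = bias² + cRMSE² and explains why the two metrics are reported separately.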

3.2 Analysis of extrapolated wind speed boundary condition and parametric uncertainty

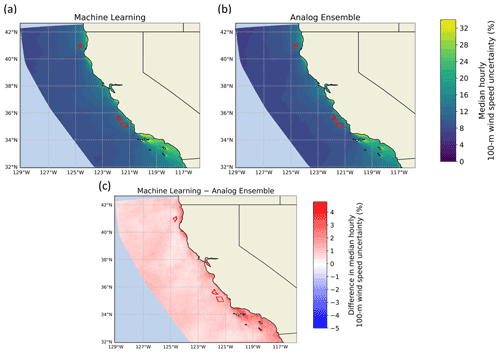

Now that the accuracy of both the proposed approaches has been assessed, we can analyze their long-term results. Figure 5 shows maps of the long-term median hourly boundary condition and parametric uncertainty for the 100 m wind speed predicted by the two proposed techniques, as well as the difference between the two.

Figure 5. Median hourly boundary condition and parametric uncertainty for the 100 m wind speed, as derived from the machine-learning approach (a), the analog ensemble (b), and the difference between the two (c).

A strong agreement between the two approaches clearly emerges, with the AnEn approach predicting slightly lower values, consistent with the mean bias shown in Fig. 4. In general, we find a larger uncertainty close to land, with values locally greater than 30 % of the mean wind speed, whereas in open waters the median hourly uncertainty is smaller than 10 % of the WRF-predicted wind speed. The difference between the median predictions from the two approaches also gets larger close to land. For the current three wind energy lease areas in the region, the machine-learning approach quantifies a long-term median hourly uncertainty between 12 % and 14 % at all three sites. On the other hand, the AnEn approach provides slightly lower values, between 10 % and 13 %, again with little variability across the three sites.

When focusing on offshore wind energy development, additional considerations are needed to understand how modeled wind speed uncertainty varies for the most relevant scenarios for energy production. When segregating data, having a long-term record allows for robust assessments of the variability among the considered classifications, which might otherwise have been much murkier when considering data from a short-term period only. Therefore, the 20-fold increase in the size of the uncertainty data set provided by our proposed approaches brings an essential advantage in this respect.

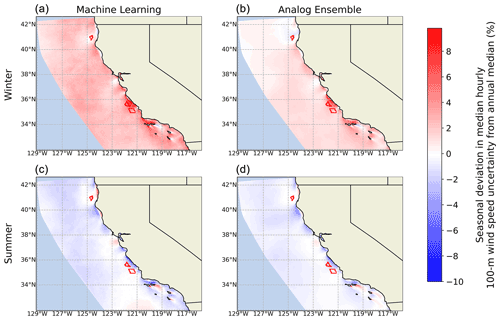

Seasonality has a primary importance for the energy market, especially in a region such as California, with a strong peak in annual demand in summer, which recently led to detrimental rolling blackouts in the region. In this fragile scenario, assessing the uncertainty in the naturally varying long-term wind speed predictions could help assess the value that offshore wind energy can deliver to the California energy market and achieve more accurate planning of the balance between supply and demand. Figure 6 compares maps of the seasonal deviation in median hourly normalized uncertainty for the 100 m wind speed in winter (December, January, and February) and summer (June, July, and August). For each season, the values shown are the difference between the median hourly uncertainty for that specific season and the overall median value (i.e., what is shown in Fig. 5).

Figure 6. Seasonal deviation in median hourly normalized uncertainty in 100 m wind speed for winter (December, January, and February) and summer (June, July, and August), as derived from the machine learning approach (a, c) and the analog ensemble (b, d).

For most of the considered domain, we find a larger sensitivity in WRF-predicted wind speed in the winter months, with the GBM showing a slightly larger seasonal deviation than the AnEn approach. At the Morro Bay and Diablo Canyon lease areas, the median winter uncertainty is between 2 % and 8 % larger than the annual median at the same locations. On the other hand, the Humboldt lease area shows a near-zero winter deviation, with the machine-learning approach predicting slightly increased winter uncertainty values and AnEn predicting slightly negative ones. We find opposite results when considering the more energy-demanding summer months. Both Morro Bay and Diablo Canyon show a lower boundary condition and parametric uncertainty in summer with a difference from their annual median values smaller than 4 %. On the other hand, negligible variability is observed at the Humboldt lease area. We note that spring and fall months displayed intermediate results when compared to summer and winter (figures not shown).
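The seasonal deviation mapped in Fig. 6 can be illustrated for a single grid point with a minimal sketch. It assumes the hourly normalized uncertainty is available as a plain list alongside the month of each sample; the function name, the `SEASONS` dictionary, and the toy values are hypothetical and are not taken from the published code.

```python
from statistics import median

# Season definitions as given in the text (winter: DJF, summer: JJA).
SEASONS = {"winter": {12, 1, 2}, "summer": {6, 7, 8}}


def seasonal_deviation(months, uncertainty):
    """Return {season: seasonal median minus overall median}.

    months      -- month number (1-12) of each hourly sample
    uncertainty -- normalized uncertainty (% of wind speed) of each sample
    """
    overall = median(uncertainty)
    deviation = {}
    for name, season_months in SEASONS.items():
        values = [u for m, u in zip(months, uncertainty) if m in season_months]
        deviation[name] = median(values) - overall
    return deviation


# Toy samples for one grid point (illustrative values only).
months = [1, 1, 2, 4, 4, 7, 7, 8, 10, 12]
uncertainty = [16.0, 15.0, 17.0, 12.0, 13.0, 9.0, 10.0, 11.0, 12.0, 18.0]
result = seasonal_deviation(months, uncertainty)
print(result)
```

A positive value marks a season in which the median uncertainty exceeds the overall median at that location, and a negative value marks the opposite. The same grouping logic would apply to the stability classes discussed later if the month sets were replaced by class labels.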

Finally, we quantify the impact of different stability regimes on the long-term wind speed uncertainty. Various approaches to classify atmospheric stability have been proposed and applied offshore (e.g., Archer et al., 2016), including the shape of the wind speed profile, the use of the Richardson number, and the use of turbulent kinetic energy. Here, we classify atmospheric stability based on the bulk Richardson number, RiB, calculated over the lowest 200 m, as done in Rybchuk et al. (2021) for the same data set:

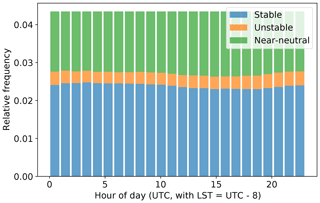

where g=9.81 m s−2 is the acceleration caused by gravity, z200=200 m, θ is potential temperature (K), and WS200 is the 200 m wind speed (m s−1). We consider stable conditions for RiB>0.025, unstable conditions for , and near-neutral conditions otherwise. Figure 7 shows a histogram of the diurnal variability in the three stability regimes at the Humboldt wind energy lease area.

Figure 7. Daily distribution of atmospheric stability at the Humboldt wind energy lease area, as determined from the bulk Richardson number calculated over the lowest 200 m from the 20-year WRF simulation.

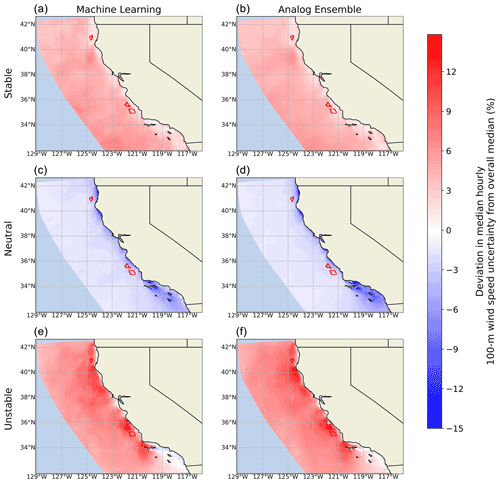
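The stability classification and the hourly counts behind a histogram like Fig. 7 can be sketched as follows. Two assumptions should be noted. First, the function uses a common textbook form of the bulk Richardson number, with the layer-mean potential temperature in the denominator, which may differ in detail from the equation used in the paper. Second, only the stable threshold (0.025) is taken from the text; the unstable threshold is a hypothetical symmetric value chosen for illustration. All function names are hypothetical.

```python
from collections import Counter

G = 9.81      # acceleration due to gravity (m s^-2), as in the text
Z200 = 200.0  # depth of the layer (m), as in the text


def bulk_richardson(theta_200, theta_sfc, ws_200):
    """Assumed form: Ri_B = g * z200 * (theta_200 - theta_sfc) / (theta_mean * WS200^2).

    Temperatures are in K and wind speed in m/s; ws_200 must be non-zero.
    """
    theta_mean = 0.5 * (theta_200 + theta_sfc)
    return G * Z200 * (theta_200 - theta_sfc) / (theta_mean * ws_200 ** 2)


def classify(ri_b, stable_min=0.025, unstable_max=-0.025):
    """stable_min comes from the text; unstable_max is an assumed value."""
    if ri_b > stable_min:
        return "stable"
    if ri_b < unstable_max:
        return "unstable"
    return "near-neutral"


def diurnal_counts(hours, classes):
    """Count how often each stability class occurs at each hour of the day."""
    counts = {}
    for hour, label in zip(hours, classes):
        counts.setdefault(hour, Counter())[label] += 1
    return counts


# Toy samples: (hour, theta at 200 m, theta near the surface, 200 m wind speed).
samples = [(0, 290.0, 288.0, 10.0), (0, 288.0, 288.0, 9.0), (12, 287.0, 289.0, 8.0)]
labels = [classify(bulk_richardson(t200, t0, ws)) for _, t200, t0, ws in samples]
histogram = diurnal_counts([s[0] for s in samples], labels)
print(labels, histogram)
```

Keeping both thresholds as keyword arguments makes it easy to substitute the values actually used in the analysis.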

We see a predominance of near-neutral and stable conditions with a very weak diurnal variability. This is consistent with the sea surface temperature being generally colder than the near-surface air (because of ocean upwelling), which causes a predominantly stable stratification. Similar conditions are found at the other two wind energy lease areas. The maps in Fig. 8 quantify how wind speed boundary condition and parametric uncertainty varies as a function of atmospheric stability. For each atmospheric stability class, we show the difference between the median hourly uncertainty for that specific stability condition and the overall median value (i.e., what is shown in Fig. 5).

Figure 8. Deviation in median hourly normalized uncertainty for the 100 m wind speed from the annual median for different atmospheric stability regimes, as derived from the machine-learning approach (a, c, e) and the AnEn approach (b, d, f).

The proposed approaches show a remarkable agreement. Neutral conditions show the lowest boundary condition and parametric uncertainty. At the three wind energy lease areas, we find uncertainty values about 2 %–4 % lower than the overall median in near-neutral conditions. On the other hand, the rare unstable cases show the largest uncertainty with deviations up to +10 % from the median at the considered wind energy lease areas. Finally, stable conditions also show positive deviations in uncertainty throughout the considered domain with differences on the order of +2 %–5 % at the wind energy lease areas.

As offshore wind energy becomes a widespread source of clean energy worldwide, an accurate, long-term characterization of the offshore wind resource is crucial, not only in terms of its mean value but also of the uncertainty associated with this estimate. In our analysis, we focused on the California Outer Continental Shelf (OCS), where significant offshore wind energy development is expected in the near future, to propose innovative techniques to temporally extrapolate hub-height wind speed boundary condition and parametric uncertainty from a short-term mesoscale numerical ensemble to a long-term single model run. First, we propose a gradient-boosting model algorithm, in which a regression model is trained over the short-term numerical ensemble to predict its variability and then applied to the long-term single model run. We compare this technique with an analog ensemble (AnEn) approach, wherein the extrapolated uncertainty for each time stamp in the long-term run is calculated by looking for similar atmospheric conditions within the short-term mesoscale numerical model ensemble. Adopting our proposed approaches for uncertainty extrapolation helps save significant computational resources as the desired long-term boundary condition and parametric uncertainty information can be derived from a much simpler setup, wherein the computationally expensive numerical ensembles are only run over a short-term period.
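The analog idea summarized above can be illustrated with a deliberately simplified sketch. It computes the normalized across-ensemble standard deviation for each short-term time stamp, then estimates the uncertainty of a long-term time stamp by averaging the values at the most similar short-term time stamps. The plain Euclidean distance, the single predictor, the population standard deviation, and all names are assumptions made for illustration; the published AnEn code uses its own similarity metric and predictor weighting, and the gradient-boosting counterpart is not shown here.

```python
from math import sqrt
from statistics import mean, pstdev


def normalized_spread(member_speeds):
    """Across-ensemble standard deviation divided by the ensemble mean."""
    return pstdev(member_speeds) / mean(member_speeds)


def analog_uncertainty(query, train_predictors, train_uncertainty, k=3):
    """Average the uncertainty of the k training times most similar to `query`."""
    distances = [
        (sqrt(sum((q - p) ** 2 for q, p in zip(query, predictors))), i)
        for i, predictors in enumerate(train_predictors)
    ]
    nearest = sorted(distances)[:k]
    return mean(train_uncertainty[i] for _, i in nearest)


# Short-term period: wind speed (m/s) from three ensemble members at four times.
train_members = [[8.0, 9.0, 10.0], [4.0, 5.0, 6.0], [12.0, 12.0, 12.0], [9.0, 10.0, 11.0]]
train_uncertainty = [normalized_spread(m) for m in train_members]

# Predictor available in both periods (here, only the single-run wind speed).
train_predictors = [(9.0,), (5.0,), (12.0,), (10.0,)]

# One time stamp from the long-term single run.
estimate = analog_uncertainty((9.5,), train_predictors, train_uncertainty, k=2)
print(round(estimate, 4))
```

In practice, predictors with different units would need to be standardized or weighted before distances are computed; otherwise the variable with the largest numerical range dominates the search.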

We find that both our proposed approaches agree well with the mesoscale model ensemble variability, thus providing a robust representation of the long-term wind speed boundary condition and parametric uncertainty. While AnEn has a slightly larger R2 coefficient with the mesoscale model across-ensemble data, we find that the gradient-boosting model has lower bias and centered root-mean-square error. However, we expect the AnEn performance to improve if either the bias correction for rare events proposed in Alessandrini et al. (2019) or the quantile mapping approach presented in Sidel et al. (2020) is incorporated in the analysis. In general, we find that the offshore wind speed boundary condition and parametric uncertainty increases near the coast. While the accuracy of the AnEn approach significantly degrades near the coast, the larger values in hub-height wind speed boundary condition and parametric uncertainty near the coast were also seen from the variability among the WRF ensembles (Optis et al., 2020) and attributed to diverging wind profiles associated with the choice of PBL scheme under strongly stable atmospheric conditions near the coastline. We also find that uncertainty is larger in stable and unstable conditions and lower in near-neutral cases. On average, the hourly uncertainty at the current three wind energy lease areas in the California OCS is between 10 % and 14 % of their mean hub-height wind speed. Summer months also experience lower uncertainty, which will benefit energy planning in a season whose strong demand has, in the past, led to detrimental rolling blackouts.
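For readers who want to reproduce this kind of comparison, the three skill metrics named above can be written in a few lines. This is a sketch under stated assumptions: R2 is taken here as the squared Pearson correlation (the coefficient of determination is another common definition), and the function names and toy values are hypothetical.

```python
from math import sqrt
from statistics import mean


def bias(pred, ref):
    """Mean difference between predicted and reference uncertainty."""
    return mean(p - r for p, r in zip(pred, ref))


def centered_rmse(pred, ref):
    """Root-mean-square error after removing the mean bias."""
    b = bias(pred, ref)
    return sqrt(mean((p - r - b) ** 2 for p, r in zip(pred, ref)))


def r_squared(pred, ref):
    """Squared Pearson correlation (assumed definition of R2)."""
    mp, mr = mean(pred), mean(ref)
    cov = sum((p - mp) * (r - mr) for p, r in zip(pred, ref))
    var_p = sum((p - mp) ** 2 for p in pred)
    var_r = sum((r - mr) ** 2 for r in ref)
    return cov ** 2 / (var_p * var_r)


# Toy case: predictions offset from the reference by a constant 1 %.
ref = [10.0, 12.0, 14.0, 16.0]
pred = [11.0, 13.0, 15.0, 17.0]
print(bias(pred, ref), centered_rmse(pred, ref), r_squared(pred, ref))
```

The toy case shows why the metrics are reported together: a constant offset leaves the correlation perfect and the centered error at zero, so only the bias reveals it.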

Clearly, the magnitude of the boundary condition and parametric uncertainty component that we quantified in our analysis is strictly connected to the (limited) number of choices sampled within the considered model setups. Given this underdispersive behavior of the numerical weather prediction ensembles (Buizza et al., 2008; Alessandrini et al., 2013), we expect the uncertainty quantified from our ensemble to be lower than the model error with respect to measurements. Still, we note that the same caveat would apply if the uncertainty were directly quantified by running a long-term numerical ensemble, and thus the computational advantages of our proposed approaches still hold. Moreover, we emphasize how the choices made to build our numerical ensemble represent either state-of-the-art resources or the most widely accepted choices within the wind energy modeling community. Also, a quantification and temporal extrapolation of the full uncertainty in modeled wind speed would require concurrent observations (and the knowledge of the inherent uncertainty associated with them) to be computed. Given all these considerations, many opportunities exist to further expand our work. While floating lidars with publicly available data have only been deployed in the California OCS very recently (Gorton, 2020), a few lidars have been deployed off the US eastern seaboard for more than 1 year. Observations from long-term offshore meteorological towers are also available in the North Sea in Europe. Our analysis could be expanded by first comparing the model-related boundary condition and parametric uncertainty with the full modeled wind speed uncertainty calculated by comparing modeled data and observations. Then, our proposed approaches could be expanded to temporally extrapolate the full modeled wind speed uncertainty, quantified, for example, in terms of the variability in the residuals between modeled and observed wind.
Testing additional input features to the algorithms could also help further improve the accuracy of the proposed extrapolation. Also, the site specificity of the proposed approaches would need to be investigated to understand if a learning model trained at a site, e.g., one ocean basin, can still provide accurate predictions when applied at a different location. Analog-based techniques could also be applied onshore, where the impact of more complex topography would likely need to be taken into account and incorporated in the algorithms. Finally, future work could focus on how interannual wind speed variability caused by climate change or long-term climatic and atmospheric oscillations (e.g., the North Atlantic oscillation) compares with the quantified uncertainty in modeled wind speed and how that should be taken into account for wind energy development purposes. To facilitate this extension, we have included in the Supplement a map of the interannual variability in 100 m wind speed quantified from the 20-year WRF run.

Data from the WRF simulations over the California OCS are available at https://doi.org/10.25984/1821404 (Bodini et al., 2021a). The code for the considered machine-learning model is available at https://github.com/nbodini/ML_UQ_offshore (last access: 29 October 2021) and https://doi.org/10.5281/zenodo.5618470 (Bodini, 2021b). The code for the AnEn approach is available at https://weiming-hu.github.io/AnalogsEnsemble (Hu, 2021).

The supplement related to this article is available online at: https://doi.org/10.5194/wes-6-1363-2021-supplement.

NB and MO envisioned the analysis. MO ran the numerical simulation. NB performed the machine-learning analysis in close consultation with MO. WH performed the AnEn analysis with the guidance of GC and SA. NB wrote the majority of the manuscript with significant contributions and feedback from all coauthors.

The contact author has declared that neither they nor their co-authors have any competing interests.

Publisher’s note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This research was performed using computational resources sponsored by the U.S. Department of Energy's Office of Energy Efficiency and Renewable Energy and located at the National Renewable Energy Laboratory. The authors thank Michael Rossol for his help in performing some of the computations using the National Renewable Energy Laboratory's High-Performance Computing Center.

This paper was edited by Joachim Peinke and reviewed by two anonymous referees.

Alessandrini, S., Sperati, S., and Pinson, P.: A comparison between the ECMWF and COSMO Ensemble Prediction Systems applied to short-term wind power forecasting on real data, Appl. Energ., 107, 271–280, https://doi.org/10.1016/j.apenergy.2013.02.041, 2013.

Alessandrini, S., Delle Monache, L., Sperati, S., and Cervone, G.: An analog ensemble for short-term probabilistic solar power forecast, Appl. Energ., 157, 95–110, https://doi.org/10.1016/j.apenergy.2015.08.011, 2015a.

Alessandrini, S., Delle Monache, L., Sperati, S., and Nissen, J.: A novel application of an analog ensemble for short-term wind power forecasting, Renew. Energ., 76, 768–781, https://doi.org/10.1016/j.renene.2014.11.061, 2015b.

Alessandrini, S., Sperati, S., and Delle Monache, L.: Improving the analog ensemble wind speed forecasts for rare events, Mon. Weather Rev., 147, 2677–2692, https://doi.org/10.1175/MWR-D-19-0006.1, 2019.

Archer, C. L., Colle, B. A., Delle Monache, L., Dvorak, M. J., Lundquist, J., Bailey, B. H., Beaucage, P., Churchfield, M. J., Fitch, A. C., Kosovic, B., Lee, S., Moriarty, P. J., Simao, H., Stevens, R. J. A. M., Veron, D., and Zack, J.: Meteorology for coastal/offshore wind energy in the United States: Recommendations and research needs for the next 10 years, B. Am. Meteorol. Soc., 95, 515–519, https://doi.org/10.1175/BAMS-D-13-00108.1, 2014.

Archer, C. L., Colle, B. A., Veron, D. L., Veron, F., and Sienkiewicz, M. J.: On the predominance of unstable atmospheric conditions in the marine boundary layer offshore of the US northeastern coast, J. Geophys. Res.-Atmos., 121, 8869–8885, https://doi.org/10.1002/2016JD024896, 2016.

Arcos Jiménez, A., Gómez Muñoz, C., and García Márquez, F.: Machine learning for wind turbine blades maintenance management, Energies, 11, 13, https://doi.org/10.3390/en11010013, 2018.

Arrillaga, J. A., Yagüe, C., Sastre, M., and Román-Cascón, C.: A characterisation of sea-breeze events in the eastern Cantabrian coast (Spain) from observational data and WRF simulations, Atmos. Res., 181, 265–280, https://doi.org/10.1016/j.atmosres.2016.06.021, 2016.

Bodini, N., Optis, M., Rossol, M., and Rybchuk, A.: US Offshore Wind Resource data for 2000–2019, OpenEI [data set], https://doi.org/10.25984/1821404, 2021a.

Bodini, N.: nbodini/ML_UQ_offshore: ML_UQ_offshore (Version v1), Zenodo [code], https://doi.org/10.5281/zenodo.5618470, 2021b.

Bodini, N. and Optis, M.: The importance of round-robin validation when assessing machine-learning-based vertical extrapolation of wind speeds, Wind Energ. Sci., 5, 489–501, https://doi.org/10.5194/wes-5-489-2020, 2020.

Bodini, N., Lundquist, J. K., and Optis, M.: Can machine learning improve the model representation of turbulent kinetic energy dissipation rate in the boundary layer for complex terrain?, Geosci. Model Dev., 13, 4271–4285, https://doi.org/10.5194/gmd-13-4271-2020, 2020.

Brandily, T.: Levelized Cost of Electricity 1H 2020: Renewable Chase Plunging Commodity Prices, Tech. rep., Bloomberg New Energy Finance Limited, available at: https://www.bnef.com/core/lcoe?tab=Forecast LCOE (last access: 27 October 2021), 2020.

Brower, M.: Wind Resource Assessment: A Practical Guide to Developing a Wind Project, John Wiley & Sons, Hoboken, New Jersey, https://doi.org/10.1002/9781118249864, 2012.

Buizza, R., Leutbecher, M., and Isaksen, L.: Potential use of an ensemble of analyses in the ECMWF Ensemble Prediction System, Q. J. Roy. Meteor. Soc., 134, 2051–2066, https://doi.org/10.1002/qj.346, 2008.

Bureau of Ocean Energy Management: Outer Continental Shelf Renewable Energy Leases Map Book, Tech. rep., Bureau of Ocean Energy Management, available at: https://www.boem.gov/sites/default/files/renewable-energy-program/Mapping-and-Data/Renewable_Energy_Leases_Map_Book_March_2019.pdf (last access: 27 October 2021), 2018.

Carbon Trust Offshore Wind Accelerator: Carbon Trust Offshore Wind Accelerator Roadmap for the Commercial Acceptance of Floating LiDAR Technology, Tech. rep., Carbon Trust, available at: https://prod-drupal-files.storage.googleapis.com/documents/resource/public/Roadmap for Commercial Acceptance of Floating LiDAR REPORT.pdf (last access: 27 October 2021), 2018.

Carvalho, D., Rocha, A., Gómez-Gesteira, M., and Santos, C. S.: Sensitivity of the WRF model wind simulation and wind energy production estimates to planetary boundary layer parameterizations for onshore and offshore areas in the Iberian Peninsula, Appl. Energ., 135, 234–246, https://doi.org/10.1016/j.apenergy.2014.08.082, 2014a.

Carvalho, D., Rocha, A., Gómez-Gesteira, M., and Silva Santos, C.: WRF wind simulation and wind energy production estimates forced by different reanalyses: Comparison with observed data for Portugal, Appl. Energ., 117, 116–126, https://doi.org/10.1016/j.apenergy.2013.12.001, 2014b.

Cervone, G., Clemente-Harding, L., Alessandrini, S., and Delle Monache, L.: Short-term photovoltaic power forecasting using Artificial Neural Networks and an Analog Ensemble, Renew. Energ., 108, 274–286, https://doi.org/10.1016/j.renene.2017.02.052, 2017.

Clifton, A., Kilcher, L., Lundquist, J., and Fleming, P.: Using machine learning to predict wind turbine power output, Environ. Res. Lett., 8, 024009, https://doi.org/10.1088/1748-9326/8/2/024009, 2013.

Deepwater Wind: Block Island Wind Farm, available at: https://dwwind.com/project/block-island-wind-farm (last access: 27 October 2021), 2016.

Delle Monache, L., Eckel, F. A., Rife, D. L., Nagarajan, B., and Searight, K.: Probabilistic weather prediction with an analog ensemble, Mon. Weather Rev., 141, 3498–3516, https://doi.org/10.1175/MWR-D-12-00281.1, 2013.

Donlon, C. J., Martin, M., Stark, J., Roberts-Jones, J., Fiedler, E., and Wimmer, W.: The operational sea surface temperature and sea ice analysis (OSTIA) system, Remote Sens. Environ., 116, 140–158, https://doi.org/10.1016/j.rse.2010.10.017, 2012.

Fabre, S., Stickland, M., Scanlon, T., Oldroyd, A., Kindler, D., and Quail, F.: Measurement and simulation of the flow field around the FINO 3 triangular lattice meteorological mast, J. Wind Eng. Ind. Aerod., 130, 99–107, https://doi.org/10.1016/j.jweia.2014.04.002, 2014.

Friedman, J. H.: Stochastic gradient boosting, Comput. Stat. Data An., 38, 367–378, https://doi.org/10.1016/S0167-9473(01)00065-2, 2002.

Gelaro, R., McCarty, W., Suárez, M. J., Todling, R., Molod, A., Takacs, L., Randles, C. A., Darmenov, A., Bosilovich, M. G., Reichle, R., Wargan, K., Coy, L., Cullather, R., Draper, C., Akella, S., Buchard, V., Conaty, A., da Silva, A. M., Gu, W., Kim, G.-K., Koster, R., Lucchesi, R., Merkova, D., Nielsen, J. E., Partyka, G., Pawson, S., Putman, W., Rienecker, M., Schubert, S. D., Sienkiewicz, M., and Zhao, B.: The modern-era retrospective analysis for research and applications, version 2 (MERRA-2), J. Climate, 30, 5419–5454, https://doi.org/10.1175/JCLI-D-16-0758.1, 2017.

Gentine, P., Pritchard, M., Rasp, S., Reinaudi, G., and Yacalis, G.: Could machine learning break the convection parameterization deadlock?, Geophys. Res. Lett., 45, 5742–5751, https://doi.org/10.1029/2018GL078202, 2018.

Gorton, A.: Atmosphere to Electrons (A2e), buoy/lidar.z06.00, Maintained by A2e Data Archive and Portal for U.S. Department of Energy, Office of Energy Efficiency and Renewable Energy, Tech. rep., Pacific Northwest National Lab (PNNL), Richland, WA, United States, https://doi.org/10.21947/1669352, 2020.

Hahmann, A. N., Vincent, C. L., Peña, A., Lange, J., and Hasager, C. B.: Wind climate estimation using WRF model output: method and model sensitivities over the sea, Int. J. Climatol., 35, 3422–3439, https://doi.org/10.1002/joc.4217, 2015.

Hahmann, A. N., Sīle, T., Witha, B., Davis, N. N., Dörenkämper, M., Ezber, Y., García-Bustamante, E., González-Rouco, J. F., Navarro, J., Olsen, B. T., and Söderberg, S.: The making of the New European Wind Atlas – Part 1: Model sensitivity, Geosci. Model Dev., 13, 5053–5078, https://doi.org/10.5194/gmd-13-5053-2020, 2020.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horányi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Hólm, E., Janisková, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thépaut, J.-N.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020.

Holstag, E.: Improved Bankability, The Ecofys position on Lidar Use, Ecofys report, 2013.

Hong, S.-Y., Noh, Y., and Dudhia, J.: A new vertical diffusion package with an explicit treatment of entrainment processes, Mon. Weather Rev., 134, 2318–2341, https://doi.org/10.1175/MWR3199.1, 2006.

Hu, W.: Parallel Analog Ensemble, GitHub [code], available at: https://weiming-hu.github.io/AnalogsEnsemble (last access: 27 October 2021), 2021.

Hu, W., Cervone, G., Clemente-Harding, L., and Calovi, M.: Parallel Analog Ensemble – The Power Of Weather Analogs, NCAR Technical Notes NCAR/TN-564+ PROC, p. 1, https://doi.org/10.5065/P2JJ-9878, 2021a.

Hu, W., Cervone, G., Young, G., and Monache, L. D.: Weather Analogs with a Machine Learning Similarity Metric for Renewable Resource Forecasting, arXiv [preprint], arXiv:2103.04530, 9 March 2021b.

JCGM 100:2008: Evaluation of measurement data – Guide to the expression of uncertainty in measurement, Joint Committee for Guides in Metrology, 2008.

Johnson, C., White, E., and Jones, S.: Summary of Actual vs. Predicted Wind Farm Performance: Recap of WINDPOWER 2008, in: AWEA Wind Resource and Project Energy Assessment Workshop, available at: http://www.enecafe.com/interdomain/idlidar/paper/2008/AWEA workshop 2008 Johnson_Clint.pdf (last access: 27 October 2021), 2008.

Junk, C., Delle Monache, L., Alessandrini, S., Cervone, G., and Von Bremen, L.: Predictor-weighting strategies for probabilistic wind power forecasting with an analog ensemble, Meteorol. Z., 24, 361–379, https://doi.org/10.1127/metz/2015/0659, 2015.

Kirincich, A.: A Metocean Reference Station for offshore wind Energy research in the US, J. Phys. Conf. Ser., 1452, 012028, https://doi.org/10.1088/1742-6596/1452/1/012028, 2020.

Levene, H.: Robust tests for equality of variances, Contributions to probability and statistics, Essays in honor of Harold Hotelling, 279–292, https://doi.org/10.2307/2285659, Stanford University Press, Palo Alto, 1961.

Mattar, C. and Borvarán, D.: Offshore wind power simulation by using WRF in the central coast of Chile, Renew. Energ., 94, 22–31, https://doi.org/10.1016/j.renene.2016.03.005, 2016.

Murphy, J. M., Sexton, D. M., Barnett, D. N., Jones, G. S., Webb, M. J., Collins, M., and Stainforth, D. A.: Quantification of modelling uncertainties in a large ensemble of climate change simulations, Nature, 430, 768–772, https://doi.org/10.1038/nature02771, 2004.

Musial, W., Heimiller, D., Beiter, P., Scott, G., and Draxl, C.: Offshore wind energy resource assessment for the United States, Tech. rep., National Renewable Energy Laboratory (NREL), Golden, CO, United States, available at: https://www.nrel.gov/docs/fy16osti/66599.pdf (last access: 27 October 2021), 2016.

Nakanishi, M. and Niino, H.: An improved Mellor–Yamada level-3 model with condensation physics: Its design and verification, Bound.-Lay. Meteorol., 112, 1–31, https://doi.org/10.1023/B:BOUN.0000020164.04146.98, 2004.

Neumann, T., Nolopp, K., and Herklotz, K.: First operating experience with the FINO1 research platform in the North Sea; Erste Betriebserfahrungen mit der FINO1-Forschungsplattform in der Nordsee, DEWI-Magazin, Germany, 2004.

Niu, G.-Y., Yang, Z.-L., Mitchell, K. E., Chen, F., Ek, M. B., Barlage, M., Kumar, A., Manning, K., Niyogi, D., Rosero, E., Tewari, M., and Xia, Y.: The community Noah land surface model with multiparameterization options (Noah-MP): 1. Model description and evaluation with local-scale measurements, J. Geophys. Res.-Atmos., 116, D12109, https://doi.org/10.1029/2010JD015139, 2011.

OceanTech Services/DNV GL: NYSERDA Floating Lidar Buoy Data, OceanTech Services/DNV GL [data set], available at: https://oswbuoysny.resourcepanorama.dnvgl.com (last access: 27 October 2021), 2020.

Olsen, B. T., Hahmann, A. N., Sempreviva, A. M., Badger, J., and Jørgensen, H. E.: An intercomparison of mesoscale models at simple sites for wind energy applications, Wind Energ. Sci., 2, 211–228, https://doi.org/10.5194/wes-2-211-2017, 2017.

Optis, M. and Perr-Sauer, J.: The importance of atmospheric turbulence and stability in machine-learning models of wind farm power production, Renew. Sustain. Energ. Rev., 112, 27–41, https://doi.org/10.1016/j.rser.2019.05.031, 2019.

Optis, M., Rybchuk, O., Bodini, N., Rossol, M., and Musial, W.: 2020 Offshore Wind Resource Assessment for the California Pacific Outer Continental Shelf, Tech. rep., National Renewable Energy Laboratory (NREL), Golden, CO, United States, available at: https://www.nrel.gov/docs/fy21osti/77642.pdf (last access: 27 October 2021), 2020.

Optis, M., Bodini, N., Debnath, M., and Doubrawa, P.: New methods to improve the vertical extrapolation of near-surface offshore wind speeds, Wind Energ. Sci., 6, 935–948, https://doi.org/10.5194/wes-6-935-2021, 2021a.

Optis, M., Kumler, A., Brodie, J., and Miles, T.: Quantifying sensitivity in numerical weather prediction-modeled offshore wind speeds through an ensemble modeling approach, Wind Energ., 24, 957–973, https://doi.org/10.1002/we.2611, 2021b.

Papanastasiou, D., Melas, D., and Lissaridis, I.: Study of wind field under sea breeze conditions; an application of WRF model, Atmos. Res., 98, 102–117, https://doi.org/10.1016/j.atmosres.2010.06.005, 2010.

Parker, W. S.: Ensemble modeling, uncertainty and robust predictions, WIRES Clim. Change, 4, 213–223, https://doi.org/10.1002/wcc.220, 2013.

Peña, A., Floors, R., and Gryning, S.-E.: The Høvsøre tall wind-profile experiment: a description of wind profile observations in the atmospheric boundary layer, Bound.-Lay. Meteorol., 150, 69–89, https://doi.org/10.1007/s10546-013-9856-4, 2014.

Ruiz, J. J., Saulo, C., and Nogués-Paegle, J.: WRF Model Sensitivity to Choice of Parameterization over South America: Validation against Surface Variables, Mon. Weather Rev., 138, 3342–3355, https://doi.org/10.1175/2010MWR3358.1, 2010.

Rybchuk, A., Optis, M., Lundquist, J. K., Rossol, M., and Musial, W.: A Twenty-Year Analysis of Winds in California for Offshore Wind Energy Production Using WRF v4.1.2, Geosci. Model Dev. Discuss. [preprint], https://doi.org/10.5194/gmd-2021-50, in review, 2021.

Salvação, N. and Soares, C. G.: Wind resource assessment offshore the Atlantic Iberian coast with the WRF model, Energy, 145, 276–287, https://doi.org/10.1016/j.energy.2017.12.101, 2018.

Sidel, A., Hu, W., Cervone, G., and Calovi, M.: Heat Wave Identification Using an Operational Weather Model and Analog Ensemble, in: 100th American Meteorological Society Annual Meeting, available at: https://ams.confex.com/ams/2020Annual/meetingapp.cgi/Paper/371727 (last access: 27 October 2021), 2020.

Siuta, D., West, G., and Stull, R.: WRF Hub-Height Wind Forecast Sensitivity to PBL Scheme, Grid Length, and Initial Condition Choice in Complex Terrain, Weather Forecast., 32, 493–509, https://doi.org/10.1175/WAF-D-16-0120.1, 2017.

Skamarock, W. C., Klemp, J. B., Dudhia, J., Gill, D. O., Barker, D. M., Duda, M. G., Huang, X.-Y., Wang, W., and Powers, J. G.: A Description of the Advanced Research WRF Version 3, Tech. Rep. NCAR/TN-475+STR, Mesoscale and Microscale Meteorology Division, National Center for Atmospheric Research, Boulder, Colorado, USA, https://doi.org/10.5065/D68S4MVH, 2008.

Steele, C. J., Dorling, S. R., von Glasow, R., and Bacon, J.: Idealized WRF model sensitivity simulations of sea breeze types and their effects on offshore windfields, Atmos. Chem. Phys., 13, 443–461, https://doi.org/10.5194/acp-13-443-2013, 2013.

Stiesdal, H.: Midt i en disruptionstid, Ingeniøren, available at: https://ing.dk/blog/midt-disruptionstid-190449 (last access: 27 October 2021), 2016.

Truepower, A.: AWS Truepower Loss and Uncertainty Methods, Albany, NY, available at: https://www.awstruepower.com/assets/AWS-Truepower-Loss-and-Uncertainty-Memorandum-5-Jun-2014.pdf (last access: 27 October 2021), 2014.

Ulazia, A., Saenz, J., and Ibarra-Berastegui, G.: Sensitivity to the use of 3DVAR data assimilation in a mesoscale model for estimating offshore wind energy potential. A case study of the Iberian northern coastline, Appl. Energ., 180, 617–627, https://doi.org/10.1016/j.apenergy.2016.08.033, 2016.

van Hoof, J.: Unlocking Europe's offshore wind potential, Tech. rep., PricewaterhouseCoopers B.V., available at: https://www.pwc.nl/nl/assets/documents/pwc-unlocking-europes-offshore-wind-potential.pdf (last access: 27 October 2021), 2017.

Vanvyve, E., Delle Monache, L., Monaghan, A. J., and Pinto, J. O.: Wind resource estimates with an analog ensemble approach, Renew. Energ., 74, 761–773, https://doi.org/10.1016/j.renene.2014.08.060, 2015.

White, E.: Continuing Work on Improving Plant Performance Estimates, in: AWEA Wind Resource and Project Energy Assessment Workshop, 2008.

Xingjian, S., Chen, Z., Wang, H., Yeung, D.-Y., Wong, W.-K., and Woo, W.-C.: Convolutional LSTM network: A machine learning approach for precipitation nowcasting, in: Advances in Neural Information Processing Systems, 802–810, 2015.

Zhu, Y.: Ensemble forecast: A new approach to uncertainty and predictability, Adv. Atmos. Sci., 22, 781–788, https://doi.org/10.1007/BF02918678, 2005.

Sine and cosine are used to preserve the cyclical nature of this feature. Both are needed because each value of sine only (or cosine only) is linked to two different values of the cyclical feature.
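The point of this note can be checked numerically with a short sketch. It assumes, for illustration only, that the cyclical feature is the hour of day with a period of 24; the function name is hypothetical.

```python
from math import sin, cos, pi


def encode_cyclical(value, period):
    """Map a cyclical value (e.g., hour of day) to a (sine, cosine) pair."""
    angle = 2 * pi * value / period
    return sin(angle), cos(angle)


# Hours 3 and 9 share the same sine, so the sine alone cannot tell them apart.
sin_3, cos_3 = encode_cyclical(3, 24)
sin_9, cos_9 = encode_cyclical(9, 24)
print(sin_3, sin_9)  # equal up to floating-point rounding
print(cos_3, cos_9)  # opposite signs

# Hours 23 and 0 are adjacent in time and remain close in the encoded space.
sin_23, cos_23 = encode_cyclical(23, 24)
sin_0, cos_0 = encode_cyclical(0, 24)
gap = ((sin_23 - sin_0) ** 2 + (cos_23 - cos_0) ** 2) ** 0.5
print(gap)
```

With the raw hour as a feature, 23 and 0 would be 23 units apart even though they are consecutive; the paired encoding removes that artificial jump while keeping every hour uniquely identifiable.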