the Creative Commons Attribution 4.0 License.

Offshore wind energy forecasting sensitivity to sea surface temperature input in the Mid-Atlantic

Stephanie Redfern

Mike Optis

Caroline Draxl

As offshore wind farm development expands, accurate wind resource forecasting over the ocean is needed. One important yet relatively unexplored aspect of offshore wind resource assessment is the role of sea surface temperature (SST). Models are generally forced with reanalysis data sets, which employ daily SST products. Compared with observations, significant variations in SSTs that occur on finer timescales are often not captured. Consequently, shorter-lived events such as sea breezes and low-level jets (among others), which are influenced by SSTs, may not be correctly represented in model results. The use of hourly SST products may improve the forecasting of these events. In this study, we examine the sensitivity of model output from the Weather Research and Forecasting model (WRF) 4.2.1 to different SST products. We first evaluate three different data sets: the Multiscale Ultrahigh Resolution (MUR25) SST analysis, a daily, 0.25∘ × 0.25∘ resolution product; the Operational Sea Surface Temperature and Ice Analysis (OSTIA), a daily, 0.054∘ × 0.054∘ resolution product; and SSTs from the Geostationary Operational Environmental Satellite 16 (GOES-16), an hourly, 0.02∘ × 0.02∘ resolution product. GOES-16 is not processed at the same level as OSTIA and MUR25; therefore, the product requires gap-filling using an interpolation method to create a complete map with no missing data points. OSTIA and GOES-16 SSTs validate markedly better against buoy observations than MUR25, so these two products are selected for use with model simulations, while MUR25 is at this point removed from consideration. We run the model for June and July of 2020 and find that for this time period, in the Mid-Atlantic, although OSTIA SSTs overall validate better against in situ observations taken via a buoy array in the area, the two products result in comparable hub-height (140 m) wind characterization performance on monthly timescales. 
Additionally, during hours-long flagged events (< 30 h each) that show statistically significant wind speed deviations between the two simulations, both simulations once again demonstrate similar validation performance (differences in bias, earth mover's distance, correlation, and root mean square error on the order of 10⁻¹ or less), with GOES-16 winds validating nominally better than OSTIA winds. With a more refined GOES-16 product, which has been not only gap-filled but also assimilated with in situ SST measurements in the region, it is likely that hub-height winds characterized by GOES-16-informed simulations would definitively validate better than those informed by OSTIA SSTs.

This work was authored in part by the National Renewable Energy Laboratory, operated by Alliance for Sustainable Energy, LLC, for the U.S. Department of Energy (DOE) under contract no. DE-AC36-08GO28308. Funding provided by the U.S. Department of Energy Office of Energy Efficiency and Renewable Energy Wind Energy Technologies Office and by the National Offshore Wind Research and Development Consortium under agreement no. CRD-19-16351. The views expressed in the article do not necessarily represent the views of the DOE or the U.S. Government. The U.S. Government retains and the publisher, by accepting the article for publication, acknowledges that the U.S. Government retains a nonexclusive, paid-up, irrevocable, worldwide license to publish or reproduce the published form of this work, or allow others to do so, for U.S. Government purposes.

The United States Atlantic coast is a development site for upcoming offshore wind projects. There are 15 leasing areas located throughout the Atlantic Outer Continental Shelf, where a number of offshore wind farms are planned to be developed (Bureau of Ocean Energy Management, 2018). Therefore, characterizing offshore boundary layer winds in the region has risen in importance. Accurate forecasting will provide developers with a better understanding of local wind patterns, which can inform wind farm planning and layout decisions (Banta et al., 2018). Additionally, improved weather prediction will allow for real-time adjustments of turbine operation to increase their operating efficiency and protect them against unnecessary wear and tear (Gutierrez et al., 2016, 2017; Debnath et al., 2021).

The Mid-Atlantic Bight (MAB) is an offshore cold pool region spanning the eastern United States coast from North Carolina up through Cape Cod, Massachusetts, and it overlies the offshore wind leasing areas. The cold pool forms during the summer months, when the ocean becomes strongly stratified and the thermocline traps colder water near the ocean floor. During the transition to winter, as sea surface temperatures (SSTs) drop, the stratification weakens and the cold pool breaks down. Thus, the cold pool generally persists from the spring through the fall. Southerly winds that drive surface currents offshore will result in coastal upwelling of this colder water, and, at times, strong winds associated with storm development can mix the cold pool upward, cooling the surface and influencing near-surface temperatures and winds (Colle and Novak, 2010; Chen et al., 2018; Murphy et al., 2021).

Accurate representation of the MAB in forecasting models is important because SSTs are closely tied to offshore winds. Horizontal temperature gradients between land and the ocean, as well as vertical temperature gradients over the ocean – which can form, for example, when SSTs are anomalously cold, as with the MAB – help define offshore airflow. In particular, variations in temperature can lead to or impact short-lived offshore events occurring on hourly timescales, such as sea breezes and low-level jets (LLJs). Sea breezes are driven by the land–sea temperature difference, which, if strong enough (around 5 ∘C or greater), can generate a circulation between the water and the land (Stull, 2015). With a relatively colder ocean, as during summer months, this leads to a near-ground breeze blowing landward, with a weak recirculation toward the ocean aloft (Miller et al., 2003; Lombardo et al., 2018). Similarly, the near-surface horizontal and air–sea temperature differences dictate the strength of stratification over the ocean. Studies have found a robust link between atmospheric stability and LLJ development, so accurately representing SSTs is key to modeling near-surface stability and, accordingly, LLJs (Gerber et al., 1989; Källstrand, 1998; Kikuchi et al., 2020; Debnath et al., 2021). A Weather Research and Forecasting model (WRF) analysis of the region conducted by Aird et al. (2022) found that in the MAB leasing areas specifically LLJs occurred in 12 % of the hours in June 2010–2011. Both LLJs and sea breezes can affect individual wind turbine and whole farm operation, so forecasting them correctly can improve power output and turbine reliability (Nunalee and Basu, 2014; Pichugina et al., 2017; Murphy et al., 2020; Xia et al., 2021).

Typical climate and weather model initialization and forcing inputs are reanalysis products, such as ERA5 and MERRA-2, which are global data sets that assimilate model output with observations to create a comprehensive picture of climate at each time step considered (Gelaro et al., 2017; Hersbach et al., 2020). These data sets primarily include global SST products that are produced at lower temporal and spatial resolutions than what can be available via regional, geostationary satellites. These coarser-resolution data sets, therefore, do not capture observed hourly and, in many cases, diurnal fluctuations in SSTs, which may influence their ability to properly force sea breezes and LLJs. Some preliminary comparisons between weather simulations, forced with different SST products, indicate that this particular input can have a significant impact on modeled offshore wind speeds (Byun et al., 2007; Chen et al., 2011; Dragaud et al., 2019; Kikuchi et al., 2020).

Few studies have examined the impact of finer-temporal-resolution SST products specifically on wind forecasting, and to the authors' knowledge, none so far have focused on the Mid-Atlantic. There have been studies looking at numerical weather prediction model (NWP) sensitivity to SST, but they have considered other regions or different, often coarser-spatial- and coarser-temporal-resolution products (Chen et al., 2011; Park et al., 2011; Shimada et al., 2015; Dragaud et al., 2019; Kikuchi et al., 2020; Li et al., 2021). In this article, we explore the effects of forcing the Weather Research and Forecasting model (WRF), an NWP used for research and operational weather forecasting, with different SST data sets characterized by different spatial and temporal resolutions in the Mid-Atlantic region during the summer months. Specifically, we address differences in model performance on monthly timescales and then contrast characterization effectiveness during shorter wind events. Section 2 lays out the data, model setup, and methods used in this study. Section 3 explains the findings of our simulations, and Sect. 4 explores their implications. Finally, Sect. 5 summarizes the intent of the study as well as its findings.

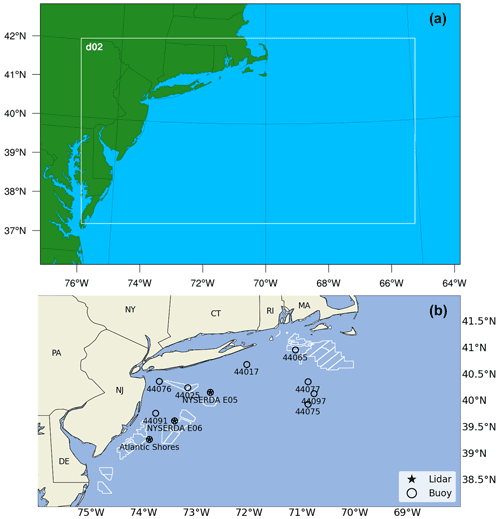

Figure 1. The WRF domains (a) and a zoomed-in, more detailed look at a subset of the nested domain, with markers indicating the lidars (stars) and buoys (hollow circles) that were used in this study (b). The Atlantic Shores and two NYSERDA locations each host both a buoy and a lidar. Planning areas are outlined in white.

We first validate three different SST data sets against observations taken at an array of buoys off the Mid-Atlantic coast during the months of June and July 2020. Following validation, we select two of the three data sets for use as inputs to two different model simulations in the MAB region, which are identically configured aside from the SST data. August is not considered due to data availability constraints at the time of the study. The output data are compared with in situ measurements taken at buoys (SSTs) and floating lidars (winds) in the region. A 140 m hub height is assumed, based on the analysis of regions with moderate wind resource by Lantz et al. (2019). We evaluate performance primarily via a set of validation metrics calculated on monthly timescales. We then flag specific events during which the model generally captures regional winds but output from the two simulations deviates significantly from one another (defined in this study as a difference of 1 or more standard deviations from the mean difference). Validation analysis is then repeated for these periods.

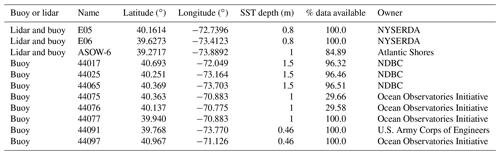

2.1 In situ and lidar data

This study makes use of both SST and wind profile observational data for model validation. SSTs are provided by the National Data Buoy Center (NDBC) at several locations along the Mid-Atlantic coast, as listed in Table 1 and shown in Fig. 1. Data from the buoy located at the Atlantic Shores Offshore Wind ASOW-6 location are also used (Fig. 1b). Wind data have been taken from the Atlantic Shores Offshore Wind floating lidar and the two New York State Energy Research and Development Authority (NYSERDA) floating lidars, whose locations are listed in Table 1. These lidars provide wind speed and wind direction at 10 min intervals from either 10 m (Atlantic Shores) or 40 m (NYSERDA) up through 250 m above sea level. There are periods of missing data for all buoys and lidars.

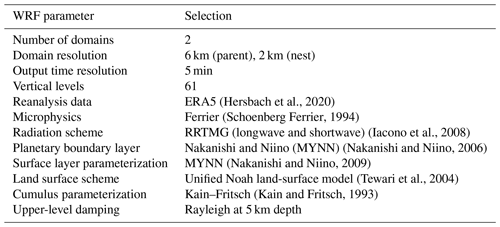

2.2 Model setup

WRF version 4.2.1 is the NWP employed in this study (Powers et al., 2017). WRF is a fully compressible, non-hydrostatic model that is used for both research and operational applications. Our model setup, including key physics and dynamics options, is outlined in Table 2.

The study area spans the majority of the MAB, with the nested domain (grid spacing of 2 km × 2 km) running from the mid-Virginia coast to the south up through Cape Cod to the north (Fig. 1a).

2.3 Sea surface temperature data

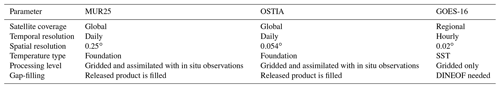

We compare how well three different SST data sets validate against buoy observations and subsequently select the two best-performing data sets to force our simulations (Table 3). Aside from these different SST product inputs, the rest of the model parameters in the simulations remain identical.

We have selected the ERA5 global reanalysis data set to force our simulations. The Operational Sea Surface Temperature and Ice Analysis (OSTIA) is the SST data set native to this product (Hersbach et al., 2020). As such, when included as part of ERA5, OSTIA's resolution has been adjusted to match ERA5's 31 km spatial resolution and hourly temporal resolution. For our simulations, however, we overwrite these SSTs with the OSTIA data set at its original resolution of 0.05∘, the MUR25 data set at its 0.25∘ resolution, and the GOES-16 data set at its resolution of 0.02∘.

The coarsest-resolution product we consider is the 0.25∘ Multi-scale Ultra-high Resolution SST analysis (MUR25). MUR25 is a daily product that assimilates observations and model data to render a complete global grid with SSTs. MUR25 has undergone pre- and post-processing, and the SSTs in the data set are foundation temperatures, which are measured deep enough in the water to discount diurnal temperature fluctuations. MUR25 has a spatial resolution of 0.25∘ × 0.25∘ (Chin et al., 2017), which is significantly coarser than either of the other two SST data sets we evaluate.

Our next SST selection, the Operational Sea Surface Temperature and Sea Ice Analysis (OSTIA) system, is a daily global product that combines in situ observations taken from buoys and ships, model output, and multiple remotely sensed SST data sets (Stark et al., 2007; Donlon et al., 2012). Similar to MUR25, it is a complete product in that it has no missing data. OSTIA has a spatial resolution of 0.05∘ × 0.05∘. Additionally, as a daily product like MUR25, OSTIA provides foundation SSTs (Stark et al., 2007; Donlon et al., 2012; Fiedler et al., 2019).

Our finest-resolution product is taken via GOES-16, which is a geostationary, regional satellite with a spatial resolution of 0.02∘ × 0.02∘ (Schmit et al., 2005, 2017). This product does not assimilate its measurements with in situ data. While GOES-16 does not offer global coverage and, therefore, cannot be used for certain world regions, it does cover the Mid-Atlantic Bureau of Ocean Energy Management (BOEM) offshore wind lease areas, which is our region of interest. Because GOES-16 has an hourly resolution, it can capture diurnal changes in SST and, therefore, measure surface temperature (Schmit et al., 2005, 2008).

Because the GOES-16 data set undergoes less processing, it contains numerous data gaps that must be filled. These missing data are the result of a post-processing algorithm, applied prior to release of the data set, that flags pixels that fall below a specified temperature threshold. This filter is in place to remove cloud cover. While this method is effective with regard to its defined intent, it can also erroneously discard valid pixels that capture the cold water upwelling typical of the MAB region during the warmer months. A cold-pixel filter of this kind is also common practice in global SST data sets (e.g., OSTIA), but the high level of post-processing applied in those products interpolates over and fills the missing grid cells prior to release.

To gap-fill the GOES-16 data set so that we may use it in our simulations, we employ the Data INterpolating Empirical Orthogonal Function algorithm, or DINEOF, which is an open-source application that applies empirical orthogonal function (EOF) analysis to reconstruct incomplete data sets (Ping et al., 2016). The program was originally designed specifically to gap-fill remotely observed SSTs that contain missing data due to cloud-flagging and removal algorithms (as is the case with the GOES-16 data) and has demonstrated strong results in past studies (Alvera-Azcárate et al., 2005; Ping et al., 2016).
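The idea behind EOF-based gap filling can be illustrated with a deliberately simplified sketch. This is not the DINEOF implementation: it keeps only a single leading mode (DINEOF selects the number of retained modes by cross-validation), works on plain Python lists, and all function names are hypothetical. Missing values start at the mean of the observed data and are repeatedly replaced by a low-rank reconstruction until they stop changing.

```python
def leading_mode(matrix, n_power_iter=50):
    """Rank-1 (single EOF) approximation of a matrix via power iteration."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    # Non-constant start vector, so it is less likely to be orthogonal
    # to the leading spatial pattern.
    v = [1.0 + j for j in range(n_cols)]
    u = [0.0] * n_rows
    for _ in range(n_power_iter):
        u = [sum(matrix[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
        norm = sum(x * x for x in u) ** 0.5
        if norm == 0.0:
            # Degenerate case: no signal found, reconstruct zero anomalies.
            return [[0.0] * n_cols for _ in range(n_rows)]
        u = [x / norm for x in u]
        # v carries the singular value, so u v^T is the rank-1 reconstruction.
        v = [sum(matrix[i][j] * u[i] for i in range(n_rows)) for j in range(n_cols)]
    return [[u[i] * v[j] for j in range(n_cols)] for i in range(n_rows)]


def fill_gaps_single_mode(field, n_iter=100, tol=1e-6):
    """Fill None entries of a (time x space) field with a one-mode EOF estimate."""
    observed = [x for row in field for x in row if x is not None]
    if not observed:
        raise ValueError("field contains no observed values")
    mean = sum(observed) / len(observed)
    # Work with anomalies; missing entries start at zero anomaly (the mean).
    anomalies = [[(x - mean) if x is not None else 0.0 for x in row] for row in field]
    missing = [(i, j) for i, row in enumerate(field)
               for j, x in enumerate(row) if x is None]
    for _ in range(n_iter):
        recon = leading_mode(anomalies)
        change = 0.0
        for i, j in missing:
            change = max(change, abs(recon[i][j] - anomalies[i][j]))
            anomalies[i][j] = recon[i][j]  # only missing cells are overwritten
        if change < tol:
            break
    return [[a + mean for a in row] for row in anomalies]


# Hypothetical 3-time x 3-pixel SST field (deg C) with one cloud-masked pixel.
sst = [[1.0, 2.0, 3.0],
       [1.0, 2.0, 3.0],
       [1.0, None, 3.0]]
filled = fill_gaps_single_mode(sst)
```

Observed pixels are never modified; only the masked cells receive reconstructed values, which is the property that makes this family of methods attractive for cloud-flagged SST fields.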

We additionally include in the GOES-16 SST data set the sensor-specific error statistics (SSES) bias field that is included with the distributed product. This component accounts for retrieval bias using a statistical algorithm designed to correct for errors in the SST field. Compared with the SST values alone, the bias-corrected GOES-16 data offsets an inherent warm bias in the raw data.

2.4 Event selection

We are particularly interested in understanding how well the model forecasts shorter wind events, as LLJs and sea breezes occur on hourly timescales. We have created a set of parameters that, when met, detect relatively brief time periods (on the order of hours to days) during which one simulation may be outperforming the other, which we then more closely examine to evaluate differences in their wind profile characterization and SST validation.

For an event to be flagged, it must meet the following criteria.

- Correlation for both models is above 0.5 at hub height for two of the three lidar locations.
- Differences in wind speeds between the two models must be greater than 1 standard deviation from the monthly mean difference.
- Gaps during which the wind speed difference drops below 1 standard deviation must not persist for more than 2 h during a single event.
- Events must last for at least 1 h.

This set of event characteristics first acts to filter out periods during which WRF is generally under-performing – possibly due to model shortcomings outside of SST forcing – so that the performance difference in the selected events may be with more certainty attributed to SSTs. Then, events are located during which the two simulations forecast statistically significantly different hub-height wind speeds, which persist for a period of time long enough to substantially affect power generation.
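The criteria above can be sketched in a few lines of Python. This is an illustrative reading of the rules, not the code used in the study: the function names are hypothetical, the difference series is assumed to be the 10 min hub-height wind speed difference between the two simulations over one month, "greater than 1 standard deviation from the monthly mean difference" is interpreted as |d - mean| > std, and the correlation screen is interpreted as both simulations exceeding the threshold at the same lidar.

```python
from statistics import mean, pstdev


def passes_correlation_screen(corr_by_lidar, threshold=0.5, min_sites=2):
    """corr_by_lidar: list of (corr_sim_a, corr_sim_b) pairs, one per lidar."""
    n_ok = sum(1 for a, b in corr_by_lidar if a > threshold and b > threshold)
    return n_ok >= min_sites


def flag_events(diff, step_minutes=10, max_gap_hours=2.0, min_duration_hours=1.0):
    """Return (start_index, end_index) pairs of flagged events in a monthly series."""
    mu = mean(diff)
    sigma = pstdev(diff)
    # Samples whose difference departs from the monthly mean by more than 1 std.
    exceeds = [abs(d - mu) > sigma for d in diff]
    max_gap = int(max_gap_hours * 60 / step_minutes)       # allowed quiet samples
    min_len = int(min_duration_hours * 60 / step_minutes)  # required samples

    events = []
    start = None
    last_hit = None
    for i, hit in enumerate(exceeds):
        if not hit:
            continue
        if start is None:
            start = i
        elif i - last_hit - 1 > max_gap:
            # The quiet gap was too long: close the previous event, open a new one.
            events.append((start, last_hit))
            start = i
        last_hit = i
    if start is not None:
        events.append((start, last_hit))
    # Assumption: duration is counted in samples between first and last exceedance.
    return [(s, e) for s, e in events if e - s + 1 >= min_len]


# Hypothetical month-like series: one 100 min event and one 30 min blip.
diff = [0.0] * 100
for i in range(20, 30):
    diff[i] = 5.0
for i in range(60, 63):
    diff[i] = 5.0
events = flag_events(diff)
```

In this toy series only the longer excursion survives, because the 30 min blip fails the minimum-duration rule.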

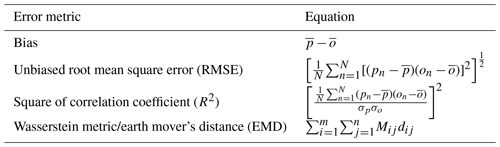

2.5 Validation metrics

To evaluate which simulation performs best during our study period, we calculate sets of validation metrics, as outlined by Optis et al. (2020). Specifically, we look at SSTs and 140 m (hub-height) wind speeds on both monthly and event timescales. The metrics we calculate are named and defined in Table 4, and they give a quantification of the error present in each case.

The bias provides information on the average simulation performance during the evaluation period – specifically, if the model is consistently over- or under-predicting the output variable in consideration. Unbiased root mean square error (RMSE) provides a more nuanced look at the spread of the error in the results. The square of the correlation coefficient (referred to from here forward as correlation) quantifies how well the simulations' variables change in coordination with those of the observations. Finally, the Wasserstein metric, also known as earth mover's distance (EMD), measures the difference between the observed and simulated variable distributions.
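As a rough illustration of these four metrics, the sketch below computes them for paired model and observation samples. The exact definitions used in the study are given in Table 4, which is not reproduced here, so the forms below are common textbook versions and should be read as assumptions: bias as the mean of model minus observation, unbiased RMSE as the RMSE after removing each series' mean, correlation as the squared Pearson coefficient, and EMD as the one-dimensional Wasserstein-1 distance between equal-size samples. The function name is hypothetical.

```python
from math import sqrt


def validation_metrics(model, obs):
    """Bias, unbiased (centered) RMSE, r^2, and 1-D EMD for paired samples."""
    if len(model) != len(obs) or not obs:
        raise ValueError("model and obs must be non-empty and the same length")
    n = len(obs)
    m_bar = sum(model) / n
    o_bar = sum(obs) / n

    bias = m_bar - o_bar  # mean(model - obs)

    # Centered RMSE: error spread after removing the mean offset.
    crmse = sqrt(sum(((m - m_bar) - (o - o_bar)) ** 2
                     for m, o in zip(model, obs)) / n)

    # Squared Pearson correlation coefficient.
    cov = sum((m - m_bar) * (o - o_bar) for m, o in zip(model, obs))
    var_m = sum((m - m_bar) ** 2 for m in model)
    var_o = sum((o - o_bar) ** 2 for o in obs)
    r2 = cov ** 2 / (var_m * var_o)

    # For equal-size, equally weighted 1-D samples, the Wasserstein-1 distance
    # is the mean absolute difference between the sorted values.
    emd = sum(abs(a - b) for a, b in zip(sorted(model), sorted(obs))) / n

    return {"bias": bias, "unbiased_rmse": crmse, "r2": r2, "emd": emd}


# Hypothetical wind speeds (m/s): the model is a constant 1 m/s too slow.
metrics = validation_metrics([1.0, 2.0, 3.0, 4.0], [2.0, 3.0, 4.0, 5.0])
```

The toy case shows why the metrics are complementary: a constant offset produces a nonzero bias and EMD, while the unbiased RMSE is zero and the correlation is perfect.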

We first assess how well each SST data set compares with buoy data. Following SST validation, we evaluate the model's wind characterization performance when forced with different SST data. We assume a hub height of 140 m and compare output winds at this altitude against measurements taken via floating lidars off the Mid-Atlantic coast. Specifically, we analyze monthly performance and then select several shorter periods during June and July 2020 during which we compare wind characterization accuracy.

After evaluation of the SST data sets, only OSTIA- and GOES-16-forced WRF simulations are compared, as during July – one-half of our study period – MUR25 SSTs validate significantly worse against buoy measurements than the other two products. OSTIA SSTs validate the best out of the three data sets. Average, simulated hub-height winds across monthly periods validate similarly for both OSTIA and GOES-16. At an event-scale temporal resolution – that is, on the order of hours – GOES-16 and OSTIA perform comparably, with GOES-16 marginally outperforming OSTIA.

3.1 Sea surface temperature performance

We evaluate SST performance by linearly interpolating the satellite-based products to 10 min intervals (the in situ data output resolution) and making a brief qualitative assessment followed by calculating and comparing at each buoy validation metrics for the different products.
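A minimal sketch of the temporal interpolation step is shown below, assuming timestamps expressed as minutes since a common reference and values held constant outside the source time range. Both choices, and the function name, are assumptions made for illustration rather than details taken from the study.

```python
from bisect import bisect_right


def interp_linear(t_src, y_src, t_new):
    """Linearly interpolate (t_src, y_src) onto t_new; t_src must be increasing."""
    out = []
    for t in t_new:
        if t <= t_src[0]:
            out.append(y_src[0])      # clamp before the first source time
        elif t >= t_src[-1]:
            out.append(y_src[-1])     # clamp after the last source time
        else:
            k = bisect_right(t_src, t)  # t_src[k-1] <= t < t_src[k]
            t0, t1 = t_src[k - 1], t_src[k]
            w = (t - t0) / (t1 - t0)
            out.append(y_src[k - 1] + w * (y_src[k] - y_src[k - 1]))
    return out


# Hypothetical hourly SSTs (deg C) mapped onto a 10 min buoy time axis.
hourly_t = [0, 60, 120]
hourly_sst = [20.0, 21.0, 20.0]
buoy_t = list(range(0, 121, 10))
sst_10min = interp_linear(hourly_t, hourly_sst, buoy_t)
```

For a daily product the same function applies with source times spaced 1440 min apart, in which case the interpolated series carries no sub-daily signal of its own.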

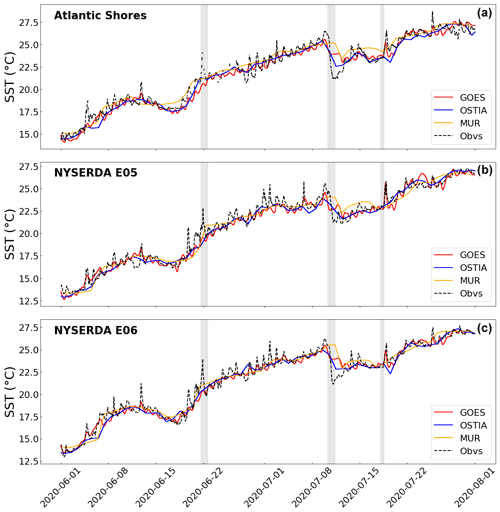

Figure 2. Time series of MUR25, OSTIA, GOES-16, and in situ measurements of SST for June and July at Atlantic Shores (a), NYSERDA E05 (b), and NYSERDA E06 (c). Specific wind events that are evaluated in Sect. 3.3 are highlighted in gray.

The time series plots in Fig. 2 show that while GOES-16 tracks the diurnal cycle seen by observations, the other two products do not (the highlighted time frames in the figure are wind events that are discussed in Sect. 3.3). Despite this feature, however, there exist a number of periods each month when GOES-16 does not accurately capture observed dips in temperature, and, during many of these times, the daily data sets, though missing the nuance of GOES-16, better represent the colder SSTs.

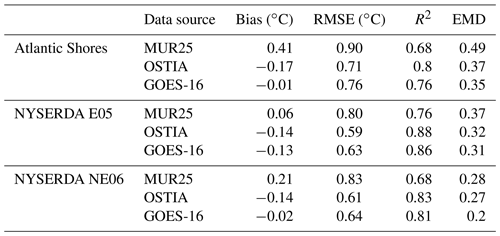

Table 5. Validation metrics for each remotely sensed data source at Atlantic Shores, NYSERDA E05, and NYSERDA E06 on a 10 min output interval for July 2020.

A comparison of all three products shows markedly poorer validation against the floating lidar buoys, for at least two metrics at each buoy, by MUR25 as compared with GOES-16 and OSTIA during July 2020 (Table 5). Because July 2020 is one-half of our study period, we remove MUR25 from further analysis.

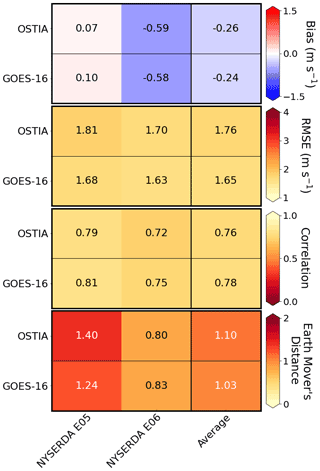

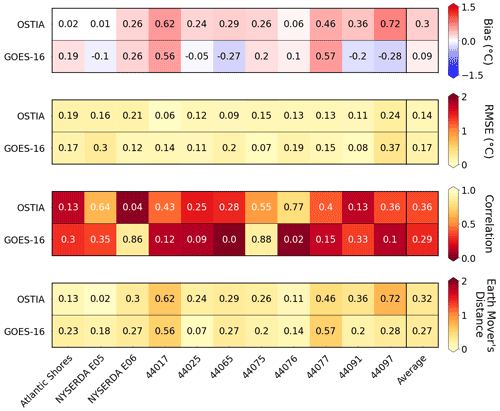

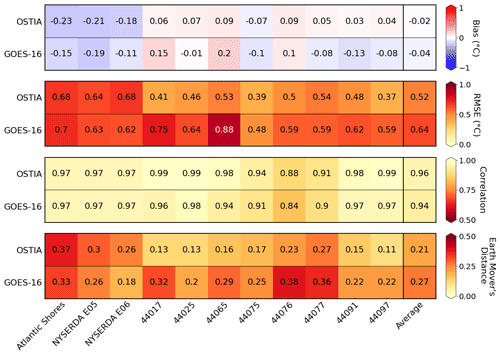

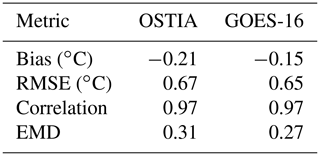

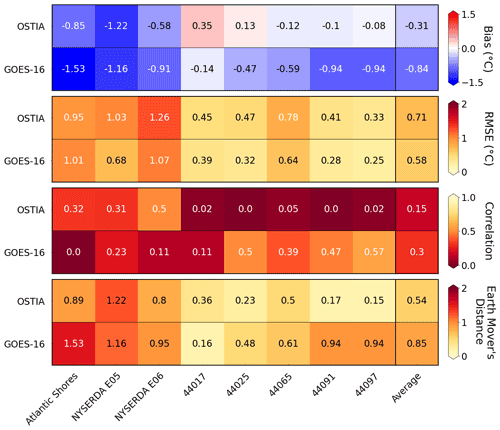

Over the course of June and July combined, looking across the entire buoy array, OSTIA overall outperforms GOES-16, as shown in Fig. 3. Both products have a relatively strong cold bias (between −0.1 and −0.25 ∘C) compared with observations at the three lidar locations. GOES-16 presents a negative bias at five additional buoys, and OSTIA has a negative bias at one other buoy. Both SST products display warm biases at buoys 44017 (off the northeast coast of Long Island) and 44076 (the farthest offshore location considered, southeast of Cape Cod). Although GOES-16 follows the diurnal cycle rather than representing only the daily average SSTs, as is the case with OSTIA, on average the correlation across all sites is comparable between the two SST products. RMSE is higher for GOES-16 at every buoy (with an average 0.64 ∘C, compared with 0.52 ∘C for OSTIA), and EMD for GOES-16 is higher than that for OSTIA at every location except the floating lidars (an average of 0.27, compared with 0.21 for OSTIA).

Figure 3. Mean bias, RMSE, correlation, and EMD for GOES-16 and OSTIA SSTs at each buoy location shown, combined for June and July, along with the average metrics over all sites for each product.

Although OSTIA on average, across all buoys surveyed, better represents measured SSTs, GOES-16 performs better on average across only the floating lidar sites – Atlantic Shores, NYSERDA E05, and NYSERDA E06 (Table 6).

Table 6. Average performance metrics for OSTIA and GOES-16 SSTs across the three floating lidar sites for June and July 2020.

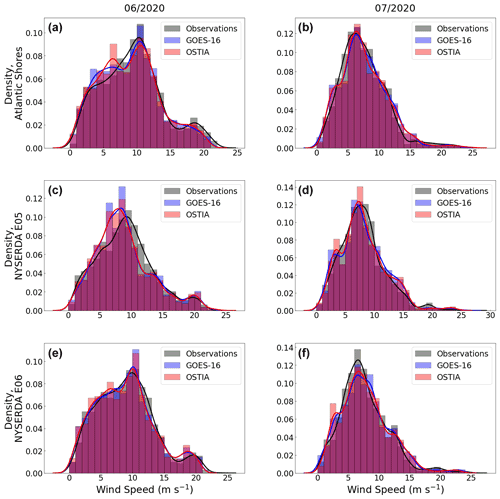

3.2 Monthly wind speeds

The probability distribution functions (PDFs) of hub-height wind speeds at each lidar show that WRF, on a monthly timescale and with a 10 min output resolution, generally captures the shape of the observed wind speed distribution at each lidar (Fig. 4), which suggests that the model itself is performing as it should. The wind speed distributions for each simulation maintain an even closer similarity in shape to one another, which highlights the biases directly related to the particular SST data set being used. A box plot of wind speeds across the entire domain for each simulation and for both months (not shown) indicates that although GOES-16 and OSTIA present near-identical average hub-height wind speeds, GOES-16 winds show a greater spread than OSTIA. Additionally, in both simulations, whole-domain winds in June tend to be significantly faster than July winds (Fig. 4).

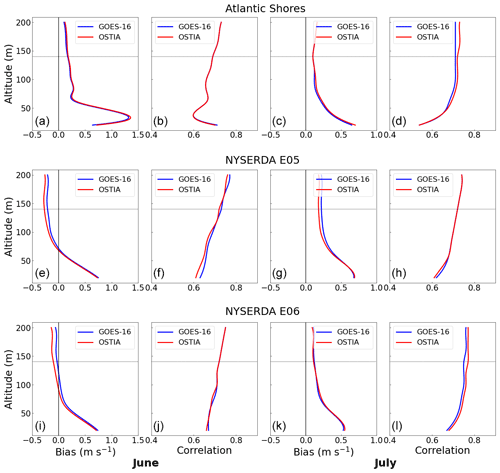

Figure 5. Modeled hub-height wind speed bias and correlation for simulations forced with GOES-16 (blue) and OSTIA (red) SSTs during June and July 2020 at the Atlantic Shores lidar (a–d), the NYSERDA E05 lidar (e–h), and the NYSERDA E06 lidar (i–l).

A comparison between each of the two simulations of monthly hub-height wind speed bias and correlation shows that they perform quite similarly during both June and July (bias and correlation shown in Fig. 5). Both products over-predict wind speeds, except at the two NYSERDA lidars during June. The correlation of each product's forecasted winds with observations, from 100 m above sea level and higher, is above 0.65 at all three lidars during both June and July.

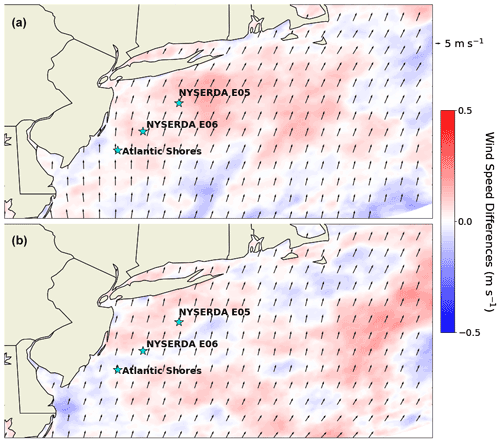

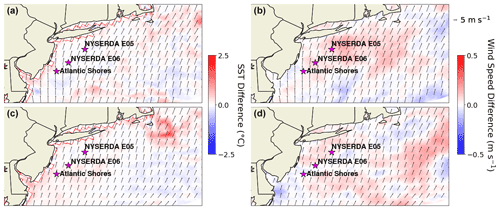

Figure 6. Modeled hub-height (140 m) wind speed differences, GOES-16 – OSTIA, for June (a) and July (b).

A map of the domain displaying the June and July average wind speed differences between the GOES-16- and OSTIA-forced simulations, overlaid by wind barbs indicating the average GOES-16 wind speeds, is shown in Fig. 6. In general, wind speeds deviate from each other only by small amounts on a monthly timescale. The results show maximum average wind speed differences between the two simulations of up to 0.25 m s−1 for each month.

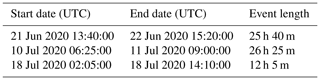

3.3 Event-scale wind speeds

Using the event selection algorithm detailed in Sect. 2.4, we locate three periods – one in June and two in July – during which 140 m wind speeds in the OSTIA and GOES-16 simulations differ from one another by statistically significant amounts and still both validate relatively well against observations (r² > 0.6 at two or more lidars), per the criteria outlined in Sect. 2.4. These events are listed in Table 7. Lidars at which there are relatively large observational data gaps during these time periods (> 20 %) are not considered. Therefore, data from only two locations for the June event and the second July event are used. For all three cases, at each lidar being considered, GOES-16 delivers overall stronger characterization of 140 m wind speeds than OSTIA. Validation metrics vary at different heights, so vertical profiles of bias, r², RMSE, and EMD for each event can be found in Appendix A.

3.3.1 21–22 June 2020 event

We have identified an event that meets our criteria beginning on 21 June 2020 at 13:40:00 UTC and ending on 22 June 2020 at 15:20:00 UTC. During this time period, offshore winds near the coast are southerly, with a tendency to follow the coastline as they rotate around a high-pressure system southeast of New Jersey.

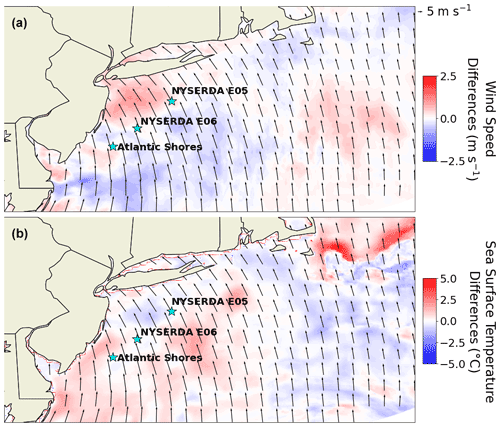

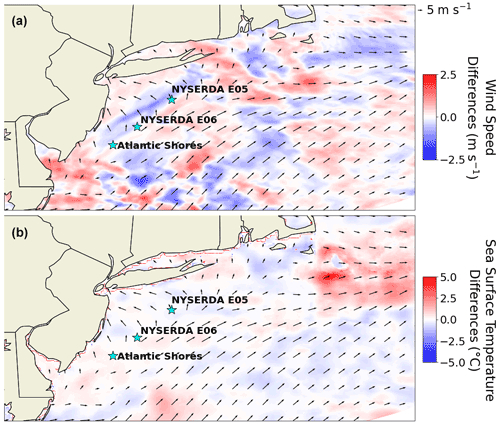

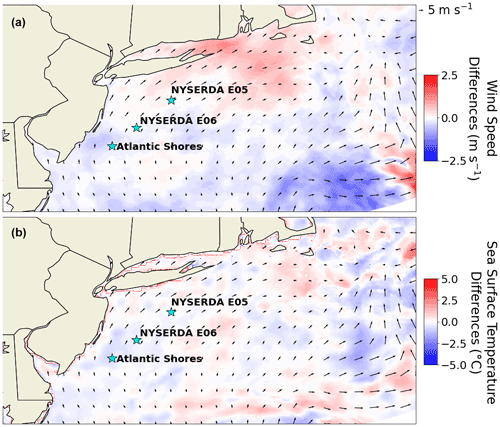

Figure 7. Differences (GOES-16 – OSTIA) in average 140 m wind speeds (a) and SSTs (b) over the entire domain for the 21–22 June event.

Differences in SSTs between the two simulations do not correspond with significant 140 m wind speed differences at the three lidars; however, in other areas of the domain, including a region planned for development just south of Rhode Island (Fig. 1, marked by “RI”), the wind speed differences are larger, with magnitudes reaching over 2 m s−1 (Fig. 7). No significant SST differences are noted within that region, although land surface temperatures may contribute to a stronger temperature gradient.

A planar depiction of 140 m wind speeds across the entire domain shows that, although the mean of the magnitude of the differences is roughly 0.25 m s−1, they vary by location across the region. The maximum difference in average wind speeds during this event is actually 2.25 m s−1. This is noteworthy, as wind speed differences of this size have significant implications regarding power generation.
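To give a sense of scale, the sketch below uses the standard relation for the kinetic power density of the wind, P/A = 0.5 ρ v³, to compare two wind speeds that differ by 2.25 m s−1. The 10 m s−1 baseline and the sea-level air density of 1.225 kg m−3 are hypothetical values chosen for illustration, and the cubic relation only describes turbine output below rated wind speed, so this is an upper-bound style estimate rather than a result of the study.

```python
def wind_power_density(speed_ms, air_density=1.225):
    """Kinetic power per unit swept area (W m^-2): 0.5 * rho * v^3."""
    return 0.5 * air_density * speed_ms ** 3


baseline_speed = 10.0      # hypothetical hub-height wind speed, m/s
speed_difference = 2.25    # maximum event-average difference reported above, m/s

low = wind_power_density(baseline_speed)
high = wind_power_density(baseline_speed + speed_difference)
power_ratio = high / low   # depends only on the speed ratio, not on density
```

Under these assumptions the ratio is (12.25 / 10)³, so the faster flow carries roughly 84 % more power per unit area, which is why even differences of a few metres per second matter for generation estimates.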

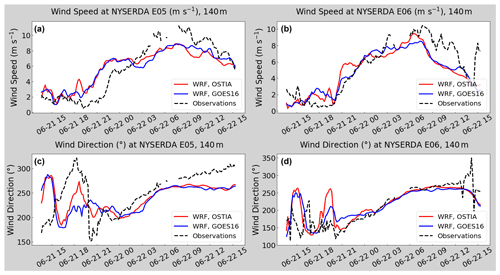

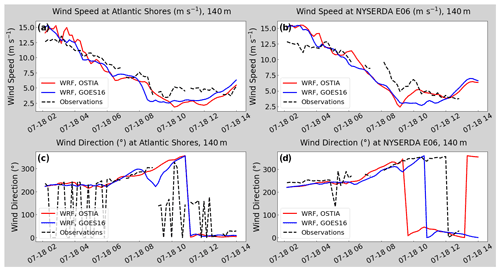

Figure 8. Hub-height (140 m) wind speeds and wind directions at the NYSERDA E05 (a, c) and NYSERDA E06 (b, d) lidars during the 21–22 June event. Atlantic Shores is not shown due to a lack of observational data.

Model output from the two simulations captures the observed trend of a wind speed increase, but modeled wind speeds deviate significantly from one another leading into a first ramping event, during a second ramp event, and once wind speeds begin to stabilize (Fig. 8). Observational data are only available at the two NYSERDA lidars during these periods. The Atlantic Shores lidar has significant data gaps, so validation at this location is not conducted.

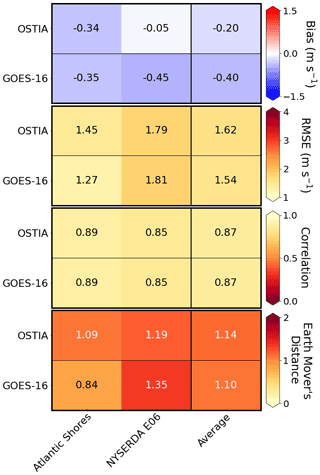

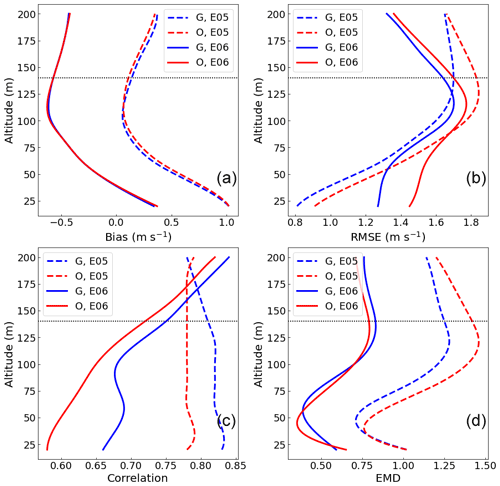

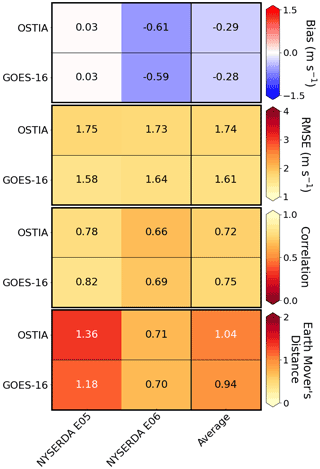

Event validation metrics at both lidars indicate stronger performance by the GOES-16 simulation than OSTIA, particularly at vertical levels associated with typical offshore turbine hub heights. Validation metrics at hub height (140 m) for the two simulations at NYSERDA E05 and NYSERDA E06 are shown in Fig. 9. Averaged correlation between the two sites is 0.78 for GOES-16 and 0.76 for OSTIA. The bias at each site is comparable between the two simulations, at −0.26 and −0.24 m s−1 for OSTIA and GOES-16, respectively. Model performance across the vertical should be taken into consideration if the rotor-equivalent wind speed is used to calculate power generation during extreme shear events, as well as when looking at turbines of varying hub heights. Of note is that both RMSE and EMD are lower for GOES-16 at all heights (Appendix A).

3.3.2 10–11 July 2020 event

We have next flagged an event that occurred between 10 July 2020 at 06:25:00 UTC and 11 July 2020 at 09:00:00 UTC. Synoptically, Tropical Storm Fay was observed to be moving in a southerly direction through the region during this time. High wind speeds, which peaked on 10 July 2020 at 18:00:00 UTC, may be attributed to this storm. Average wind directions also reflect the storm path (Fig. 10). Differences in average wind speed between the two simulations peak at only 1.35 m s−1 – which is around 1 m s−1 less than the maximum difference during the June event. The mean difference in the magnitudes of average wind speeds across the domain is 0.2 m s−1.

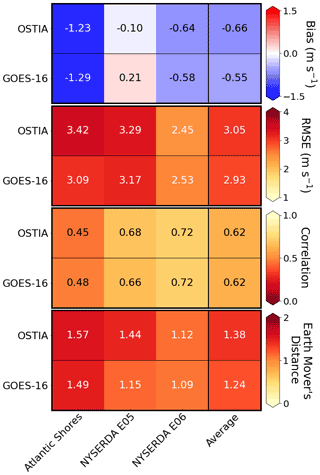

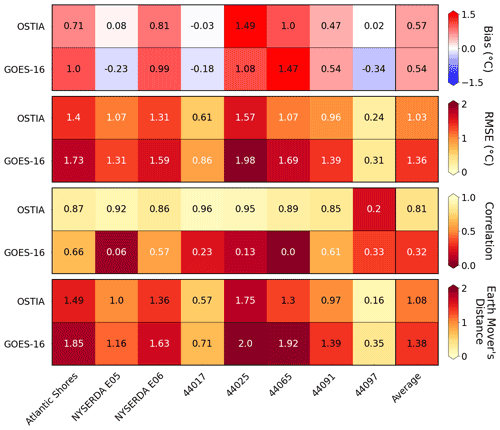

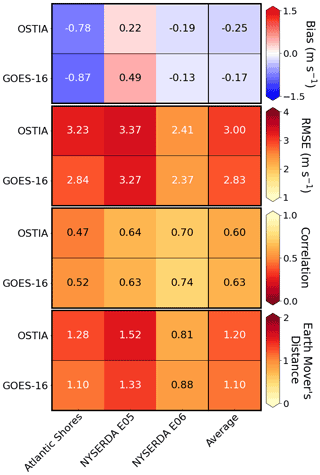

Figure 11. Wind speed validation metrics at a 140 m hub height at the Atlantic Shores, NYSERDA E05, and NYSERDA E06 lidars during the 10–11 July event: bias, RMSE, correlation, and EMD.

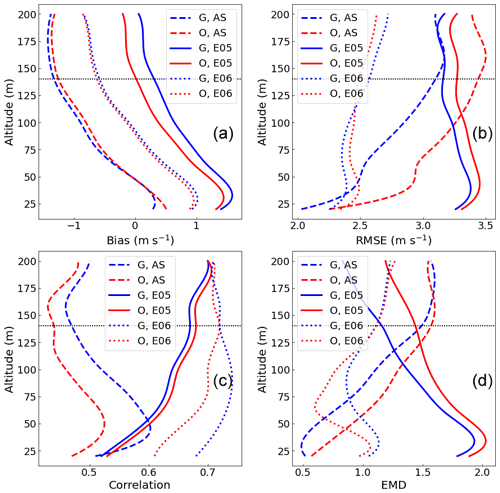

With the winds blowing in a south-southeasterly direction across the leasing area and the differences in GOES-16 showing a relatively stronger temperature gradient across that area in the direction of the wind, the GOES-16 simulation outputs stronger wind speeds compared with OSTIA (Fig. 10). This is reflected in the overall bias seen at the three lidars, although both simulations overall underestimate wind speeds during this time (Fig. 11).

Near-complete wind data sets exist at all three lidars for the duration of this event. Validation metrics show a correlation of 0.45 or greater between model output and observations, for both simulations, at 140 m at each lidar site. The models perform the best, across all four metrics, at NYSERDA E06. Average r² between the two products is comparable, at 0.62. Bias strength peaks at −1.29 m s−1 at Atlantic Shores in the GOES-16 simulation. The bias is strongly negative at Atlantic Shores but is positive at NYSERDA E05, the northernmost lidar, in the GOES-16 simulation. On average, bias in the OSTIA simulation is 0.11 m s−1 stronger (more negative) than that in the GOES-16 simulation. Hub-height (140 m) validation metrics for each simulation at each lidar are shown in Fig. 11.

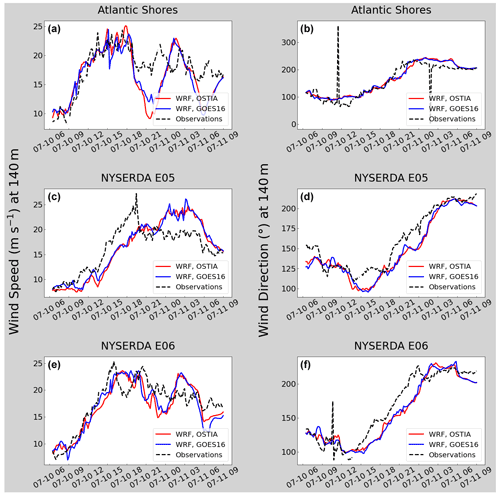

Figure 12. Hub-height (140 m) wind speed and wind direction at the Atlantic Shores lidar (a, b), the NYSERDA E05 lidar (c, d), and the NYSERDA E06 lidar (e, f) during the 10–11 July event.

The GOES-16 and OSTIA simulations output statistically significantly different 140 m wind speeds during this period. Both generally track the major observed wind speed patterns at each observation site, although the timing and magnitude of some longer-scale changes are missed by the models. For example, although the models captured the wind speed increase beginning on 10 July 2020 at 09:00:00 UTC at Atlantic Shores, both erroneously forecast a subsequent down-, up-, and then down-ramp event (Fig. 12a). At NYSERDA E05, the initial spike in observed speeds occurred several hours earlier than the models forecasted (Fig. 12c), and, at NYSERDA E06, the models miss the timing and magnitude of a dip in wind speeds that was observed between 10 July 2020 at 15:00:00 UTC and 10 July 2020 at 20:00:00 UTC; they forecast a much larger drop in speed, almost 10 m s−1 compared with the observed 6 m s−1, beginning just as the observed dip recovers. These faults all contribute to the relatively lower correlation of model output from both simulations with observations for this event.

3.3.3 18 July 2020 event

The last flagged event occurred between 18 July 2020 at 02:05:00 UTC and 18 July 2020 at 14:10:00 UTC. During this time, a high-pressure system was moving westerly into the region, causing a fall in wind speeds throughout the morning. This front spanned eastward into the Atlantic and aligned perpendicular to the New Jersey coast. Hub-height wind speed differences between the two simulations are varied throughout the domain, with a maximum difference in the magnitudes of average event wind speeds of 1.7 m s−1 and a mean difference of 0.29 m s−1 (Fig. 13). Larger differences, in particular, are found near the coast, and positive/negative differences vary spatially.
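The domain-wide difference statistics quoted for each event can be illustrated with a short sketch. This is an assumed reading of the text rather than the authors' procedure: wind speed is first averaged over the event at every grid cell for each simulation, and the maximum and mean of the absolute per-cell differences are then reported. The function name `event_difference_stats` and the toy values are hypothetical.

```python
def event_difference_stats(speeds_a, speeds_b):
    """Max and mean absolute difference of time-averaged speed per grid cell.

    speeds_a, speeds_b: lists of time steps, each a flat list of grid-cell
    wind speeds (same shape for both simulations).
    """
    n_times = len(speeds_a)
    n_cells = len(speeds_a[0])
    abs_diffs = []
    for c in range(n_cells):
        # Event-average wind speed at this cell for each simulation.
        mean_a = sum(step[c] for step in speeds_a) / n_times
        mean_b = sum(step[c] for step in speeds_b) / n_times
        abs_diffs.append(abs(mean_a - mean_b))
    return max(abs_diffs), sum(abs_diffs) / n_cells


# Hypothetical example with 2 time steps and 3 grid cells (m/s).
goes = [[8.0, 9.0, 10.0], [10.0, 9.0, 12.0]]
ostia = [[8.0, 8.0, 10.0], [9.0, 9.0, 10.0]]
max_diff, mean_diff = event_difference_stats(goes, ostia)
```

Averaging in time before differencing means that short-lived disagreements of opposite sign can cancel, so these statistics describe the mean event state rather than instantaneous spread.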

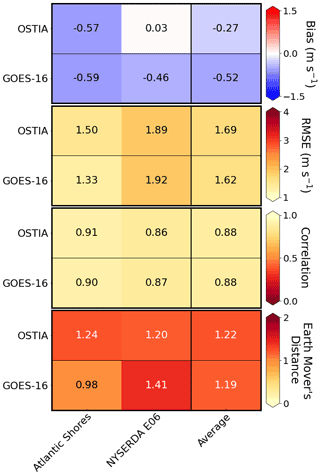

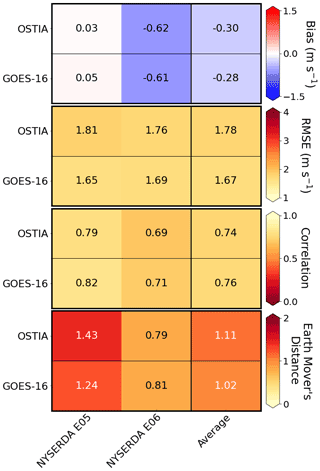

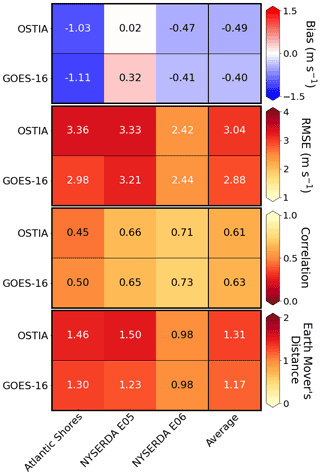

Figure 14. Hub-height (140 m) wind speed validation metrics at the Atlantic Shores and NYSERDA E06 lidars during the 18 July event: bias, RMSE, correlation, and EMD.

Sufficient data for this event are present at Atlantic Shores and NYSERDA E06 but not at NYSERDA E05. Validation analysis for the event at the two lidars indicates that overall the products perform comparably, although OSTIA shows an almost 0.5 m s−1 stronger bias at NYSERDA E06. At lower heights (Fig. A3), there is clear evidence of GOES-16 outperforming OSTIA at Atlantic Shores. Farther north, at NYSERDA E06, the relative performance of each simulation is variable. Validation metrics at Atlantic Shores and NYSERDA E06 are shown in Fig. 14. Of note is that PDFs of 140 m wind speeds during this time (not shown) indicate bimodality in the observed wind speed distribution. Although OSTIA captures this feature, GOES-16 misses it almost entirely – at both lidars.

Figure 15. Hub-height (140 m) wind speed and wind direction at the Atlantic Shores lidar (a, c) and the NYSERDA E06 lidar (b, d) during the 18 July event.

Over this event's time period, 140 m wind speeds drop at both locations from over 14 m s−1 to less than 3 m s−1, before beginning to increase again. As with the previous two cases, the models capture the general wind speed trend of slowing throughout the duration of the event, although they both present errors in the magnitude of wind speeds and the timing of wind profile changes. In this case, at NYSERDA E06 they predict a shift in wind direction before one was observed (there are insufficient wind direction data at NYSERDA E05 to determine whether this holds true at both lidars). Both simulations exhibit greater spread in wind speeds at each lidar than observations, and both have slower average velocities (Fig. 15).

In Sect. 3, we saw varied performance between the two SST products, depending on the variable in consideration (SST or winds) and the timescale (monthly or event scale). SST in OSTIA validated better than GOES-16 across the buoy array. However, hub-height wind speeds at each lidar location, for each month, point to a similar performance by both data sets. More nuanced events that occurred over hourly-to-daily timescales, which were selected based on differences between simulation output and overall WRF performance, also indicate comparable performance between the two simulations, with, in general, a slightly stronger performance using GOES-16 SSTs. Events such as these are of importance to wind energy forecasts because we found that they often correlate with wind ramps, during which times wind speeds fall below the rated power section of turbine power curves. In this region, power output is very sensitive to fluctuations in wind speed. Therefore, obtaining the most accurate wind forecast within this regime is important.
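The sensitivity of power output to wind speed below rated speed can be made concrete with an idealized power curve. The sketch below is illustrative only and does not represent any turbine considered in this study: the cut-in, rated, and cut-out speeds, the 15 MW rating, and the cubic ramp between cut-in and rated speed are all hypothetical assumptions, as are the function names.

```python
def idealized_power_mw(speed, cut_in=3.0, rated=11.0, cut_out=25.0, rated_mw=15.0):
    """Idealized turbine power curve; all default parameters are hypothetical."""
    if speed < cut_in or speed >= cut_out:
        return 0.0
    if speed >= rated:
        return rated_mw
    # Cubic ramp between cut-in and rated speed.
    return rated_mw * (speed**3 - cut_in**3) / (rated**3 - cut_in**3)


def power_error_mw(true_speed, speed_error):
    """Power error caused by a given wind speed forecast error."""
    return idealized_power_mw(true_speed + speed_error) - idealized_power_mw(true_speed)


# The same -1 m/s forecast error in two regimes.
below_rated = power_error_mw(8.0, -1.0)   # on the cubic part of the curve
above_rated = power_error_mw(15.0, -1.0)  # on the flat, rated part
```

With these assumed parameters, a 1 m s−1 underestimate at 8 m s−1 costs about 1.9 MW of the 15 MW rated output, whereas the same error at 15 m s−1 changes nothing. This is why forecast errors during ramps matter more than errors of equal size at high wind speeds.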

Although OSTIA SSTs at the lidars validate better than GOES-16, modeled hub-height (140 m) winds do not demonstrate the same trend. Compared with the 140 m wind heat maps for the events (Figs. 9, 11, and 14), good wind characterization performance does not necessarily correspond to good SST validation (Appendix B), which implies that SSTs across the full study area, not just localized temperatures, may have an impact on winds in the leasing area.

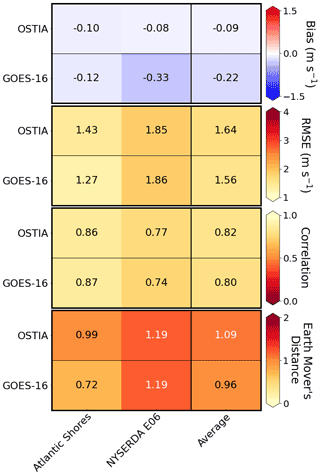

To further examine the disjointedness between SST and wind speed point validations, we consider model performance during each event in conjunction with how well their corresponding SSTs validate with observations across the buoy array (where data are available). During the 21–22 June event, 140 m wind speed bias and EMD in both simulations are the lowest of all the cases considered (Fig. 9), but there is an overall cold SST bias (−0.31 ∘C for OSTIA, −0.84 ∘C for GOES-16) across the buoy arrays, and SST correlation is very poor (Fig. B1). During the 10–11 July event, OSTIA SSTs validate better than GOES-16 (Fig. B2), but the two simulations' hub-height wind speeds validate similarly, with relatively poor performance across all validation metrics compared with the other flagged events. During the 18 July event, while SSTs from both products validated the best out of the three events (Fig. B3) and the models captured the overall trend in wind speeds with correlations of 0.88 for both simulations, EMD and the magnitude of the bias were both greater than in the 21–22 June event.

Across all three events there is no clear relationship between wind forecasting ability and SST correctness. However, as with the monthly analysis, the relatively larger differences between SST and wind speed validations in the GOES-16 event simulations compared with those of OSTIA suggest that GOES-16's finer temporal and spatial resolutions may more accurately influence larger-scale dynamics throughout the region, leading to greater forecasting skill. Though beyond the scope of this study, more widespread in situ observations and validation of the two data sets against observations would help address how large this effect is. Such a study would additionally allow for a better understanding of how much a nuanced representation of diurnal SST cycling (as in the GOES-16 product, Fig. 2) influences the accuracy of wind forecasting in the region.

Figure 16. Differences (GOES-16 – OSTIA) in average SST for June (a) and July (c), and in modeled 140 m wind speeds for June (b) and July (d).

Rapid fluctuations in wind speed, on the order of minutes to hours, are overall not well captured by either simulation. This is most evident during the 10–11 July event, when the magnitude and timing of wind speed changes are most misaligned. Correlation in particular is low (0.62), and RMSE is high (3 m s−1). In comparison, during the 18 July event, when wind speeds slow without any ramping, both simulations perform better; in particular, correlation is relatively strong for both (0.88). Similarly, during the 21–22 June event, which lacks any sharp ramping, correlation is also above 0.75 for both GOES-16 and OSTIA. Shorter, weaker fluctuations in wind speed during this event are not well captured by either simulation (Fig. 8), although GOES-16 validates with nominally less error (Fig. 9). It is possible that GOES-16's finer resolution may contribute to its slightly stronger performance in this case, as the product does capture hourly fluctuations in SSTs. In contrast, during the 10–11 July storm, winds are so strong and variable that SST resolution does not appear to make a significant difference, and the two simulations both show significant error.

Also of note are the differences in weather between June and July in the Mid-Atlantic region. June average wind speeds are faster than those in July for both simulations. Overall cooler SSTs throughout the region are also present in June, with a weaker temperature gradient in the cold bubble offshore of Cape Cod than in July. The difference in temperature in this region between GOES-16 and OSTIA is more pronounced in July, with GOES-16 showing warmer monthly average SSTs (Fig. 16). The corresponding average wind speed and surface pressure differences between the products are also more distinct in July. While the two simulations validated similarly for each month, in the more turbulent July environment, OSTIA outperforms GOES-16. However, due to the greater number of missing pixels that required gap-filling in July as compared with June (36.86 % more over the course of each month, resulting from the increased cloud cover – and the possible stronger coastal cold pool), this indication of product superiority may be questionable. The addition of more refined post-processing to account for incorrectly cloud-filtered pixels, as shown in Murphy et al. (2021), has been demonstrated to improve the accuracy of GOES-16 SSTs. A comparison of simulations using OSTIA against those using the sophisticated GOES-16 product should be conducted in the future to evaluate the level of improvement delivered using the latter's more computationally expensive data set.

In this study, we evaluated how SST inputs affect how well WRF forecasts winds off the Mid-Atlantic coast. We initially considered three remotely sensed SST data sets and validated them against in situ observations taken at various buoys in the region. The first data set, OSTIA, has a 0.05∘ resolution, is output at a daily interval, and is assimilated with in situ observations. The second product, GOES-16, has a finer resolution of 0.02∘ and is generated on an hourly interval but does not have the same level of post-processing as OSTIA. The data set contains gaps and lacks assimilation with in situ observations. To account for missing data, we ran an EOF process to statistically analyze trends in each monthly data set and fill its gaps. The third SST data set, MUR25, has a 0.25∘ spatial resolution and a daily temporal resolution, and similar to OSTIA it is assimilated with in situ observations. MUR25 was removed from further analysis after SST validation, as its performance compared with the other two data sets was notably poor.
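The idea behind EOF-based gap filling can be sketched in a few lines. This is a deliberately simplified illustration, not the DINEOF software used in this study: it retains a single EOF mode, skips the mean removal and the cross-validation that the full method typically uses to choose the number of modes, and finds the leading mode by power iteration. The function names and the toy data are hypothetical. Gaps are first set to the mean of the observed values, the leading mode of the filled matrix is computed, the gaps (and only the gaps) are overwritten with the one-mode reconstruction, and the process repeats.

```python
import math


def leading_mode(matrix, n_power_iter=50):
    """Leading singular triplet (sigma, u, v) of a small matrix by power iteration."""
    n_rows, n_cols = len(matrix), len(matrix[0])
    v = [1.0 / math.sqrt(n_cols)] * n_cols
    for _ in range(n_power_iter):
        # u = X v, normalized.
        u = [sum(matrix[i][j] * v[j] for j in range(n_cols)) for i in range(n_rows)]
        norm_u = math.sqrt(sum(x * x for x in u))
        u = [x / norm_u for x in u]
        # v = X^T u; its norm estimates the leading singular value.
        v = [sum(matrix[i][j] * u[i] for i in range(n_rows)) for j in range(n_cols)]
        sigma = math.sqrt(sum(x * x for x in v))
        v = [x / sigma for x in v]
    return sigma, u, v


def fill_gaps_single_eof(field, n_outer=200):
    """Fill None entries of a (time x space) matrix with a one-mode reconstruction."""
    gaps = [(i, j) for i, row in enumerate(field) for j, x in enumerate(row) if x is None]
    observed = [x for row in field for x in row if x is not None]
    first_guess = sum(observed) / len(observed)
    filled = [[first_guess if x is None else x for x in row] for row in field]
    for _ in range(n_outer):
        sigma, u, v = leading_mode(filled)
        # Overwrite only the gaps; observed values are never changed.
        for i, j in gaps:
            filled[i][j] = sigma * u[i] * v[j]
    return filled


# Hypothetical SST-like field: one spatial pattern (deg C) scaled in time.
amplitude = [1.00, 1.05, 1.10, 1.02]
pattern = [18.0, 19.0, 20.0, 21.0]
truth = [[a * p for p in pattern] for a in amplitude]
gappy = [row[:] for row in truth]
gappy[0][1] = None  # simulate cloud-masked pixels
gappy[2][3] = None
filled = fill_gaps_single_eof(gappy)
```

Because the toy field consists of exactly one mode, the iteration recovers the masked values essentially exactly. Real SST fields need several modes, and the quality of the fill degrades as the fraction of missing pixels grows, which is relevant to the July gap-filling caveat discussed above.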

Following SST validation, WRF was run for June and July of 2020 in the Mid-Atlantic domain depicted in Fig. 1. The simulation setups were identical with the exception of the input SST field (key model parameters presented in Table 2). We compared the characterized 140 m (hub-height) winds against lidar observations by calculating correlation, bias, RMSE, and EMD on both monthly and event-length (hourly) timescales. Times during which there was significant difference in wind speed output from the two simulations and the general WRF performance was satisfactory were flagged as events. Our findings indicated that while OSTIA SSTs validated better against buoy measurements, the model validated comparably when forced with each SST data set, with GOES-16 having output nominally more accurate wind speeds. These findings suggest that the differences in temporal and spatial resolution between the two SST products influence wind speed characterization, with finer resolutions leading to better forecasting skill.
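One simple way to locate candidate events of this kind is to search for sustained periods in which the two simulations disagree. The sketch below is an assumption-laden illustration rather than the selection procedure used in this study: it flags runs of consecutive time steps in which the absolute wind speed difference exceeds a threshold, and it does not implement the additional check that overall WRF performance was satisfactory. The function name, the 1 m s−1 threshold, and the minimum run length are hypothetical.

```python
def flag_events(speed_a, speed_b, threshold=1.0, min_steps=3):
    """Return (start, end) index pairs of runs where |a - b| > threshold.

    Both the threshold (m/s) and the minimum run length are hypothetical.
    """
    events = []
    start = None
    for i, (a, b) in enumerate(zip(speed_a, speed_b)):
        if abs(a - b) > threshold:
            if start is None:
                start = i  # a new run begins here
        else:
            if start is not None and i - start >= min_steps:
                events.append((start, i - 1))
            start = None
    # Close a run that extends to the end of the series.
    n = min(len(speed_a), len(speed_b))
    if start is not None and n - start >= min_steps:
        events.append((start, n - 1))
    return events


# Hypothetical hourly 140 m wind speeds (m/s) from the two simulations.
goes_speed = [8.0, 8.1, 9.5, 9.8, 10.2, 8.3, 8.0, 9.6, 8.1]
ostia_speed = [8.0, 8.0, 8.0, 8.1, 8.4, 8.2, 8.0, 8.0, 8.0]
events = flag_events(goes_speed, ostia_speed)
```

Here only the three-step run at indices 2 to 4 is flagged; the isolated exceedance at index 7 is too short to count as an event under the assumed minimum length.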

Overall, this study shows that SST inputs to WRF do affect forecasted winds and how well they validate against observations in future leasing areas in the Mid-Atlantic. Although GOES-16 SSTs validate worse against buoy observations than OSTIA SSTs, on both monthly and event timescales, the wind characterization produced using GOES-16 inputs validates as well as, or in some cases better than, the OSTIA characterization, indicating that finer temporal and spatial SST resolution may contribute to improved wind forecasts in this region. To further explore this, a higher-quality post-processed GOES-16 data set assimilated with in situ observations, such as that created by Murphy et al. (2021), could be evaluated in future studies; generating a comparable data set was beyond the scope of this study.

This step of the research is in its early stages, and more cases need to be considered – on both monthly and event scales. Future work should focus on identifying and analyzing more promising events – in particular, wind ramps and low-pressure systems, as they have been shown to produce larger differences between GOES-16- and OSTIA-forced model output. Wind direction validation, as well, should be considered in the future, as it was beyond the scope of this study.

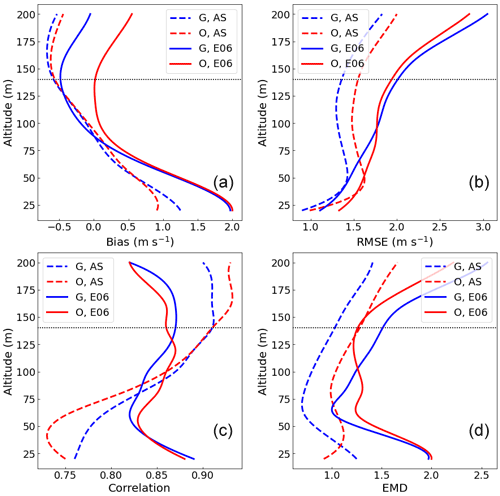

Contents of this Appendix include plots of horizontally averaged bias, correlation, RMSE, and EMD from sea level through a vertical height of 200 m for each flagged case at each relevant lidar. Figure A1 shows metrics at the two NYSERDA lidars for the 21–22 June event, Fig. A2 shows metrics at all three lidars for the 10–11 July event, and Fig. A3 shows metrics at Atlantic Shores and NYSERDA E06 for the 18 July event. The GOES-16 simulations are indicated by the blue lines and labeled with “G”, while the OSTIA simulations are shown in red and labeled with “O”. The labels AS, E05, and E06 refer to Atlantic Shores, NYSERDA E05, and NYSERDA E06, respectively.

Figure A1. Validation metrics at NYSERDA E05 and NYSERDA E06 during the 21–22 June event: bias (a), RMSE (b), correlation (c), and EMD (d). Hub height is marked by a horizontal line at 140 m. The label “G” refers to GOES-16 simulations, while “O” refers to OSTIA simulations.

Figure A2. Validation metrics at Atlantic Shores, NYSERDA E05, and NYSERDA E06 during the 10–11 July event: bias (a), RMSE (b), correlation (c), and EMD (d). Hub height is marked by a horizontal line at 140 m. The label “G” refers to GOES-16 simulations, while “O” refers to OSTIA simulations.

Contents of this Appendix include heat maps of SST validation metrics for each of the three events flagged in this study. Each plot includes a different subset of the buoy array; locations with missing data were removed from analysis on a case by case basis. The average value for each metric across all buoys considered is located on the far right side of each row. The validation metrics considered are bias, RMSE, correlation, and EMD. Figure B1 shows SST validation metrics for the 21–22 June event, Fig. B2 shows SST validation metrics for the 10–11 July event, and Fig. B3 shows SST validation metrics for the 18 July event.

Figure B1. SST validation metrics (bias, RMSE, correlation, EMD) during the 21–22 June event, calculated across a subset of the buoy array. Omitted buoys are absent due to missing observational data at those locations.

Figure B2. SST validation metrics (bias, RMSE, correlation, EMD) during the 10–11 July event, calculated across a subset of the buoy array. Omitted buoys are absent due to missing observational data at those locations.

Although this study considered a hub height of 140 m and therefore analyzed wind speeds primarily at that height, we include here validation metrics for each flagged event, at each lidar, for 100 m and 120 m hub heights. The 140 m hub heights are expected to be close to the standard for offshore wind development (Lantz et al., 2019), but shorter turbines may also be constructed; additionally, having a better understanding of model performance across the rotor-swept area and not just at hub height can better inform wind power potential characterization.

Below, Fig. C1 presents 100 m model performance at the two NYSERDA lidars for the 21–22 June event, and Fig. C2 shows performance metrics for the same event and lidars at 120 m. At both heights, GOES-16 performs slightly better than OSTIA.

Figure C3 shows 100 m model performance at all three lidars for the 10–11 July event, and Fig. C4 presents performance metrics at all three lidars for the 10–11 July event at 120 m. While GOES-16 shows a higher average wind speed bias than OSTIA, the results from GOES-16 have a lower average RMSE and a higher average correlation than OSTIA. The wind speed bias at all three lidars is notably strong, with a negative bias at Atlantic Shores (over 1 m s−1 slower than observed) and a positive bias at both NYSERDA lidars (well over 1 m s−1 at a 120 m hub height at NYSERDA E05).

Figure C5 depicts 100 m model performance at Atlantic Shores and NYSERDA E06 for the 18 July event, and Fig. C6 shows performance metrics at Atlantic Shores and NYSERDA E06 for the 18 July event at 120 m. Although both simulations' hub-height wind speeds show comparable correlation with observations, OSTIA has an over 0.1 m s−1 stronger wind speed bias than GOES-16, and GOES-16 validates with lower RMSE and EMD values.

Figure C1. The 100 m hub-height validation metrics at NYSERDA E05 and NYSERDA E06 during the 21–22 June event: bias, RMSE, correlation, and EMD.

Figure C2. The 120 m hub-height validation metrics at NYSERDA E05 and NYSERDA E06 during the 21–22 June event: bias, RMSE, correlation, and EMD.

Figure C3. The 100 m hub-height validation metrics at NYSERDA E05 and NYSERDA E06 during the 10–11 July event: bias, RMSE, correlation, and EMD.

Figure C4. The 120 m hub-height validation metrics at NYSERDA E05 and NYSERDA E06 during the 10–11 July event: bias, RMSE, correlation, and EMD.

Figure C5. The 100 m hub-height validation metrics at Atlantic Shores and NYSERDA E06 during the 18 July event: bias, RMSE, correlation, and EMD.

Buoy data outside of the Atlantic Shores and NYSERDA floating lidars were collected via the National Oceanic and Atmospheric Administration's NDBC (https://www.ndbc.noaa.gov; National Oceanic and Atmospheric Administration, 2021). Satellite data were collected via the Physical Oceanography Distributed Active Archive Center. The WRF namelist may be obtained at https://doi.org/10.5281/zenodo.7275214 (Optis and Redfern, 2022).

SR downloaded and processed the GOES-16 data, ran the model for this SST input, and led the data analysis with contributions from MO, GX, and CD. MO designed the model setup, ran the model with OSTIA data, and contributed to the data analysis.

The contact author has declared that none of the authors has any competing interests.

Publisher's note: Copernicus Publications remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

We thank Patrick Hawbecker (National Center for Atmospheric Research) for providing code to overwrite ERA5 SSTs with those of each satellite product. We also thank Sarah C. Murphy (Department of Marine and Coastal Sciences, Rutgers University), Travis Miles (Department of Marine and Coastal Sciences, Rutgers University), and Joseph F. Brodie (Department of Atmospheric Research, Rutgers University) for providing help and guidance with the DINEOF software.

This research has been supported by the U.S. Department of Energy Office of Energy Efficiency and Renewable Energy Wind Energy Technologies Office and by the National Offshore Wind Research and Development Consortium under agreement no. CRD-19-16351.

This paper was edited by Sara C. Pryor and reviewed by two anonymous referees.

Aird, J. A., Barthelmie, R. J., Shepherd, T. J., and Pryor, S. C.: Occurrence of Low-Level Jets over the Eastern U.S. Coastal Zone at Heights Relevant to Wind Energy, Energies, 15, 445, https://doi.org/10.3390/en15020445, 2022.

Alvera-Azcárate, A., Barth, A., Rixen, M., and Beckers, J. M.: Reconstruction of incomplete oceanographic data sets using empirical orthogonal functions: application to the Adriatic Sea surface temperature, Ocean Model., 9, 325–346, https://doi.org/10.1016/j.ocemod.2004.08.001, 2005.

Banta, R. M., Pichugina, Y. L., Brewer, W. A., James, E. P., Olson, J. B., Benjamin, S. G., Carley, J. R., Bianco, L., Djalalova, I. V., Wilczak, J. M., Hardesty, R. M., Cline, J., and Marquis, M. C.: Evaluating and Improving NWP Forecast Models for the Future: How the Needs of Offshore Wind Energy Can Point the Way, B. Am. Meteorol. Soc., 99, 1155–1176, https://doi.org/10.1175/BAMS-D-16-0310.1, 2018.

Bureau of Ocean Energy Management: Outer Continental Shelf Renewable Energy Leases Map Book, https://www.boem.gov/renewable-energy/mapping-and-data/renewable-energy-gis-data (last access: 19 December 2022), 2018.

Byun, D., Kim, S., Cheng, F.-Y., Kim, H.-C., and Ngan, F.: Improved Modeling Inputs: Land Use and Sea-Surface Temperature, Final Report, Texas Commission on Environmental Quality, https://www.tceq.texas.gov/airquality/airmod/project/pj_report_met.html (last access: 19 December 2022), 2007.

Chen, F., Miao, S., Tewari, M., Bao, J.-W., and Kusaka, H.: A numerical study of interactions between surface forcing and sea breeze circulations and their effects on stagnation in the greater Houston area, J. Geophys. Res.-Atmos., 116, D12105, https://doi.org/10.1029/2010JD015533, 2011.

Chen, Z., Curchitser, E., Chant, R., and Kang, D.: Seasonal Variability of the Cold Pool Over the Mid-Atlantic Bight Continental Shelf, J. Geophys. Res.-Oceans, 123, 8203–8226, https://doi.org/10.1029/2018JC014148, 2018.

Chin, T. M., Vazquez-Cuervo, J., and Armstrong, E. M.: A multi-scale high-resolution analysis of global sea surface temperature, Remote Sens. Environ., 200, 154–169, https://doi.org/10.1016/j.rse.2017.07.029, 2017.

Colle, B. A. and Novak, D. R.: The New York Bight Jet: Climatology and Dynamical Evolution, Mon. Weather Rev., 138, 2385–2404, https://doi.org/10.1175/2009MWR3231.1, 2010.

Debnath, M., Doubrawa, P., Optis, M., Hawbecker, P., and Bodini, N.: Extreme wind shear events in US offshore wind energy areas and the role of induced stratification, Wind Energ. Sci., 6, 1043–1059, https://doi.org/10.5194/wes-6-1043-2021, 2021.

Donlon, C. J., Martin, M., Stark, J., Roberts-Jones, J., Fiedler, E., and Wimmer, W.: The Operational Sea Surface Temperature and Sea Ice Analysis (OSTIA) system, Remote Sens. Environ., 116, 140–158, https://doi.org/10.1016/j.rse.2010.10.017, 2012.

Dragaud, I. C. D. V., Soares da Silva, M., Assad, L. P. d. F., Cataldi, M., Landau, L., Elias, R. N., and Pimentel, L. C. G.: The impact of SST on the wind and air temperature simulations: a case study for the coastal region of the Rio de Janeiro state, Meteorol. Atmos. Phys., 131, 1083–1097, https://doi.org/10.1007/s00703-018-0622-5, 2019.

Fiedler, E. K., Mao, C., Good, S. A., Waters, J., and Martin, M. J.: Improvements to feature resolution in the OSTIA sea surface temperature analysis using the NEMOVAR assimilation scheme, Q. J. Roy. Meteor. Soc., 145, 3609–3625, https://doi.org/10.1002/qj.3644, 2019.

Gelaro, R., McCarty, W., Suárez, M. J., Todling, R., Molod, A., Takacs, L., Randles, C. A., Darmenov, A., Bosilovich, M. G., Reichle, R., Wargan, K., Coy, L., Cullather, R., Draper, C., Akella, S., Buchard, V., Conaty, A., da Silva, A. M., Gu, W., Kim, G.-K., Koster, R., Lucchesi, R., Merkova, D., Nielsen, J. E., Partyka, G., Pawson, S., Putman, W., Rienecker, M., Schubert, S. D., Sienkiewicz, M., and Zhao, B.: The Modern-Era Retrospective Analysis for Research and Applications, Version 2 (MERRA-2), J. Climate, 30, 5419–5454, https://doi.org/10.1175/JCLI-D-16-0758.1, 2017.

Gerber, H., Chang, S., and Holt, T.: Evolution of a Marine Boundary-Layer Jet, J. Atmos. Sci., 46, 1312–1326, https://doi.org/10.1175/1520-0469(1989)046<1312:EOAMBL>2.0.CO;2, 1989.

Gutierrez, W., Araya, G., Kiliyanpilakkil, P., Ruiz-Columbie, A., Tutkun, M., and Castillo, L.: Structural impact assessment of low level jets over wind turbines, J. Renew Sustain. Ener., 8, 023308, https://doi.org/10.1063/1.4945359, 2016.

Gutierrez, W., Ruiz-Columbie, A., Tutkun, M., and Castillo, L.: Impacts of the low-level jet's negative wind shear on the wind turbine, Wind Energ. Sci., 2, 533–545, https://doi.org/10.5194/wes-2-533-2017, 2017.

Hersbach, H., Bell, B., Berrisford, P., Hirahara, S., Horányi, A., Muñoz-Sabater, J., Nicolas, J., Peubey, C., Radu, R., Schepers, D., Simmons, A., Soci, C., Abdalla, S., Abellan, X., Balsamo, G., Bechtold, P., Biavati, G., Bidlot, J., Bonavita, M., De Chiara, G., Dahlgren, P., Dee, D., Diamantakis, M., Dragani, R., Flemming, J., Forbes, R., Fuentes, M., Geer, A., Haimberger, L., Healy, S., Hogan, R. J., Hólm, E., Janisková, M., Keeley, S., Laloyaux, P., Lopez, P., Lupu, C., Radnoti, G., de Rosnay, P., Rozum, I., Vamborg, F., Villaume, S., and Thépaut, J.-N.: The ERA5 global reanalysis, Q. J. Roy. Meteor. Soc., 146, 1999–2049, https://doi.org/10.1002/qj.3803, 2020.

Iacono, M. J., Delamere, J. S., Mlawer, E. J., Shephard, M. W., Clough, S. A., and Collins, W. D.: Radiative forcing by long-lived greenhouse gases: Calculations with the AER radiative transfer models, J. Geophys. Res.-Atmos., 113, D13103, https://doi.org/10.1029/2008JD009944, 2008.

Källstrand, B.: Low level jets in a marine boundary layer during spring, Contrib. Atmos. Phys., 71, 359–373, 1998.

Kain, J. S. and Fritsch, J. M.: Convective parameterization for mesoscale models: The Kain–Fritsch scheme, in: The representation of cumulus convection in numerical models, American Meteorological Society, Boston, MA, 165–170, https://doi.org/10.1007/978-1-935704-13-3_16, 1993.

Kikuchi, Y., Fukushima, M., and Ishihara, T.: Assessment of a Coastal Offshore Wind Climate by Means of Mesoscale Model Simulations Considering High-Resolution Land Use and Sea Surface Temperature Data Sets, Atmosphere, 11, 379, https://doi.org/10.3390/atmos11040379, 2020.

Lantz, E. J., Roberts, J. O., Nunemaker, J., DeMeo, E., Dykes, K. L., and Scott, G. N.: Increasing Wind Turbine Tower Heights: Opportunities and Challenges, United States, OSTI technical report NREL/TP-5000-73629, NREL, https://doi.org/10.2172/1515397, 2019.

Li, H., Claremar, B., Wu, L., Hallgren, C., Körnich, H., Ivanell, S., and Sahlée, E.: A sensitivity study of the WRF model in offshore wind modeling over the Baltic Sea, Geosci. Front., 12, 101229, https://doi.org/10.1016/j.gsf.2021.101229, 2021.

Lombardo, K., Sinsky, E., Edson, J., Whitney, M. M., and Jia, Y.: Sensitivity of Offshore Surface Fluxes and Sea Breezes to the Spatial Distribution of Sea-Surface Temperature, Bound.-Lay. Meteorol., 166, 475–502, https://doi.org/10.1007/s10546-017-0313-7, 2018.

Miller, S. T. K., Keim, B. D., Talbot, R. W., and Mao, H.: Sea breeze: Structure, forecasting, and impacts, Rev. Geophys., 41, 1011, https://doi.org/10.1029/2003RG000124, 2003.

Murphy, P., Lundquist, J. K., and Fleming, P.: How wind speed shear and directional veer affect the power production of a megawatt-scale operational wind turbine, Wind Energ. Sci., 5, 1169–1190, https://doi.org/10.5194/wes-5-1169-2020, 2020.

Murphy, S. C., Nazzaro, L. J., Simkins, J., Oliver, M. J., Kohut, J., Crowley, M., and Miles, T. N.: Persistent upwelling in the Mid-Atlantic Bight detected using gap-filled, high-resolution satellite SST, Remote Sens. Environ., 262, 112487, https://doi.org/10.1016/j.rse.2021.112487, 2021.

Nakanishi, M. and Niino, H.: An improved Mellor–Yamada level-3 model: Its numerical stability and application to a regional prediction of advection fog, Bound.-Lay. Meteorol., 119, 397–407, 2006.

Nakanishi, M. and Niino, H.: Development of an improved turbulence closure model for the atmospheric boundary layer, J. Meteorol. Soc. Jpn. Ser. II, 87, 895–912, 2009.

National Oceanic and Atmospheric Administration: National Data Buoy Center, https://www.ndbc.noaa.gov (last access: 19 December 2022), 2021.

Nunalee, C. G. and Basu, S.: Mesoscale modeling of coastal low-level jets: implications for offshore wind resource estimation, Wind Energy, 17, 1199–1216, https://doi.org/10.1002/we.1628, 2014.

Optis, M. and Redfern, S.: Mid-Atlantic SST namelist, Zenodo [data set], https://doi.org/10.5281/zenodo.7275214, 2022.

Optis, M., Bodini, N., Debnath, M., and Doubrawa, P.: Best Practices for the Validation of US Offshore Wind Resource Models, Tech. Rep. NREL/TP-5000-78375, NREL – National Renewable Energy Laboratory, https://doi.org/10.2172/1755697, 2020.

Park, R. S., Cho, Y.-K., Choi, B.-J., and Song, C. H.: Implications of sea surface temperature deviations in the prediction of wind and precipitable water over the Yellow Sea, J. Geophys. Res.-Atmos., 116, D17106, https://doi.org/10.1029/2011JD016191, 2011.

Pichugina, Y. L., Brewer, W. A., Banta, R. M., Choukulkar, A., Clack, C. T. M., Marquis, M. C., McCarty, B. J., Weickmann, A. M., Sandberg, S. P., Marchbanks, R. D., and Hardesty, R. M.: Properties of the offshore low level jet and rotor layer wind shear as measured by scanning Doppler Lidar, Wind Energy, 20, 987–1002, https://doi.org/10.1002/we.2075, 2017.

Ping, B., Su, F., and Meng, Y.: An Improved DINEOF Algorithm for Filling Missing Values in Spatio-Temporal Sea Surface Temperature Data, PLOS ONE, 11, e0155928, https://doi.org/10.1371/journal.pone.0155928, 2016.

Powers, J. G., Klemp, J. B., Skamarock, W. C., Davis, C. A., Dudhia, J., Gill, D. O., Coen, J. L., Gochis, D. J., Ahmadov, R., Peckham, S. E., Grell, G. A., Michalakes, J., Trahan, S., Benjamin, S. G., Alexander, C. R., Dimego, G. J., Wang, W., Schwartz, C. S., Romine, G. S., Liu, Z., Snyder, C., Chen, F., Barlage, M. J., Yu, W., and Duda, M. G.: The Weather Research and Forecasting Model: Overview, System Efforts, and Future Directions, B. Am. Meteorol. Soc., 98, 1717–1737, https://doi.org/10.1175/BAMS-D-15-00308.1, 2017.

Schmit, T. J., Gunshor, M. M., Menzel, W. P., Gurka, J. J., Li, J., and Bachmeier, A. S.: Introducing the next-generation Advanced Baseline Imager on GOES-R, B. Am. Meteorol. Soc., 86, 1079–1096, 2005.

Schmit, T. J., Li, J., Li, J., Feltz, W. F., Gurka, J. J., Goldberg, M. D., and Schrab, K. J.: The GOES-R Advanced Baseline Imager and the Continuation of Current Sounder Products, J. Appl. Meteorol. Clim., 47, 2696–2711, https://doi.org/10.1175/2008JAMC1858.1, 2008.

Schmit, T. J., Griffith, P., Gunshor, M. M., Daniels, J. M., Goodman, S. J., and Lebair, W. J.: A closer look at the ABI on the GOES-R series, B. Am. Meteorol. Soc., 98, 681–698, 2017.

Schoenberg Ferrier, B.: A double-moment multiple-phase four-class bulk ice scheme. Part I: Description, J. Atmos. Sci., 51, 249–280, 1994.

Shimada, S., Ohsawa, T., Kogaki, T., Steinfeld, G., and Heinemann, D.: Effects of sea surface temperature accuracy on offshore wind resource assessment using a mesoscale model, Wind Energy, 18, 1839–1854, https://doi.org/10.1002/we.1796, 2015.

Stark, J. D., Donlon, C. J., Martin, M. J., and McCulloch, M. E.: OSTIA: An operational, high resolution, real time, global sea surface temperature analysis system, OCEANS 2007 – Europe, 2007, 1–4, https://doi.org/10.1109/OCEANSE.2007.4302251, 2007.

Stull, R. B.: Practical meteorology: an algebra-based survey of atmospheric science, University of British Columbia, 2015.

Tewari, M., Chen, F., Wang, W., Dudhia, J., LeMone, M., Mitchell, K., Ek, M., Gayno, G., Wegiel, J., and Cuenca, R. H.: Implementation and verification of the unified NOAH land surface model in the WRF model (Formerly Paper Number 17.5), in: Vol. 14, Proceedings of the 20th Conference on Weather Analysis and Forecasting/16th Conference on Numerical Weather Prediction, Seattle, WA, USA, https://ams.confex.com/ams/84Annual/techprogram/paper_69061.htm (last access: 19 December 2022), 2004.

Xia, G., Draxl, C., Optis, M., and Redfern, S.: Detecting and characterizing simulated sea breezes over the US northeastern coast with implications for offshore wind energy, Wind Energ. Sci., 7, 815–829, https://doi.org/10.5194/wes-7-815-2022, 2022.
