the Creative Commons Attribution 4.0 License.

the Creative Commons Attribution 4.0 License.

Overview of normal behavior modeling approaches for SCADA-based wind turbine condition monitoring demonstrated on data from operational wind farms

Xavier Chesterman

Timothy Verstraeten

Pieter-Jan Daems

Ann Nowé

Jan Helsen

Condition monitoring and failure prediction for wind turbines currently comprise a hot research topic. This follows from the fact that investments in the wind energy sector have increased dramatically due to the transition to renewable energy production. This paper reviews and implements several techniques from state-of-the-art research on condition monitoring for wind turbines using SCADA data and the normal behavior modeling framework. The first part of the paper consists of an in-depth overview of the current state of the art. In the second part, several techniques from the overview are implemented and compared using data (SCADA and failure data) from five operational wind farms. To this end, six demonstration experiments are designed. The first five experiments test different techniques for the modeling of normal behavior. The sixth experiment compares several techniques that can be used for identifying anomalous patterns in the prediction error. The selection of the tested techniques is driven by requirements from industrial partners, e.g., a limited number of training data and low training and maintenance costs of the models. The paper concludes with several directions for future work.

- Article

(7582 KB) - Full-text XML

- BibTeX

- EndNote

In recent years, investments in renewable energy sources like wind and solar energy have increased significantly. This is the result of goals set in climate change agreements and changes in the geopolitical situation. According to the Global Wind Report 2022, an additional 93.6 GW of wind energy production capacity was installed in 2021. This brings the total to 837 GW, which corresponds to a 12 % increase compared to the previous year (Lee and Zhao, 2022). To keep the transition on track, the profitability of the investments needs to be guaranteed. Furthermore, to keep the European economy competitive in the globalized market, the price of energy production using wind turbines needs to be kept as low as possible. Both depend to a large extent on the maintenance costs.

According to Pfaffel et al. (2017), recent studies have shown that the operation and maintenance of wind turbines make up 25 %–40 % of the levelized cost of energy. A more detailed analysis shows that premature failures due to excessive wear play a considerable role. These are caused by, among other things, high loads due to environmental conditions and aggressive control actions (Verstraeten et al., 2019; Tazi et al., 2017; Greco et al., 2013). If it were possible to identify these types of failures well in advance, it would create the opportunity to avoid unexpected downtime and organize the maintenance of turbines more optimally. This in turn would result in increased production and a further reduction in maintenance costs, which would improve the profitability of the investments and reduce wind energy prices.

This paper gives an overview of the current state of the art on condition monitoring for wind farms using SCADA data and the normal behavior modeling (NBM) framework. The focus on SCADA data is motivated by the fact that they are an inexpensive source of information that are readily available. This is valuable in an industrial context where adding new sensors is expensive and not straightforward. The focus on the NBM methodology can be justified by the fact that it has shown its merits and that properly trained NBM models can result in interesting engineering insights. Several techniques used in the state-of-the-art research are also implemented and compared. For this, 10 min SCADA data from five different wind farms are used. Furthermore, failure information is also available for these wind farms. More specifically, there is information on generator bearing, generator fan, and rotor brush high-temperature failures. The different techniques are implemented and compared using six demonstration experiments. Five experiments focus on NBM, and one focuses on the analysis of prediction error. By conducting a comparative analysis and discussion of the results on real data, a better understanding can be achieved of the performance of different techniques applied to real data. Because an exhaustive overview is unfeasible, only a limited selection can be discussed. This selection is based on several assumptions, e.g., that there are only a relatively limited number of training data and a limited amount of time and that due to maintenance constraints the complexity of the methodology needs to be kept as low as possible. These constraints are based on feedback received from several industrial partners.

The paper is built up as follows. The first section is the Introduction. The second section discusses the current state of the art. In the third section, an experimental methodology is designed that combines, compares, and demonstrates the performance of several techniques mentioned in the state-of-the-art overview. The fourth part is the comparative analysis of several techniques from the state of the art. The fifth and last part is the conclusion, which also includes a discussion of possible future directions for research.

Failure prediction on wind turbines using SCADA data is a hot research topic. This is due to the fact that over time more sensor data have become available (Helsen, 2021). There are several different families of methodologies that compete in this domain. According to Helbing and Ritter (2018), the methodologies can be divided into model-based signal processing and data-driven methods. An alternative classification can be found in Black et al. (2021), where a distinction is made between (1) trending, (2) clustering, (3) NBM, (4) damage modeling, (5) alarm assessment, and (6) performance monitoring. In Tautz-Weinert and Watson (2017) five categories are identified: (1) trending, (2) clustering, (3) NBM, (4) damage modeling, and (5) assessment of alarms and expert systems.

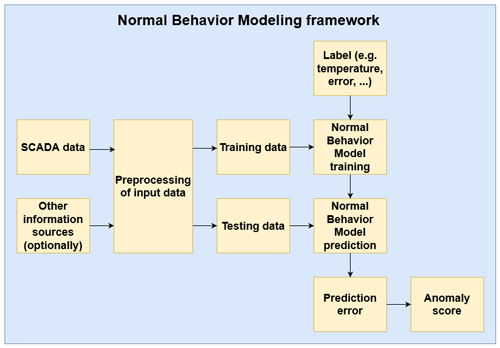

The NBM methodological family is very diverse. Many different algorithms can be used to model the “normal” or “healthy” behavior of a wind turbine signal. However, in all this diversity, there are several commonalities. Figure 1 gives an overview of a standard NBM flow. The SCADA data are ingested by the pipeline. The first step is splitting the data into a training and testing dataset. This is done prior to the preprocessing and modeling steps to avoid “information leakage”. The testing dataset is a random subsample that is set aside for the final validation of the methodology and should not be used during training. How this split is made depends on the type and the number of data. Often 80 %–20 % or 70 %–30 % random splits are made. However, other options are possible. If the data are time series, which is the case when using SCADA data, and the models used as NBM use lagged predictors, then the train–test split should be done more carefully so that the relation between the target and the lagged predictors is not broken. A possibility is assigning the first 70 % of the observations (based on their timestamp) to the training and the rest to the testing dataset. In the next step, the data are preprocessed. This is done to clean the signals (e.g., removing measurement errors, filling in missing values) and in some cases to reduce the noisiness of the signals (e.g., binning). Filtering is sometimes used if it is expected that the relation between the signals is influenced by certain other factors (e.g., wind turbine states). The preprocessing is done on the training and testing dataset separately. However, the same techniques are used on both datasets.

In the next step, the training dataset is used to learn or train the normal behavior model. For this, a health label of some kind is required. In the case of SCADA-based anomaly detection for wind turbines, this is in general a temperature signal that is related to the failure that needs to be detected. For example, if the research is focused on predicting generator bearing failures, then the label might be the temperature of a generator bearing. This means that it is a supervised regression problem. Many different algorithms are suitable as NBM models, e.g., ordinary least squares (OLS), random forest (RF), support vector machine (SVM), neural network (NN), and long short-term memory (LSTM). The NBM model is trained on healthy data (meaning not polluted with anomalies that can be associated with a failure). Once the NBM model has been trained, it can be used for predicting the expected normal behavior using the test dataset. In the next step, the difference between the predicted and the observed behavior is analyzed. If there is a large deviation between the two, this can be considered evidence of a problem. The deviation is in general transformed into an anomaly score that says something meaningful about for example the probability of failure or the remaining useful life (RUL).

In what follows an overview is given of different techniques that are used in the state-of-the-art literature for each step of the pipeline. The papers that will be discussed in this section have the following properties: firstly, they are based on wind turbine SCADA data; secondly, they perform condition monitoring and anomaly detection on temperature signals of the turbine (this excludes for example research that focuses on the power curve); and thirdly, they follow the NBM methodology. By limiting the scope of the overview, it can be more exhaustive and give the reader a better insight into what has been tried in the literature.

2.1 Preprocessing techniques

Preprocessing is an important, although often somewhat underexposed, part of the NBM pipeline. Decisions taken during this step can influence the training and performance of the NBM models later on. Different preprocessing techniques exist and have been used in recent research. The choice of a technique is to a certain extent guided by the properties of the input data; e.g., for time series data, the order of the data points, as well as the relation between them, is relevant. This means that only preprocessing techniques that retain this property of the data should be used. But even then multiple preprocessing techniques are usable. Why a certain technique is chosen over a different one is often not thoroughly explained in papers. This subsection attempts to give an overview of which techniques are used in current state-of-the-art research. An analysis of the literature shows that the preprocessing of the data is used for among other things the handling of missing values, outliers, noise reduction, filtering, and transforming the data.

Missing values can be problematic for certain statistical and machine learning models. For this reason, they need to be treated or filled in properly. Several techniques are used in the literature. A first technique is removing the observations with missing data (see Maron et al., 2022; Miele et al., 2022; Cui et al., 2018; and Bangalore et al., 2017). This can be difficult when time series modeling is used. Furthermore, the question needs to be asked why the data are missing. If they are not “missing completely at random” (MCAR), this can result in bias (Emmanuel et al., 2021). A different solution is single imputation. A first example of this is carry forward and/or backward. In this technique, the missing value is replaced by the last known value preceding the missing value (carry forward) or the first known value following on from the missing value (carry backward). This is used in Bermúdez et al. (2022), Campoverde et al. (2022), Chesterman et al. (2022, 2021), and Mazidi et al. (2017). An alternative is interpolation. This can be done in several ways, e.g., Hermite interpolation (see Bermúdez et al., 2022, and Campoverde et al., 2022) or linear interpolation (see Chesterman et al., 2022, 2021, and Miele et al., 2022). There are several elements that need to be taken into account when using these techniques. First of all, extra care needs to be taken when using them. Large gaps in time series are a problem, since the imputation can become meaningless. This can result in pollution of the relation between multiple signals. High dimensionality of the data can also be a problem (Emmanuel et al., 2021).

If the number of missing data is fairly limited (and there are no long stretches of missing data), an aggregate like the mean or median can be calculated and used as a proxy. The resulting time series has of course a lower resolution, but the missing values are gone. This method is used in Verma et al. (2022). The success of this methodology of course depends on how many data are missing and on how many data each aggregated value is based on. Another solution is what is called machine-learning-based imputation. This technique uses certain machine learning models to impute the missing values (Emmanuel et al., 2021). There are several ways to do this. For example, clustering algorithms like k-nearest neighbors can be used to find similar complete observations. This is used in Black et al. (2022).

The above overview gives several techniques that have been used in research that focuses on anomaly detection in temperature signals from wind turbines. However, there exist several other techniques that can be used for imputing missing values, e.g., hot-deck imputation, regression imputation, expectation maximization, and multiple imputation (Emmanuel et al., 2021). To the best of the authors' knowledge, no (or a very limited number of) papers in this specific research domain have been written that use these techniques. The lack of research that uses, for example, multiple imputation (like multivariate imputation by chained equations or MICE; van Buren and Groothuis-Oudshoorn, 2011) can be considered a blind spot.

A second problem that might influence the NBM training is outliers. If these occur in sufficient quantity, they can impact the modeling severely. Oftentimes the decision is made in the literature to simply remove them. This of course implies that the outliers can be detected in the first place. This can be done for example by using the interquartile range (see Campoverde et al., 2022), a custom dynamic or user-defined threshold (see Chesterman et al., 2022, 2021, and Castellani et al., 2021), the 5σ rule (see Miele et al., 2022) or clustering (see Cui et al., 2018, and Bangalore et al., 2017). Removing outliers needs to be done carefully to avoid abnormal values associated with the failure of interest also being removed. This requires a good understanding of the data. In some cases the outliers will be clearly visible. This can for example be the case when measurement errors result in values that are multiple times larger or smaller than what is physically possible. In these cases removing the outliers is straightforward. However, in other cases, the difference between outliers or anomalies caused by the failing component and outliers caused by another reason is much less clear. Removing these types of outliers should only be done after careful consideration.

Some papers also use noise reduction techniques. Noise in signals can make it more difficult for the NBM algorithm to model the relation between them. If it is possible to clean the signal, this should be considered, since it will improve the performance of the NBM model. This can be done for example by aggregating the data to a lower resolution (see Chesterman et al., 2022, 2021, and Turnbull et al., 2021) or cleaning or filtering the data using expert knowledge (see Peter et al., 2022; Takanashi et al., 2022; Verma et al., 2022; Turnbull et al., 2021; Udo and Yar, 2021; Beretta et al., 2020; and Kusiak and Li, 2011).

In some cases, it might be useful to transform the data. This can result in features with more favorable properties. For example, principal component analysis (PCA) transformation (see Campoverde et al., 2022, and Castellani et al., 2021) and zero-phase component transformation (see Renström et al., 2020) result in uncorrelated features which are linear combinations of the original signals. This can be beneficial for the training of the NBM. Another transformation that might be done is rebalancing the dataset. This can be necessary when certain operational states of the turbine are underrepresented in the training data. Oversampling of the minority class or undersampling of the majority class is an option. A more sophisticated technique is the synthetic minority oversampling technique (SMOTE) used in for example Verma et al. (2022). Lastly, in some papers, new features are created by clustering the original signals of the SCADA data into several groups using clustering algorithms. In the next step, the new features are used as input to the NBM model (see Liu et al., 2020).

This overview shows that many different preprocessing techniques are available and have been tried. However, the technique choice is often not well motivated in the papers. Also, the impact of a certain technique on the results is in general not extensively discussed, even though it is known from statistical research that this can be significant.

2.2 The data and signals

SCADA data can come in different resolutions. The most available resolution is 10 min, since it reduces the number of data that need to be transmitted (Yang et al., 2014). This means that, for each 10 min window, the dataset contains the average signal value. In general, the SCADA data also contain information on the minimum, maximum, and standard deviation of the signal during the 10 min window. Less common resolutions are for example 1 min and 1 s. In the state-of-the-art literature, the following resolutions can be found:

-

10 min – Bermúdez et al. (2022), Black et al. (2022), Campoverde et al. (2022), Chesterman et al. (2022, 2021), Maron et al. (2022), Mazidi et al. (2017), Miele et al. (2022), Peter et al. (2022), Takanashi et al. (2022), Beretta et al. (2021, 2020), Castellani et al. (2021), Chen et al. (2021), Meyer (2021), Turnbull et al. (2021), Udo and Yar (2021), Liu et al. (2020), McKinnon et al. (2020), Renström et al. (2020), Zhao et al. (2018), Bangalore et al. (2017), Dienst and Beseler (2016), Bangalore and Tjernberg (2015, 2014), Schlechtingen and Santos (2014, 2012), Zaher et al. (2009), Garlick and Watson (2009);

-

5 min – Kusiak and Li (2011);

-

10 s – Kusiak and Verma (2012);

-

1 s – Sun et al. (2016), Li et al. (2014);

-

100 Hz – Verma et al. (2022), Kim et al. (2011).

The 100 Hz data used in for example Verma et al. (2022) come from the Controls Advanced Research Turbine (CART) of the National Renewable Energy Laboratory (NREL). The fact that it is a research turbine makes it possible to sample at much higher rates than what is normally possible. Some papers combine the SCADA data with other information sources like event logs that contain wind turbine alarms (see Miele et al., 2022; Beretta et al., 2020; Renström et al., 2020; and Kusiak and Li, 2011) or vibration data (see Turnbull et al., 2021).

The SCADA data contain information on many different parts of the turbines. This implies that the datasets consist in general of dozens or even hundreds of signals (depending on the turbine type). However, not all of them are relevant to the case that is being solved. Some papers focus on a small subset of expert-selected signals (see for example Peter et al., 2022; Bermúdez et al., 2022; and Chesterman et al., 2022). Other papers use a large subset of signals and reduce the dimensionality of the problem during the preprocessing step or during a model-based automatic feature selection step that extracts the relevant information (see for example Lima et al., 2020; Renström et al., 2020; and Dienst and Beseler, 2016). Some papers select signals based on the internal structure of the wind turbine (for example on the subsystem level; Marti-Puig et al., 2021). The advantage of the first method is that the number of signals used for training is limited, which reduces the computational burden of the training process. The disadvantage is however that for cases in which the expert knowledge is not complete, important signals might be missed. This is less likely when the second method is used. The disadvantage of this method is however that the computational cost is significantly higher and that the selected subset can change over different runs. The third method uses the wind turbine ontology or taxonomy as a guideline. Its performance depends of course on the quality of the ontology or taxonomy.

The signals that are often used for condition monitoring of wind turbines can be more or less divided into three groups: (1) environmental data like wind speed or outside temperature (used in for example Bermúdez et al., 2022; Black et al., 2022; Campoverde et al., 2022; Mazidi et al., 2017; and Miele et al., 2022); (2) operational data from the wind turbine like active power or rotor speed (used in for example Bermúdez et al., 2022; Black et al., 2022; Chesterman et al., 2022; Miele et al., 2022; and Peter et al., 2022); and (3) wind turbine temperature signals like the temperatures of the generator bearings, the temperature of the main shaft bearing, or the temperature of the generator stator (used for example in Bermúdez et al., 2022; Black et al., 2022; Campoverde et al., 2022; Chesterman et al., 2022; and Mazidi et al., 2017). The first two groups are often used by default, independent of the target signal that needs to be modeled or the failure that needs to be detected. This is because they contain information on the wind turbine context (e.g., it is a stormy day, a very hot day, the turbine is derated). The third group of signals is much more tied to the case at hand. For example, generator temperature signals are used if the focus lies on generator failures and gearbox temperature signals are used if gearbox failures need to be detected.

Overall it can be stated that most state-of-the-art research is focused on 10 min SCADA data. This means that there are research opportunities on data with a higher resolution like 1 min or 1 s. Furthermore, several signal or feature selection techniques are used in the literature. However, a thorough examination of their performance (expert-knowledge-based vs. automatic or model-driven vs. ontology- or taxonomy-guided feature selection), advantages, and disadvantages has, according to the best of our knowledge, not been done yet.

2.3 Normal behavior modeling algorithms

The next part of the NBM framework is the algorithm that is used for modeling the normal behavior. In general, a considerable amount of attention is paid to this in the literature. This part models the normal (or healthy) behavior of the signal of interest. For this, the current state-of-the-art literature uses techniques from the statistics and machine learning domains. These domains contain a large variety of algorithms that are suitable for the task. More or less three categories can be distinguished: (1) statistical models, (2) shallow (or traditional) machine learning models, and (3) deep learning models. Although this classification gives the impression that the papers can be assigned to a single category, it is quite often the case that research uses or combines models from multiple categories.

Even though there are examples of recent papers in which statistical techniques are used for the modeling of the normal behavior, they are a minority. Models that are used are for example OLS and autoregressive integrated moving average (ARIMA) (see Chesterman et al., 2022, 2021), where they are used to remove autocorrelation and the correlation with other signals from the target signal. A different kind of statistical algorithm that is occasionally used is the PCA (see Campoverde et al., 2022) and its non-linear variant (see Kim et al., 2011). These are, contrary to the previous algorithms, unsupervised, but they can be used to learn the normal or healthy relations between the signals. In Garlick and Watson (2009) an OLS and an autoregressive exogenous (ARX) model are combined to model the normal behavior, which makes it possible to take time dependencies into account. The advantage of statistical models is that they are relatively simple, computationally lightweight, and data-efficient. They are well studied, and their behavior is well understood. This makes them often suitable as a first-analysis tool or in domains where there are constraints on the computational burden or the number of data that are available. The downside is however that they are relatively simple, which makes them in general unsuitable to model highly complex non-linear dynamics. Whether this is a problem depends of course on the case that needs to be solved.

Techniques from the traditional (shallow) machine learning domain are used more often. By traditional machine learning is meant models like decision trees, random forests, gradient boosting, and support vector machines. These models are more complex than traditional statistical models and are better able to model non-linear dynamics. However, they require in general more training data and time. Examples of algorithms that are used in the current state of the art are tree-based models like random forest (see Chesterman et al., 2022; Turnbull et al., 2021; and Kusiak and Li, 2011) and gradient boosting (see Chesterman et al., 2022; Maron et al., 2022; Beretta et al., 2021, 2020; Udo and Yar, 2021; and Kusiak and Li, 2011) and models like support vector machine and regression (see Chesterman et al., 2022; Castellani et al., 2021; McKinnon et al., 2020; and Kusiak and Li, 2011). Another type of model that is occasionally used is derived from the linear model (OLS) but includes some form of regularization to be better able to cope with high dimensional data and highly correlated features, i.e., least absolute shrinkage and selection operator (LASSO) (see Dienst and Beseler, 2016). The latter model can be situated somewhere between the statistical and traditional machine learning categories.

In recent years deep learning models, e.g., neural networks, have become popular. Deep learning models are more complex than traditional machine learning models. Their advantage is that they are even better at modeling non-linear dynamics. Their disadvantages are that they require even more data, they are computer-intensive to train, and the results are more difficult to interpret. This however has not diminished their popularity, and at the moment they are the most popular model category in the state-of-the-art research. Just like the traditional machine learning domain, the deep learning domain is very diverse. Over the years many different types of models have been developed. These are either completely new models or combinations of already existing deep learning models. Both can be found in the state-of-the-art literature. Deep neural networks are used in Black et al. (2022), Jamil et al. (2022) (transfer learning), Mazidi et al. (2017), Verma et al. (2022), Meyer (2021) (multi-target neural network), Turnbull et al. (2021), Cui et al. (2018), Sun et al. (2016), Bangalore and Tjernberg (2015) (nonlinear autoregressive exogenous model, NARX), Bangalore and Tjernberg (2014), Li et al. (2014), Kusiak and Verma (2012), Kusiak and Li (2011), Zaher et al. (2009). Another popular type of model is the autoencoder (AE). Just like a PCA, this model learns normal or healthy behavior through dimension reduction which makes it ignore noise and anomalies. However, compared to the PCA it is better at learning non-linearities. This model type is used for example in Miele et al. (2022), Chen et al. (2021), Beretta et al. (2020), Renström et al. (2020), and Zhao et al. (2018). Convolutional neural networks (CNNs), originally designed and used for the analysis of images, can also be used for the detection of anomalies and failures. Examples can be found in Bermúdez et al. (2022) (combination of CNN and LSTM), Xiang et al. (2022), Zgraggen et al. (2021), and Liu et al. (2020). Another model that is used is the LSTM. This model is particularly suitable for time series, since it is able to model the time dependencies. This model is used in Bermúdez et al. (2022) and Udo and Yar (2021). Two other models that have also been used but to a much lesser extent are the extreme learning machines (ELMs) (see Marti-Puig et al., 2021) and the generative adversarial network (GAN) (see Peng et al., 2021).

Another type of algorithm that is occasionally used in the literature is based on fuzzy logic. An example of such a model is the adaptive neuro-fuzzy inference system (ANFIS). Papers that use this model are Schlechtingen and Santos (2014, 2012) and Schlechtingen et al. (2013). In Tautz-Weinert and Watson (2016), experiments are performed with, among others, ANFIS and Gaussian process regression. And finally, there is also research that uses copula-based modeling. An example is the research presented in Zhongshan et al. (2018).

Overall it can be stated that the NBM ecosystem is diverse. In recent years, deep learning has become the most popular methodology. The merits of these models are clear from the results. Often they outperform the statistical and traditional machine learning models. However, the question is whether they are always the most suitable methodology for implementation in the field. The data requirements mean that deploying the system quickly on a new wind farm is not possible (transfer learning alleviates this issue to a certain extent). Also, the high computational requirements result in more costly retraining and higher maintenance costs. The question is whether these disadvantages are outweighed by the improved performance once deployed in the field. Not much attention is paid in the literature to this question.

2.4 Algorithms for the analysis of the NBM prediction error

The last step of the NBM methodology is the analysis of the prediction error made by the NBM model. This model predicts the expected normal behavior of a signal. If the true or observed signal deviates abnormally much from this prediction or the deviation shows certain trends, then this might indicate that something is going wrong with the related component and that a failure is imminent. The main goal of the last step is to search for these patterns in the prediction error. There are many different techniques (and combinations of techniques) that can be used for this. There are different ways to classify them. They can be divided by domain. Firstly there are statistics-based methods that use the distribution of the prediction error under healthy conditions to determine a threshold that can be used to classify the prediction errors as normal or anomalous. Secondly, there are methods that are based on models from the statistical process control (SPC) domain. Thirdly there are methods that are based on models from the machine learning domain. A different classification focuses on the number of signals they analyze in a single pass. There are univariate methods, which only take a single signal at a time into consideration. There are also multivariate methods, which look at multiple signals. In general, machine-learning-based methods are multivariate. The SPC-based method can be both, since the univariate control chart algorithms like Shewhart, cumulative sum (CUSUM), and exponential weighted moving average (EWMA) have their multivariate counterparts. However, in the state-of-the-art literature often only the univariate versions are used. The statistics-based methods that use the distribution of the prediction error are in general univariate. An exception to this is the Mahalanobis distance, which is mostly used in a multivariate setting.

The overview of the state of the art given in this paper will use the first classification as a guideline. The technique that uses the distribution of the prediction error to find a suitable threshold to identify anomalies is for example used in Meyer (2021), Zhao et al. (2018), and Kusiak and Verma (2012). The Mahalanobis distance combined with an anomaly threshold is used in Miele et al. (2022), Renström et al. (2020), Cui et al. (2018), and Bangalore et al. (2017). SPC techniques are used in Udo and Yar (2021) (Shewhart control chart), Chesterman et al. (2022) (CUSUM), Chesterman et al. (2021) (CUSUM), Bermúdez et al. (2022) (EWMA), Campoverde et al. (2022) (EWMA), Xiang et al. (2022) (EWMA), and Renström et al. (2020) (EWMA). The machine-learning-based methods for the analysis of the prediction error are in general modifications of traditional machine learning algorithms. For example, the isolation forest, which is used in Beretta et al. (2021, 2020) and McKinnon et al. (2020), is similar to the random forest algorithm, while the one-class SVM, used in Turnbull et al. (2021), Beretta et al. (2020), and McKinnon et al. (2020), is similar to the SVM.

Overall it can be stated that in the current state of the art, multiple techniques are used for the analysis of the prediction error, without a single category clearly having the upper hand. Furthermore, both univariate and multivariate techniques are still used. The multivariate techniques can analyze the prediction errors in multiple signals, which gives them an advantage compared to the univariate techniques. However, their disadvantage is that when analyzing several signals at the same time, a deviation in a single signal might be masked. This is shown in Renström et al. (2020), where the authors observe that when the Mahalanobis distance is calculated on several prediction errors at the same time (multivariate setting), it does not always clearly increase when only a single prediction error deviates. For this reason, they point out that it would be interesting to combine multivariate and univariate techniques.

In this section, the methodology of the experiments is explained, which will be used to demonstrate certain techniques found in the state-of-the-art literature. For this demonstration, an NBM pipeline is designed, which consists of the following steps: data preprocessing, NBM, anomaly detection, and health score calculation. The pipeline is validated on data from five operational wind farms. The data contain information on three types of failures, i.e., generator bearing replacements, generator fan replacements, and rotor brush high-temperature failures. In each step of the pipeline, multiple techniques and configurations are tested and compared. To this end, six experiments are designed. Care is taken to create a lab environment as much as possible. This means that the parts of the pipelines that are not relevant to the experiment are kept constant.

3.1 The input data

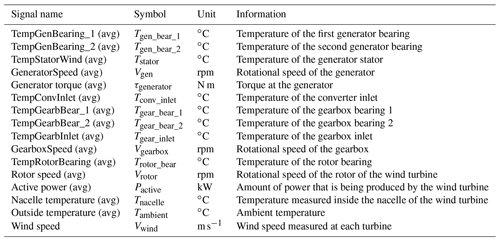

The experiments are based on two data sources (for confidentiality reasons not all the details of the input data can be shared). The first one is 10 min SCADA data originating from five different onshore wind farms (wind farms 1–5). The geographic location of each wind farm is different. The wind turbines in these wind farms are all of the same type with a rated power of 2 MW. The wind farms are relatively small, containing only four to six wind turbines. Some datasets contain a substantial number of missing values. The number of (obvious) measurement errors is low. The SCADA data contain over 100 signals. Only the 10 min averages of the signals related to the drive train or the operational condition of the wind turbine are used in this research. The signals that are selected from the SCADA data are based on the state-of-the-art literature. It is a relatively large subset, larger than what would be used if the selection were based on only expert knowledge. Table 1 gives an overview.

The second source of information comprises the maintenance logs, which contain the replacements and failures. Information is available on three types of events: generator bearing replacements, generator fan replacements, and rotor brush high-temperature failures. For each replacement or failure event, information is available on the turbine ID, the date, and the event type. This is valuable information for validating the methodologies. However using these logs also involves a couple of challenges, e.g., imprecise event dates or missing events. The logs give only an approximate indication of when something went wrong. Furthermore, it needs to be pointed out that a replacement of a component does not necessarily mean that the component failed. Some components will have failed, whereas others will have been replaced as a preventive measure.

3.2 Preprocessing

The first step of the NBM framework or pipeline is the preprocessing of the data (see Fig. 1). Because the main focus of this paper lies on the NBM and the anomaly detection techniques, no extensive comparative analysis of different preprocessing techniques is done. However, the NBM pipeline makes use of several preprocessing techniques, which makes it necessary to at least discuss or mention them. Some techniques are trivial but necessary. They will just be mentioned without going into more detail. The more interesting ones, like for example the healthy data selection or the fleet median normalization, will be discussed more thoroughly.

The NBM framework makes use of the following preprocessing steps:

-

Data cleaning. This involves selection of relevant variables and turbines, renaming of the variables, matching of the SCADA data with the replacement information, and linear interpolation or carry forward/backward of the missing values (similar to what was done in Bermúdez et al., 2022; Campoverde et al., 2022, Mazidi et al., 2017; and Renström et al., 2020, but with linear interpolation). This step will not be discussed in more detail, since it is a trivial transformation.

-

Selecting healthy training data. This rule-based method will be discussed in depth.

-

Determining the operating condition of the turbines. This is done using the IEC 61400-1-12 standard (Commission, 2022) as a guideline.

-

Signal filtering using the wind farm median. This is an important transformation with a significant impact on the results. For this reason, this step is discussed in depth.

-

Removal of sensor measurement errors. The removal of sensor measurement errors is done in a fully automated way. A short discussion of this step is given.

-

Aggregating to the hour level. The purpose of this step is to reduce the amount of noise and the number of missing values in the data. Similar actions were taken for example in Turnbull et al. (2021) and Verma et al. (2022). This step is not always appropriate. This is for example the case for failures that form very fast over time or for signals that exhibit damage patterns that are very short-lived like in vibration analysis. It is up to the data analyst to determine whether the advantages outweigh the disadvantages. This preprocessing step will not be discussed in more detail, since it is a trivial technique.

3.2.1 Signal filtering using the fleet (wind farm) median

The wind turbine signals in the SCADA data are quite complex, meaning there are a lot of factors that influence them. This complexity makes it more difficult to model the normal behavior. This means that if a part of this complexity could be removed, it would simplify the problem, which normally should result in an improved modeling performance and an overall more data-efficient model. This can be accomplished by calculating the fleet median of a signal (e.g., temperature of generator bearing 1 at time t for turbines 1, 2, 3, 4, 5) and subtracting it from the wind turbine signals (e.g., temperature of generator bearing 1 at time t of turbine 1). This technique is also used in Chesterman et al. (2022, 2021). The fleet median can be seen as an implicit normal behavior model. It is implicit because it does not require the selection of predictors or the training of a model. It models the normal behavior as long as 50 % + 1 turbines are acting normally at any given time. By taking the median over a whole farm, it captures farm-wide effects, which are common to all the turbines, which is why it will be called the “common component”. Elements modeled by this component are for example the wind speed, the wind direction, and the outside temperature. By subtracting the median from the wind turbine signal, these farm-wide effects are removed. What is left are turbine-specific effects, which will be called the “idiosyncratic component”. Turbine-specific anomalies should only be visible in there.

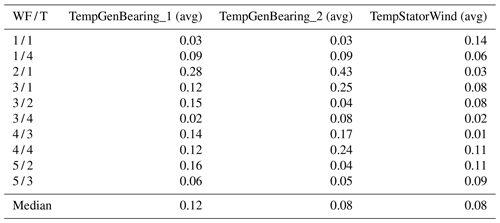

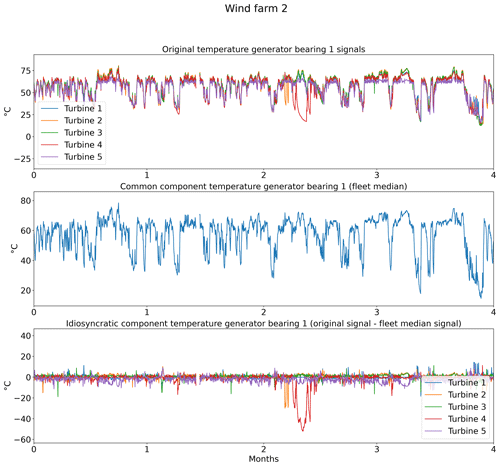

In practice, this preprocessing step means that from each signal in Table 1 the fleet median is subtracted (e.g., Trotor − fleet median Trotor, Trear − fleet median Trear). Figure 2 shows the results of the decomposition for the five turbines of wind farm 2. The top subplot shows the original generator bearing 1 temperature signal. The middle subplot shows the common components. From the plot, it is obvious that the common component captures the general (fleet or farm-wide) trend, while turbine-specific evolutions are ignored. The bottom subplot shows the idiosyncratic components. The power down of turbine 4 is (more) clearly visible in the idiosyncratic component than in the original signal. This indicates that the decomposition is successful. Figure 3 shows that the fleet median is useful for filtering out macro-level or fleet-wide effects like seasonal fluctuations from the data. The common component captures clearly the seasonal fluctuations in the nacelle temperature, which results in an idiosyncratic component that is free of them. This is beneficial because it means that seasonal fluctuations will not influence the false positive rate when less than 1 year of training data is used. Furthermore, the common component also captures the transient behavior like cool-downs that are common to all turbines in the fleet. This means that the idiosyncratic component is free from most transient behavior. What remains of transient behavior are cool-downs that are unique to the turbine. This means that they are caused for example by a turbine that is turned off for maintenance. These events are relatively rare, which means that subtracting the fleet median from the signals has reduced the modeling complexity substantially.

Figure 2Example of the impact of decomposing the generator bearing 1 temperature of the turbines in wind farm 2 in a common and idiosyncratic component.

Figure 3Example of the impact of decomposing the nacelle temperature of the turbines in wind farm 2 in a common and idiosyncratic component.

How well the fleet median removes the macro-level effects depends of course on the quality of the fleet median. An issue that may arise, especially in small wind farms, is that a substantial number of turbines are offline for maintenance. For example, it can be that two turbines are offline for maintenance in a wind farm with four turbines. This will of course have an impact on how representative the median is of the normal behavior. For this reason, a rule-based safeguard is added that under certain conditions will convert the fleet median to NaN. The rules are the following:

-

If fleet size<5, no missing values at time t are allowed.

-

If , at most 20 % missing values at time t are allowed.

-

If fleet size≥10, at most 40 % missing values at time t are allowed.

3.2.2 Selecting healthy training data

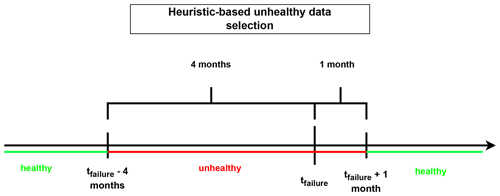

The selection of healthy training data is an important step in the NBM framework. If it is not done properly, it can result in the contamination of the training data with anomalous or “unhealthy” observations, which can disturb the training of the normal behavior. Data are considered healthy if they are not polluted by abnormal behavior caused by a damaged component. Unfortunately, data from real machines do not contain a label that indicates whether they are healthy or not. This means that certain assumptions need to be made about the data, i.e., a “healthy data” rule. The rule used in this paper considers data that precede a failure by less than 4 months (which is the same as what is used in Verma et al., 2022) or follow a failure by less than a month (to avoid test and upstart behavior) unhealthy.

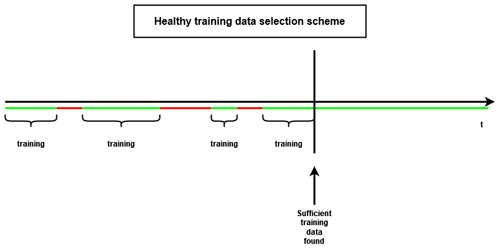

Once the unhealthy data have been identified, healthy data can be selected. The methodology presented here selects the healthy data in a fully automated fashion. The user can determine how many training data (number of observations) per turbine are required for modeling the normal behavior. The healthy data are selected in chronological order from the time series. For most experiments, the first 4380 (which equals roughly 6 months of data) healthy samples of each turbine are selected for training. The selected data are combined into a single training dataset. This implies that only a single model per signal per farm is trained, which results in a large reduction in the training time and more efficient usage of the training data. Figures 4 and 5 give a schematic representation of how the healthy training data are selected.

3.2.3 Handling of measurement or sensor errors

Removing outliers and/or measurement errors is something that is done in most research. The SCADA data used for the demonstration experiments contain a small number of unrealistic values. The technique used here uses thresholds to determine which observations are measurement errors and which are not. The overview of the state of the art shows that this is a technique that is often used. The thresholds used in the demonstration experiments are determined in a fully automated fashion so that they do not need to be manually determined for each signal of each turbine type. This manipulation of the data is not without risk. Only measurement errors should be removed and not the deviations in the signals caused by the failure of the component. The threshold depends on the median value of the original signal (meaning that the fleet median has not been subtracted from the wind turbine signal). This value is multiplied with a scaling factor, which in this paper is set to 1. This threshold is used on the signal from which the fleet median has been subtracted. All absolute values in this signal that are larger than the threshold are considered measurement errors. Concretely this means that values from the wind turbine signal that deviate from the fleet median (on the positive side) by more than its median value are considered measurement errors. Equation (1) shows the procedure using mathematical notation. The measurement errors are replaced with NaN values. In a later step, they are replaced using linear interpolation or if necessary carry forward/backward.

where Errormeasurement is an indicator whether the observation is a measurement error or not, Valuefleet_corrected is the signal value from which the fleet median value has been subtracted, Valueoriginal is the signal value, and outlier_factor is a constant multiplier.

3.3 Normal behavior modeling

The normal behavior model is the core of the NBM framework. It models the normal or healthy relation between one or more predictors and a target signal. Once trained it can be used to predict the expected normal behavior. The differences between the observed and predicted values are then analyzed by anomaly detection algorithms in the final step. In this section, the methodological setup of the experiments will be discussed. To assess the performance of the NBM configurations, two metrics are used. The first metric is the healthy test data RMSE. A proper NBM model should be able to model the healthy data well. However, this metric does not tell us much about how well it can distinguish unhealthy from healthy data. For this reason, a second metric is introduced, which is the difference between the median prediction error in the healthy and unhealthy data (ΔPEunh-h) (see Eq. 2). The idea behind this metric is that good NBM models should have a small prediction error in the healthy data (PEh) (because they are trained on the healthy data) and a large prediction error in the unhealthy data (PEunh) (because something has changed compared to the healthy situation). For the type of failures studied in this paper, ΔPEunh-h should be positive because the damage to the related components should result in higher-than-normal temperatures. The more positive ΔPEunh-h is, the better the NBM because it indicates that the model can better distinguish healthy from unhealthy data.

where ΔPEunh-h is the difference between the median unhealthy and healthy data prediction error, PEunh is the unhealthy data prediction error, and PEh is the healthy data prediction error.

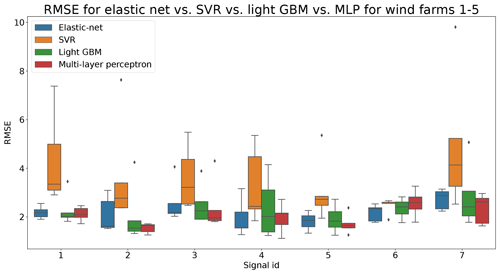

As a baseline the fleet median signal for the healthy training data is used. Since the fleet median also models the normal behavior, although without the requirement of specifying the predictors, it will be called an “implicit NBM” (vs. the “explicit NBM” models that do require a specification of predictors, model, parameters, etc.). The explicit NBM that will mainly be used is the elastic net. It is a simple, transparent, and robust model that can handle large numbers of (correlated) predictors. At the same time, it can work with a limited number of training data. This corresponds to requirements set by industrial partners, e.g., at most only a couple of months of 10 min training data per turbine, low maintenance cost, low training cost, and high transparency. Nevertheless, in experiments 2 and 6 the performance of the elastic net will be compared to that of more complex models from the shallow machine learning domain (i.e., light gradient-boosting machine (light GBM), support vector regression (SVR) in experiment 6) and the deep learning domain (i.e., multi-layer perceptron (MLP) in experiments 2 and 6). This should give an idea of the limits and usability of the elastic net model and whether the trade-off between computational cost and complexity on the one hand and the performance on the other hand is acceptable or not.

3.3.1 Elastic net regression for modeling the normal behavior

It has been shown in the literature that linear models can be good modelers of the normal behavior of wind turbines, and they are also time-efficient (see Dienst and Beseler, 2016). However, by using elastic net (which was developed in Zou and Hastie, 2005), which is basically a linear regressor with L1 and L2 regularizers added to it (see Eq. 3), there are some extra advantages. Firstly, the model is more robust when many (correlated) features are used. Secondly, it also performs an automatic feature selection. This implies that it is possible to model for example the generator bearing 1 temperature by giving the model all the signals that are connected to the whole drive train of the turbine. This reduces the configuration burden for the user. Furthermore, the algorithm works in a transparent way, which avoids the “black box” problem. The number of training data it requires is also favorable compared to more complex machine learning algorithms. A disadvantage of the model is that it is relatively simple, which means that it is not good at modeling highly non-linear dynamics. Whether this is a problem will be tested in experiment 5, where the performance of the elastic net is compared with that of more complex shallow machine learning and deep learning models.

where denotes estimates of the coefficients or weights by the elastic net model, β0 and βj denote coefficients or weights of the model; is the L1 penalty term; is the L2 penalty term, also called Tikhonov regularization; λ1≥0 is the weight of L1 penalty term; and λ2≥0 is the weight of L2 penalty term.

3.3.2 Training of the NBM model

The NBM models are trained on healthy data that are extracted from the SCADA data. Failing to train on more or less healthy data can result in severe degradation of the modeling performance of the NBM (this depends on the relative quantity of anomalies). To reduce the computational and maintenance burden of the pipeline, a single NBM model per signal per wind farm is trained. This means that healthy training data from several wind turbines are combined in a single training dataset. This decision was taken in response to concerns raised by wind turbine operators that if separate models were trained per turbine, this would result in an unacceptable maintenance burden. Combining training data from multiple wind turbines is however not without risks. Structural signal differences (e.g., a turbine with a generator bearing that is always 1 or 2 ∘C warmer than the one of a different turbine under the same conditions) between the different wind turbines are not modeled (unless wind turbine dummies are added to the predictor list). This can result in structural deviations in the prediction errors, e.g., a prediction error that is structurally positive or negative. However, data analysis showed that the temperature or behavior differences between the different bearings are small. There are no indications in the results (see below) that the differences between the different bearings seriously hamper the analysis. Furthermore, experiments in which the model was retrained after each bearing replacement did not show any clear performance improvement.

In general, the training of the NBM models will be done by using the first 4380 healthy observations (or 6 months of data) of each turbine. This number is limited on purpose so that it answers the requirements of the industry. Fewer training data mean that new wind turbines can more easily be added to the anomaly detection system (less startup time). The subtraction of the fleet median from the wind turbine signals neutralizes seasonal fluctuations. The NBM models are trained on the training data using a full grid search or a random grid search when the number of hyperparameter combinations is large, over sensible ranges for the hyperparameters. To avoid overfitting 5-fold cross-validation (CV) is used. The trained model is tested on the test dataset to assess the performance. For each model, the healthy test data RMSE is calculated. This is used to compare the models from the different experiments. The third experiment examines the impact of further reducing the quantity of training data to 2 months per turbine.

3.4 The anomaly detection procedure

The trained NBM model is used to predict the expected normal behavior. The prediction error in the model indicates how anomalous the observed behavior is. As shown in the state-of-the-art overview, there are many different anomaly detection techniques that can be used to analyze it. The techniques used in the sixth experiment are based on univariate statistical techniques that are transparent, robust, and computationally light. More specifically, two different techniques are tested. The first technique is based on the prediction error distribution. The second technique is based on a technique from the SPC domain.

The first technique is most suitable for identifying point anomalies. It is based on the principle of iterative outlier detection (IOD) (also called iterative outlier removal). This means that outliers are removed over several iterations until the outlier thresholds (these are the thresholds that determine which observations are outliers and which are not) have stabilized. To make these thresholds more robust against outliers, the standard deviation is approximated by the median absolute deviation (MAD) (see Eqs. 4 and 5, with k=1.4826). The anomaly scores are calculated using Eq. (6).

where Xi is the signal observation at time t=i, is the signal median, and MAD is the median absolute deviation.

where is a robust estimate of the standard deviation signal, k is a constant multiplier or scaler, and MAD is the median absolute deviation.

where idio_comp is the idiosyncratic component, medianidio_comp is the median idiosyncratic component, and is a robust estimation of the standard deviation.

In the next step, the anomaly scores are transformed into health scores. This is done by calculating the moving average of the anomaly scores for different windows (1, 10, 30, 90, and 180 d). For these moving averages, upper and lower bounds are calculated. This is done by combining the moving averages with the same window length of the same signals from the different wind turbines and calculating Tukey's fences. Three positive thresholds are used to determine the moving-average anomaly score. Next, the sum of the moving-average anomaly scores is taken over the different windows for each time step t. This sum is the health score and determines the health category (Eq. 8).

where MAwinx is the moving average with window length x, is the quantile of the moving-average distribution, and MAanomaly score win x is the moving average of the anomaly score for window length x.

where MAanomaly score win x is the moving average of the anomaly score over a window with length x.

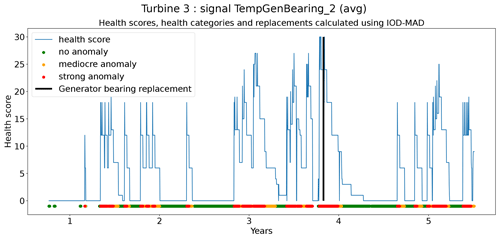

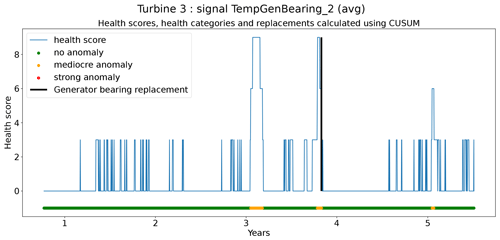

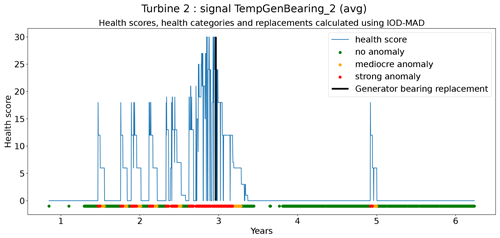

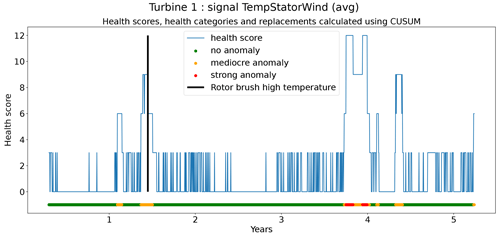

The second technique is based on CUSUM (Page, 1955), which comes from the statistical process control (SPC) domain. CUSUM is designed to be more sensitive to small changes in the mean than for example Shewhart charts (used for example in Udo and Yar, 2021). The algorithm is run with different subgroup sizes, e.g., 10, 30, 90, and 180 d. Instead of using the subgroup mean and the overall mean, the subgroup median and overall median are used. The standard deviation of the subgroups is replaced with the robust standard deviation estimated using the MAD (see Eq. 5). This makes the algorithm more robust against anomalous trends. For each subgroup size, anomaly thresholds are calculated using Eq. (7). For each signal, there are three thresholds. These are common for all turbines in the wind farm. The signal health scores are calculated by summing the anomaly scores for the different subgroup sizes (meaning for each time step t the sum is taken over all the subgroup sizes) (Eq. 8). The health category is calculated using Eq. (9).

Assessing the performance of the anomaly detection algorithms on real data is a non-trivial task due to data imperfections. Imprecisions in the replacement dates, problems that are not resolved after a first attempt, incomplete event lists, preventive maintenance, etc. make it hard to automate the validation process. This means that each detection or non-detection needs to be validated by a human. Also, it introduces a certain inexactness into the validation process. For this reason, a somewhat different validation procedure will be used. The performance of the anomaly detection algorithms is assessed by calculating the percentage of failures that are correctly identified. This is the case when a cluster of bad health is found around the time of the failure. The ratio of false positives is also estimated. This is done using the following methodology. Firstly, turbines are selected that have experienced no known failures. This is the case for 10 turbines in total. For those turbines, it can be assumed that the components were probably relatively healthy during the observation period. This means that the number of bad health observations will be fairly limited. Bad health observations that are found for those turbines are probably false positives. For each signal, the percentage of “bad” health observations is calculated. The median ratio for each signal over all the selected turbines is used as an approximation of the false positive ratio of the anomaly detection model.

3.5 The experiments

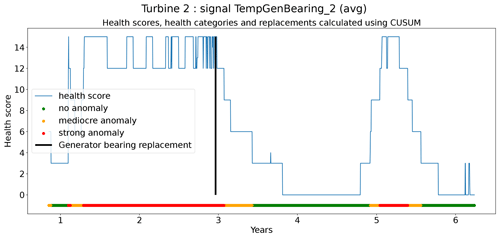

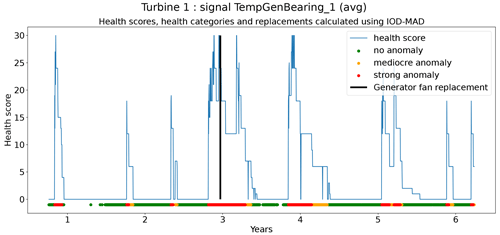

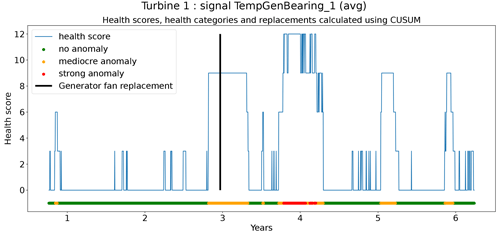

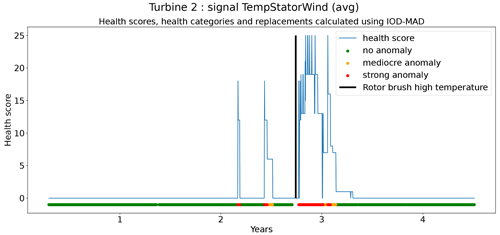

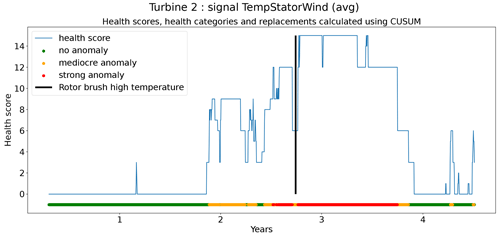

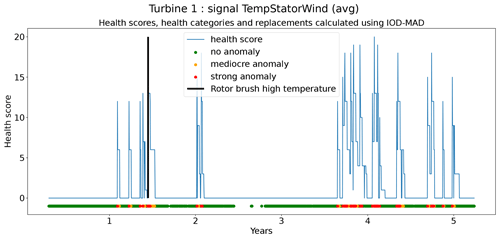

In total six demonstration experiments will be conducted. Five experiments will focus on the NBM model, and one experiment will focus on the anomaly detection algorithms. Experiment 1 compares the performance of the base elastic net regression with that of the implicit NBM. Experiment 2 evaluates the added value of using lagged predictors. Lagged predictors have also been used in Garlick and Watson (2009). Experiment 3 analyzes the impact of reducing the quantity of training data from 6 months per turbine to 2 months. In the state of the art, different numbers of training data are used. This is mainly driven by the number of data available. Experiment 4 discusses the added value of PCA-transformed input for the elastic net. Using PCA for preprocessing of the data is also done in Campoverde et al. (2022). Experiment 5 examines the added value of using more complex machine learning models like SVR (with a radial kernel) and light GBM. The performance of these models compared to that of the elastic net will say something about the importance of non-linearities. Experiment 6 compares the performance of the IOD–MAD and the CUSUM anomaly detection algorithms. For the analysis, the prediction error in the base elastic net model is used.

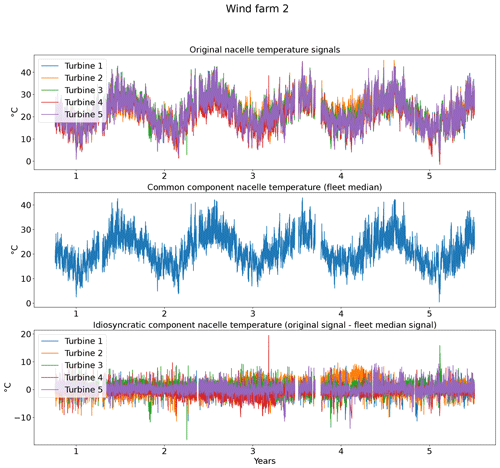

4.1 Experiment 1: the added value of using the elastic net regression model on top of the results of the implicit NBM

The pipeline configuration is as follows: implicit NBM based on fleet median, explicit NBM based on elastic net regression, heuristic-based healthy data selection, full-grid-search hyperparameter tuning (5-fold CV), and 6 months of training data per turbine.

The first experiment investigates the usefulness of adding an explicit NBM (elastic net regression) model to the pipeline. One of the downsides of the implicit NBM (fleet median) is that it is unable to model turbine-specific transient behavior. Whether this is a serious problem depends on the case. However, if it is a problem, it can be solved by adding an explicit NBM to the pipeline. If the above reasoning is correct, it can be expected that the healthy test data RMSE will decrease considerably if the elastic net regression is added to the pipeline.

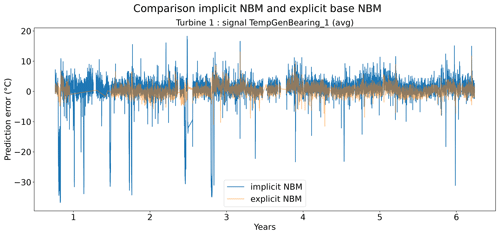

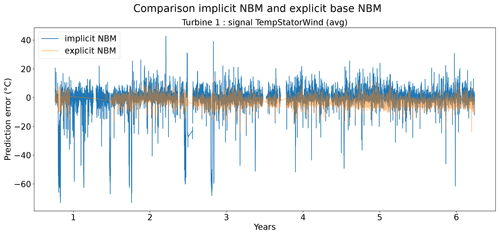

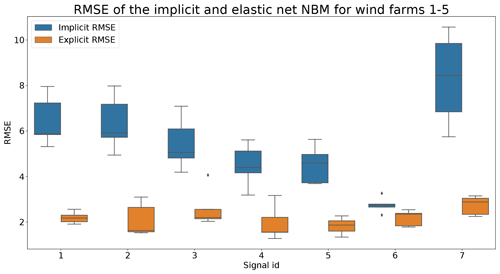

Figures 6 and 7 show that the prediction error when using the elastic net is indeed smaller than when only the implicit NBM is used. The most obvious improvement is that the large negative spikes in the prediction error in the implicit NBM, which correspond to cool-downs caused by power downs of the turbine, are much smaller in the prediction error made by the elastic net. This indicates that the elastic net is modeling the transient behavior to a certain extent. The error is however still larger during transient phases than during steady-state phases. The healthy test data RMSEs in Fig. 8 further support the findings that the elastic net is a useful addition to the pipeline. The RMSEs of the prediction errors are substantially smaller when the elastic net is used.

Figure 6Prediction error in explicit and implicit NBM model for the TempGenBearing_1 (avg) signal of turbine 1.

Figure 7Prediction error in explicit and implicit NBM model for the TempStatorWind (avg) signal of turbine 1.

Figure 8Comparison of RMSE of implicit and explicit NBM for wind farms 1–5. Signal ID 1: TempGearbBear_1 (avg); signal ID 2: TempGearbBear_2 (avg); signal ID 3: TempGearbInlet (avg); signal ID 4: TempGenBearing_1 (avg); signal ID 5: TempGenBearing_2 (avg); signal ID 6: TempRotorBearing (avg); signal ID 7: TempStatorWind (avg).

Based on the results of the first experiment, it can be concluded that using the elastic net has a clear added value. The healthy test data RMSE is always smaller for the pipeline with the explicit NBM. The fact that the RMSE of the elastic net model is quite small shows that relatively simple and lightweight models can be useful for the modeling of normal behavior.

4.2 Experiment 2: the added value of using lagged predictors

The pipeline configuration is as follows: implicit NBM based on fleet median; explicit NBM based on elastic net regression; heuristic-based healthy data selection; full-grid-search hyperparameter tuning (5-fold CV); 6 months training data per turbine; and lags 1, 2, and 3 of each predictor are added.

In the second experiment, the input data are augmented by adding the lagged values of the input signals (excluding the target signal that is being modeled). The idea behind using the lagged terms is that it makes it possible to model the time dependencies. This can be useful when modeling the transient behavior of the turbine. In steady-state situations where factors like active power change little, the positive impact will most likely be less clear. Three lags () for each input signal are added. This means that the model can look up to 3 h in the past.

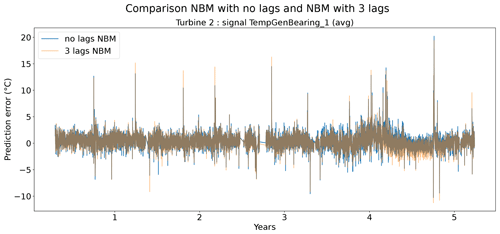

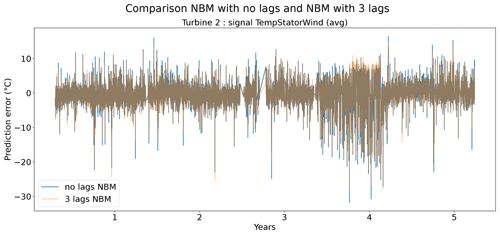

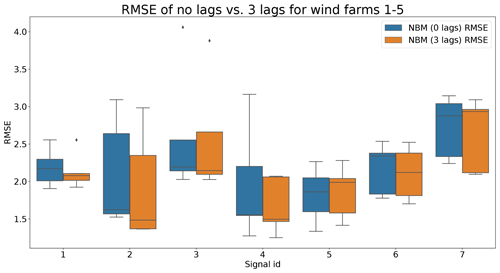

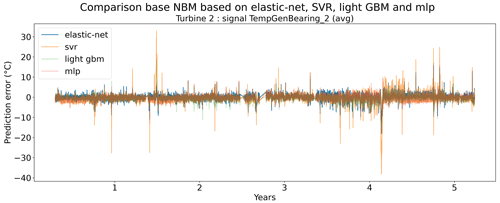

Figures 9 and 10 show that the difference between the prediction errors for the NBM with no lags and the NBM with three lags is marginal. In general, there is no clear difference visible between the two. Surprisingly, there is also no clear improvement to be found in the modeling of the transient behavior. Figure 11 gives an overview of the RMSE in the healthy data for all the target signals for the turbines in wind farms 1–5. The results show that adding the three lags to the model results for a majority of the signals in a marginal reduction in the median healthy test data RMSE.

Figure 9Prediction error in explicit and implicit NBM model for the TempGenBearing_1 (avg) signal of turbine 2.

Figure 10Prediction error explicit and implicit NBM model for the TempStatorWind (avg) signal of turbine 2.

Figure 11Comparison of RMSE of the no lags and the three lags NBM for wind farms 1–5. Signal ID 1: TempGearbBear_1 (avg); signal ID 2: TempGearbBear_2 (avg); signal ID 3: TempGearbInlet (avg); signal ID 4: TempGenBearing_1 (avg); signal ID 5: TempGenBearing_2 (avg); signal ID 6: TempRotorBearing (avg); signal ID 7: TempStatorWind (avg).

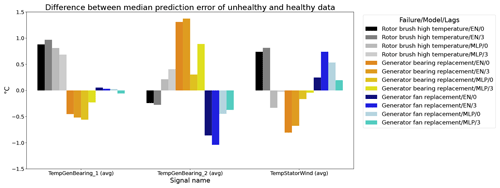

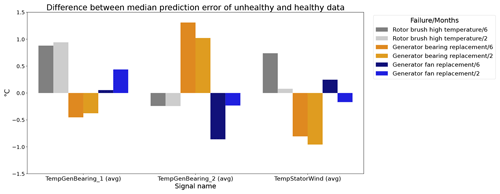

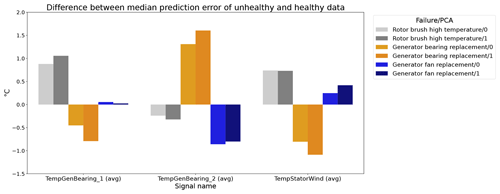

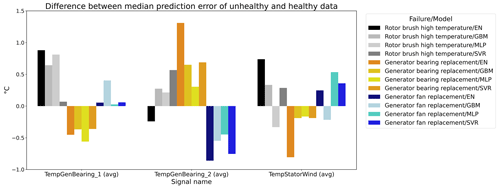

Figure 12 shows the difference between the unhealthy and healthy median prediction errors (ΔPEunh-h calculated using Eq. 2) for the elastic net and multi-layer perceptron (MLP). The figure focuses on the results for three target signals, i.e., TempGenBearing_1 (avg) (Tgen_bear_1), TempGenBearing_2 (avg) (Tgen_bear_2), and TempStatorWind (avg) (Tstator). For the three failures that are being examined, i.e., the rotor brush high-temperature failure, the generator bearing failure and the generator fan failure, it is assumed that the degradation of the component can be observed directly or indirectly in the Tstator, Tgen_bear_1, and Tgen_bear_2 and the Tstator, Tgen_bear_1, and Tgen_bear_2 respectively. More specifically, in all three cases, an increase in the temperatures is expected when the component is damaged. This means that ΔPEunh-h should be positive. The more positive it is, the more useful the NBM is for anomaly detection.

Figure 12Difference between the median prediction error in the unhealthy and healthy data for elastic net and MLP models with no or three lags of the predictor variables. EN: elastic net; MLP: multi-layer perceptron; 0: no predictor lags; 3: three predictor lags (xt−1, xt−2, xt−3).

The results in Fig. 12 show that for the elastic net the ΔPEunh-h only marginally increases when three predictor lags are used. For rotor brush high-temperature failures, ΔPEunh-h is clearly positive for the elastic net model with zero or three lagged predictors. This corresponds with the expectations. However, ΔPEunh-h is also clearly positive for Tgen_bear_1. This is more difficult to explain, since it is unlikely that the rotor brush high-temperature failure can be linked to abnormally high temperatures at the first generator bearing. For the generator bearing failure, the ΔPEunh-h is clearly positive for Tgen_bear_2. This is also in line with expectations. Since ΔPEunh-h is only positive for the second generator bearing and not for the first generator bearing, it is likely that most of the bearing failures happened at the second generator bearing. Unfortunately, the replacement information received from the wind turbine operator does not indicate which bearing has failed, so this statement can not be verified. For the generator fan failures, the ΔPEunh-h is clearly positive for the elastic net with three lags. This is not the case for the other signals, which is quite surprising, since the hypothesis is that a generator fan failure should be indirectly visible in all three generator signals. Furthermore, there is no evidence that the MLP (with zero or three lags) improves upon the elastic net model. The meaning of this will be discussed below. The results in Fig. 12 give only a first indication of whether the NBM is useful for anomaly detection or not. The lack of a clear positive ΔPEunh-h for signals where it is expected to be positive does not mean that all is lost. The anomaly detection techniques discussed in Experiment 6 are more sensitive for small deviations. This means that they can in some cases still detect anomalies even though the ΔPEunh-h is not clearly positive.

From the results of the second experiment, it can be concluded that the addition of three lags only marginally improves the model accuracy using healthy data. There is some evidence that the addition of the predictor lags results in NBM models with more anomaly detection potential. However, the improvement is in general small. Taking into account that adding the lags of all the predictors results in a strong increase in the dimensionality of the problem and the computational time, it is debatable whether the (limited) performance gains outweigh the extra cost. Reasons for the low added value of the lags can perhaps be an insufficient number of lags, a lack of information on the dynamics in the aggregated SCADA data, or the combination of transient and non-transient behavior. The first hypothesis seems to be unlikely, since limited experimentation using more lags showed no clear improvement in performance. The second hypothesis is possible due to the fact that subtracting the fleet median from the SCADA data signals (see Preprocessing section) results in less autocorrelation in the data. Also, the aggregation of the data to the hour level will have an impact. This results in lagged predictor values that are less informative. The third hypothesis would imply that the dynamics of the steady state and the transient behavior of the turbine are so different they can not be learned by one elastic net model. This is a possible explanation. A solution to this problem would be to train a separate model for the transient and steady-state behavior or use a more complex model that is better able to learn the differences between the two states. The fact that no performance gains are achieved when using the MLP makes this hypothesis however somewhat less convincing. Nevertheless, to give a conclusive answer to which hypothesis is the correct one, further research is required.

4.3 Experiment 3: impact of reducing the training data to 2 months per turbine instead of 6

The pipeline configuration is as follows: implicit NBM based on fleet median, explicit NBM based on elastic net regression, heuristic-based healthy data selection, full-grid-search hyperparameter tuning (5-fold CV), and 2 months of training data per turbine.

In this experiment, the impact is analyzed of reducing the quantity of training data from 6 months per turbine to 2 months. This is relevant since fewer training data mean less computational and startup time when using the pipeline on a new wind farm. However, reducing the number of training data in general also comes at a cost. The model accuracy tends to decrease. The question is how much and whether it is outweighed by the advantages. Furthermore, since the training data are selected in chronological order (meaning the first X healthy observations), fewer training data mean that it becomes more likely that certain turbine conditions are missed (or are underrepresented in the data). This can for example be the case with long-duration power downs which cause exceptionally low temperatures for certain components. The most likely result will be that those conditions will be less well modeled, resulting in a larger prediction error. Depending on the use case of the pipeline, this might be a problem.

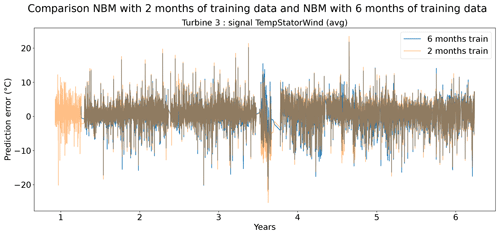

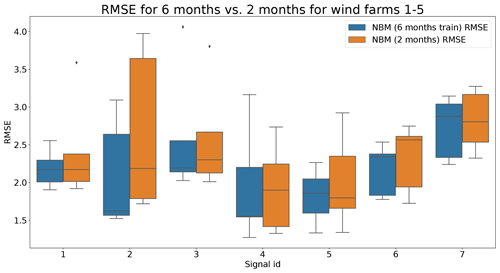

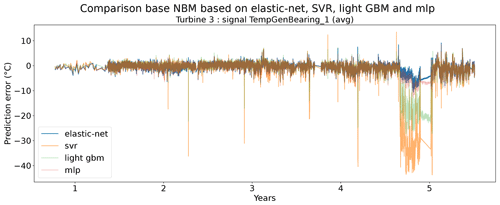

Figures 13 and 14 show indeed that there is some loss of prediction accuracy when the number of training data are reduced from 6 to 2 months. This shows itself as an increase in the prediction error. During steady-state behavior, this loss is not really visible, but during transient behavior, the loss of fit can be substantial (see for example Fig. 13). This is most likely caused by the fact that the training data do not (sufficiently) contain similar transient behavior examples. Figure 15 gives a more general overview of the RMSE results. It shows that the reduction in training data in general leads to an increase in the healthy test data RMSE. This increase is not massive but often also not negligible. For some signals, the median RMSE is slightly smaller. This reduction should not be considered evidence for a superior model but more an indication that the influence of the sample on the results is considerable. This is something that should be taken into account when analyzing the results.

Figure 13Prediction error in NBM models with 6 months and 2 months of training data for the TempGenBearing_2 (avg) signal of turbine 3 of wind farm 5.

Figure 14Prediction error in NBM models with 6 months and 2 months of training data for the TempStatorWind (avg) signal of turbine 3 of wind farm 5.

Figure 15Comparison of RMSE when using 6 months or 2 months of training data for the NBM model for wind farms 1–5. Signal ID 1: TempGearbBear_1 (avg); signal ID 2: TempGearbBear_2 (avg); signal ID 3: TempGearbInlet (avg); signal ID 4: TempGenBearing_1 (avg); signal ID 5: TempGenBearing_2 (avg); signal ID 6: TempRotorBearing (avg); signal ID 7: TempStatorWind (avg).

Figure 16 shows the differences between the median prediction errors in the unhealthy and healthy data (ΔPEunh-h). The results indicate that for detecting rotor brush high-temperature failures, 6 months of training data is better than 2. This is clear from the fact that ΔPEunh-h for Tstator is much larger when using 6 months of training data. The generator bearing failures are detected clearly in Tgen_bear_2. Again it can be observed that ΔPEunh-h is somewhat smaller when using only 2 months of training data. The analysis for the generator fan failures is somewhat less clear. On the one hand, there is, surprisingly, a larger ΔPEunh-h for the model trained on only 2 months of data when using Tgen_bear_2 as the target signal. On the other hand, the model trained on 2 months of training data does not result in a positive ΔPEunh-h for the Tstator signal, contrary to the model with 6 months of training data. Overall it can be stated that fewer training data result in general in NBM models with less potential to be useful for anomaly detection.

Figure 16Difference between the median prediction error in the unhealthy and healthy data (ΔPEunh-h) for elastic net models with 6 months and 2 months of training data; 6: 6 months of training data; 2: 2 months of training data. The results are shown for TempGenBearing_1 (avg) (Tgen_bear_1), TempGenBearing_2 (avg) (Tgen_bear_2), and TempStatorWind (avg) (Tstator).

Experiment 3 has shown that reducing the training data to 2 months results in reduced performance of the model. This is not so much a problem for the behavior states that are frequently shown by the turbine (e.g., steady-state behavior). It does have however a large influence on the states that are rare (e.g., long-term cool-downs). Furthermore, the results also show that the anomaly detection potential of the NBM decreases if the number of training data is reduced to 2 months. Whether the reduced performance of the NBM is a problem really depends on the use case. For some use cases, the larger prediction error in certain rare states is not an issue. For those cases, it might be useful to reduce the number of training data because this will reduce the computational burden of the pipeline. However, if rare behavior is important, the advantages may not outweigh the disadvantages.

4.4 Experiment 4: added value of a PCA transformation step before the explicit NBM

The pipeline configuration is as follows: implicit NBM based on the fleet median, PCA transformation, explicit NBM based on elastic net regression, heuristic-based healthy data selection, full-grid-search hyperparameter tuning (5-fold CV), and 6 months of training data per turbine.

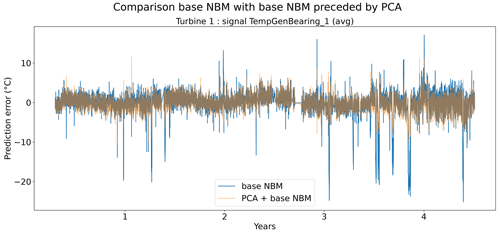

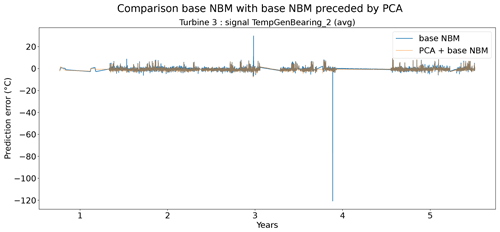

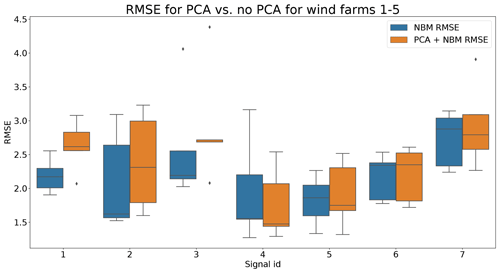

In the fourth experiment, the impact of PCA-transforming (only a transformation, no dimensionality reduction) the data prior to the elastic net modeling is analyzed. Normally the elastic net algorithm should be able to handle high dimensional data with strong correlations between some of the predictors. However, in practice, there might still be some benefit of first PCA-transforming the data. Figure 19 shows that the prediction accuracy in general does not (or only marginally) improve when a PCA transformation step is added to the pipeline. For several signals, the opposite happens. However, these results hide certain interesting side effects. Figure 17 shows that the pipeline with the PCA tends to be a better modeler of the cool-downs than the model without the PCA. Figure 18 also shows that the pipeline without the PCA in some rare cases generates (unrealistically) large prediction errors, while this is not the case when the PCA is used. Furthermore, the training time (and hyperparameter tuning) is considerably shorter for the pipeline with the PCA, even though the number of combinations being tested during tuning is much larger (2728 for the pipeline with PCA, 341 for the pipeline without PCA). This might have something to do with the fact that the new features generated by the PCA transformation are uncorrelated. This can improve the training of the elastic net. For example, for wind farm 5 the hyperparameter tuning without PCA took 4329 s, while with the PCA it took only 3859 s.

Figure 17Prediction error in base NBM and PCA + NBM for the TempGenBearing_1 (avg) signal of turbine 1 of wind farm 1.

Figure 18Prediction error in base NBM and PCA + NBM for the TempGenBearing_2 (avg) signal of turbine 3 of wind farm 3.

Figure 19Comparison of the RMSE when the data are PCA-transformed to when the data are not PCA-transformed for wind farms 1–5. Signal ID 1: TempGearbBear_1 (avg); signal ID 2: TempGearbBear_2 (avg); signal ID 3: TempGearbInlet (avg); signal ID 4: TempGenBearing_1 (avg); signal ID 5: TempGenBearing_2 (avg); signal ID 6: TempRotorBearing (avg); signal ID 7: TempStatorWind (avg).

Figure 20 shows the differences between the unhealthy and the healthy prediction error (ΔPEunh-h). The results show that using the PCA as a preprocessing step has in general a small positive impact on ΔPEunh-h. For the rotor brush high-temperature failures, there is no clear change in the positive difference for the Tstator signal for the model with and without PCA preprocessing. For the generator bearing failures, the positive difference for the Tgen_bear_2 signal is slightly larger when using the model with PCA preprocessing. The same is true for the Tstator signal for the generator fan failures.